A feature of the website I'm working on is being able to POST data to a remote server using a client certificate and TLS.

I have been given a client certificate as a .p12 file and have installed it on the system. The certificate is used in code as follows (but this only seems to work if the certificate is also installed on the system):

```csharp
using (var client = new CertificateWebClient("~/ccert.p12"))
{
    client.UploadFile(endpoint, filepath);
}
```

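```csharp
using System;
using System.Net;
using System.Security.Cryptography.X509Certificates;
using System.Web.Hosting;

public class CertificateWebClient : WebClient
{
    private readonly string _certificateUri;

    public CertificateWebClient(string certificateUri)
    {
        // App-relative path to the .p12 file, e.g. "~/ccert.p12"
        _certificateUri = certificateUri;
    }

    protected override WebRequest GetWebRequest(Uri address)
    {
        HttpWebRequest request = (HttpWebRequest)base.GetWebRequest(address);

        // Accepts any server certificate; this callback is static, so it applies AppDomain-wide
        ServicePointManager.ServerCertificateValidationCallback = delegate { return true; };

        // Map the app-relative path to a physical path and load the client certificate from it
        var clientCertificate = new X509Certificate(HostingEnvironment.MapPath(_certificateUri));
        request.ClientCertificates.Add(clientCertificate);

        return request;
    }
}
```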

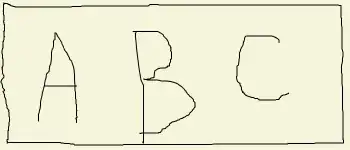

When debugging the application locally, I get the option to allow use of the certificate:

After installing the certificate on the server, I was able to run a small test program outside of IIS, and it uses the certificate without any prompt.
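A diagnostic program along these lines could help narrow down what differs under IIS. It is a hypothetical sketch, not the test program mentioned above: it assumes the certificate was imported into the Local Machine Personal store (`LocalMachine\My`), that its thumbprint is passed on the command line, and .NET Framework 4.6 or later (for `GetRSAPrivateKey`); the `CertificateDiagnostics` name is made up. It prints the Windows account the code runs as (under IIS this would be the app pool identity) and tries to open the certificate's private key, since that is the step most likely to differ between accounts.

```csharp
using System;
using System.Linq;
using System.Security.Cryptography;
using System.Security.Cryptography.X509Certificates;
using System.Security.Principal;

// Hypothetical diagnostic helper; assumes the certificate lives in LocalMachine\My.
public static class CertificateDiagnostics
{
    // Thumbprints copied from the MMC certificate dialog can contain spaces and an
    // invisible left-to-right mark (U+200E); keep only hex digits, upper-cased.
    public static string NormalizeThumbprint(string thumbprint)
    {
        return new string(thumbprint.Where(Uri.IsHexDigit).ToArray()).ToUpperInvariant();
    }

    // Returns the first matching certificate in LocalMachine\My, or null if none is found.
    public static X509Certificate2 FindInLocalMachineStore(string thumbprint)
    {
        var store = new X509Store(StoreName.My, StoreLocation.LocalMachine);
        try
        {
            store.Open(OpenFlags.ReadOnly);
            X509Certificate2Collection matches = store.Certificates.Find(
                X509FindType.FindByThumbprint, NormalizeThumbprint(thumbprint), false);
            return matches.Count > 0 ? matches[0] : null;
        }
        finally
        {
            store.Close();
        }
    }

    public static void Main(string[] args)
    {
        if (args.Length == 0)
        {
            Console.WriteLine("usage: CertificateDiagnostics <thumbprint>");
            return;
        }

        // The Windows account this process runs as.
        Console.WriteLine("Running as: " + WindowsIdentity.GetCurrent().Name);

        X509Certificate2 cert = FindInLocalMachineStore(args[0]);
        if (cert == null)
        {
            Console.WriteLine("Certificate not found in LocalMachine\\My");
            return;
        }

        Console.WriteLine("Subject: " + cert.Subject);
        // HasPrivateKey only reports that a key is associated with the certificate;
        // it can be true even when this account is not allowed to read the key.
        Console.WriteLine("HasPrivateKey: " + cert.HasPrivateKey);

        try
        {
            // Actually opening the key is the real test of access for this account.
            using (RSA key = cert.GetRSAPrivateKey())
            {
                Console.WriteLine(key != null ? "RSA private key opened" : "No RSA private key");
            }
        }
        catch (CryptographicException ex)
        {
            // Typically indicates the account cannot access the key container.
            Console.WriteLine("Could not open private key: " + ex.Message);
        }
    }
}
```

Running the same checks from inside the web application (for example, writing the output to a log instead of the console) would show whether the app pool identity resolves to a different account than the one the console test ran as.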

I can't figure out how to get the site to use the certificate when running under an app pool in IIS.

Everything I have found so far has been about configuring site bindings so that clients can present certificates when connecting to an application hosted in IIS, rather than about the application itself using a client certificate to connect to another server.

In case it makes any difference, this is for IIS 7 on Windows Server 2008 R2.