Assume a static scene and a single camera that moves exactly sideways by a small distance. Given two frames, I compute the optic flow between them (I use OpenCV's calcOpticalFlowFarneback):

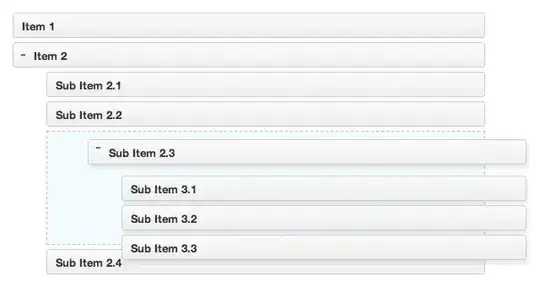

Here the scatter points are detected features, painted in pseudocolor by depth value (red is shallow, close to the camera; blue is more distant). I obtain those depth values by simply inverting the optic flow magnitude: d = 1 / flow. That seems intuitive in a motion-parallax way: the brighter the object, the closer it is to the observer. So there's a cube, exposing its front face and a bit of a side face to the camera.
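As a minimal sketch of this inversion step (not the exact code behind the plot): it assumes the flow has already been sampled at each feature as a `(u, v)` pixel displacement, for example from the array returned by calcOpticalFlowFarneback. The function name and the `eps` guard against zero flow are hypothetical additions for illustration.

```python
import math

def relative_depth_from_flow(flow_vectors, eps=1e-6):
    """Return d = 1 / |flow| for each (u, v) flow vector, in arbitrary units."""
    depths = []
    for u, v in flow_vectors:
        magnitude = math.hypot(u, v)               # flow magnitude in pixels
        depths.append(1.0 / max(magnitude, eps))   # eps avoids division by zero (assumption)
    return depths

# Three hypothetical features: larger flow gives a smaller (closer) depth value.
flows = [(8.0, 0.0), (4.0, 0.0), (2.0, 0.0)]
depths = relative_depth_from_flow(flows)
print(depths)  # [0.125, 0.25, 0.5]
```

The values are only relative: without the baseline and focal length, d is known up to a constant scale.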

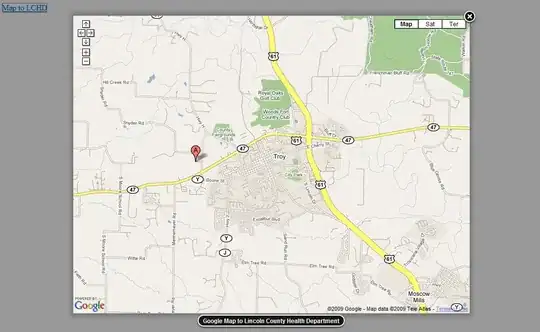

But then I try to project those feature points from the camera plane to real-world coordinates to make a kind of top-view map, where X = (x * d) / f and Y = d (d is depth, x is the pixel coordinate, f is the focal length, and X and Y are real-world coordinates). And here's what I get:

Well, that doesn't look cubic to me. The picture looks skewed to the right. I've spent some time thinking about why, and it seems that 1 / flow is not an accurate depth metric. Playing with different values, for example 1 / power(flow, 1 / 3), I get a better picture:

But, of course, the power of 1 / 3 is just a magic number off the top of my head. The question is: what is the relationship between optic flow and depth in general, and how am I supposed to estimate it for a given scene? We're only considering camera translation here. I've stumbled upon some papers, but had no luck finding a general equation yet. Some, like that one, propose a variation of 1 / flow, which isn't going to work, I guess.
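To make the comparison concrete, here is a minimal sketch of the top-view projection with both depth mappings (1 / flow and 1 / power(flow, 1 / 3)). The function name, the `exponent` parameter and the principal-point offset `cx` are hypothetical; `cx = 0` reproduces X = (x * d) / f as written above, which matches a pinhole model only if x is already measured from the image center (an assumption here).

```python
def top_view_points(x_pixels, flow_magnitudes, f, exponent=1.0, cx=0.0):
    """Map (pixel x, flow magnitude) pairs to top-view (X, Y) points.

    Depth is d = 1 / flow**exponent, then X = (x - cx) * d / f and Y = d.
    """
    points = []
    for x, flow in zip(x_pixels, flow_magnitudes):
        d = 1.0 / flow ** exponent      # exponent=1 is the plain 1 / flow mapping
        X = (x - cx) * d / f            # cx is an assumed principal-point offset
        points.append((X, d))
    return points

# Hypothetical features; f is an assumed focal length in pixels.
xs = [100.0, -50.0]
flows = [8.0, 1.0]
plain = top_view_points(xs, flows, f=500.0)
cube_root = top_view_points(xs, flows, f=500.0, exponent=1.0 / 3.0)
print(plain)
print(cube_root)
```

Plotting both point sets side by side shows how strongly the choice of mapping changes the shape of the map.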

Update

What bothers me a little is that simple geometry points me to the 1 / flow answer too. Optic flow is the same (in my case) as disparity, right? Then using this formula I get d = Bf / (x2 - x1), where B is the distance between the two camera positions, f is the focal length, and x2 - x1 is precisely the optic flow. The focal length is a constant, and B is constant for any two given frames, so that leaves me with 1 / flow again, multiplied by a constant. Do I misunderstand something about what optic flow is?
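A quick numeric check of that geometry, as a sketch under an ideal pinhole model with a purely sideways translation and no rotation. The helper names and the numbers are hypothetical, and `abs` is used because the sign of x2 - x1 depends on the direction of motion.

```python
def project_x(X, Z, f, camera_x=0.0):
    """Pinhole projection of a point at (X, Z) seen from a camera at camera_x."""
    return f * (X - camera_x) / Z

def depth_from_disparity(x1, x2, B, f):
    """d = B * f / |x2 - x1| for a sideways baseline B and focal length f."""
    return B * f / abs(x2 - x1)

f = 512.0        # assumed focal length in pixels
B = 0.25         # assumed sideways camera displacement
X, Z = 0.5, 2.0  # hypothetical scene point, Z is its true depth

x1 = project_x(X, Z, f)               # first frame
x2 = project_x(X, Z, f, camera_x=B)   # second frame, camera shifted by B
recovered = depth_from_disparity(x1, x2, B, f)
print(x1, x2, recovered)  # 128.0 64.0 2.0
```

Under these assumptions the recovered depth equals the true Z, so for ideal data the flow of each point is B * f / Z.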