I am trying to compute the average cell size for the following set of points, shown in the picture below. The picture was generated using gnuplot:

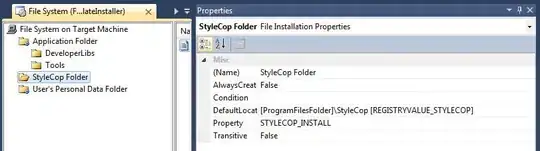

gnuplot> plot "debug.dat" using 1:2

The points are almost aligned on a rectangular grid, but not quite. There seems to be a bias (jitter?) of roughly 10-15% along either X or Y. How can I efficiently compute a partition into tiles such that there is virtually only one point per tile? The tile size would be expressed as (tilex, tiley). I say "virtually" because the 10-15% bias may have moved a point into an adjacent tile.

Just for reference, I have manually sorted (hopefully correctly) and extracted the first 10 points:

-133920,33480

-132480,33476

-131044,33472

-129602,33467

-128162,33463

-139679,34576

-138239,34572

-136799,34568

-135359,34564

-133925,34562

Just for clarification, a valid tile as per the above description would be (1435,1060), but I am really looking for a quick automated way to compute it.
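To make the goal concrete, here is a rough sketch of one possible automated estimate, not a definitive method. It takes, for each point, the smallest offset to a neighbour lying mostly along X and the smallest offset to a neighbour lying mostly along Y, then uses the median of those offsets as the tile size. The names `estimate_tile` and `SAMPLE_POINTS` are made up for this illustration. It assumes the bias is small compared to both tile dimensions, and it compares all pairs, so it is quadratic in the number of points.

```python
from statistics import median

# The ten points listed above, as (x, y) pairs.
SAMPLE_POINTS = [
    (-133920, 33480), (-132480, 33476), (-131044, 33472),
    (-129602, 33467), (-128162, 33463),
    (-139679, 34576), (-138239, 34572), (-136799, 34568),
    (-135359, 34564), (-133925, 34562),
]

def estimate_tile(points):
    """Estimate (tilex, tiley) from nearest-neighbour offsets along each axis.

    Assumption: the bias is small relative to both tile dimensions, so a
    neighbour in the same row has |dx| > |dy| and a neighbour in the same
    column has |dy| >= |dx|.
    """
    steps_x, steps_y = [], []
    for i, (x0, y0) in enumerate(points):
        best_x = best_y = None
        for j, (x1, y1) in enumerate(points):
            if i == j:
                continue
            dx, dy = abs(x1 - x0), abs(y1 - y0)
            if dx > dy:
                # Neighbour lies mostly along X: candidate for the X step.
                if best_x is None or dx < best_x:
                    best_x = dx
            else:
                # Neighbour lies mostly along Y: candidate for the Y step.
                if best_y is None or dy < best_y:
                    best_y = dy
        # Points with no neighbour in a given direction are skipped.
        if best_x is not None:
            steps_x.append(best_x)
        if best_y is not None:
            steps_y.append(best_y)
    # The median keeps a few badly displaced points from skewing the result.
    return median(steps_x), median(steps_y)

if __name__ == "__main__":
    print(estimate_tile(SAMPLE_POINTS))
```

On the ten points above this gives roughly (1440, 1082), which is in the same range as the hand-picked tile. For the full data set, the all-pairs loop would probably need to be replaced by a spatial index or a sort-based neighbour search.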