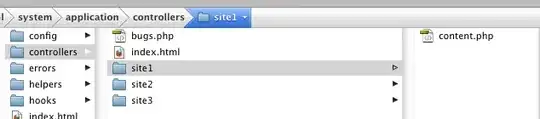

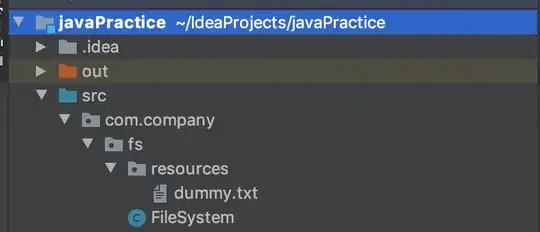

I have enhanced the project job scheduling example from the OptaPlanner examples with these features:

- Priority-based projects: execution order starts from the highest-priority project

- Break time feature: I added another shadow variable named `breakTime`, which is calculated whenever an allocation overlaps with a break time (such as a holiday)

- Changed the value range provider for delay to 15000
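To make the break time feature concrete, here is a rough sketch of how the overlap between an allocation and the break periods could be computed. This is only an illustration under assumptions: the names `BreakTimeCalculator`, `BreakPeriod`, and `overlapWithBreaks` are hypothetical and not part of the OptaPlanner example, times are plain integers in the same unit as the allocation start and end dates, and the break periods are assumed not to overlap each other.

```java
import java.util.List;

// Hypothetical helper for illustration only; not part of the OptaPlanner example.
final class BreakTimeCalculator {

    // A break period (e.g. a holiday) as a half-open interval [start, end).
    static final class BreakPeriod {
        final int start;
        final int end;

        BreakPeriod(int start, int end) {
            this.start = start;
            this.end = end;
        }
    }

    // Returns the total time the interval [startDate, endDate) spends inside break periods.
    // Assumes the break periods do not overlap each other.
    static int overlapWithBreaks(int startDate, int endDate, List<BreakPeriod> breaks) {
        int total = 0;
        for (BreakPeriod b : breaks) {
            int overlapStart = Math.max(startDate, b.start);
            int overlapEnd = Math.min(endDate, b.end);
            if (overlapEnd > overlapStart) {
                total += overlapEnd - overlapStart;
            }
        }
        return total;
    }
}
```

In a setup like the one described, the returned value would be what gets stored in the `breakTime` shadow variable whenever the allocation's start or end date changes.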

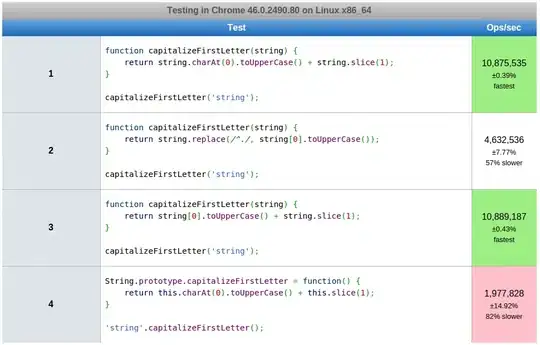

After that I ran the benchmark and got the same result for LA 500, LA 1000, and LA 2000; the report states that all of them are the favorite. Is this a valid benchmark result? Could someone please help me analyze it? I have attached my benchmark result. Thanks.