I am trying to implement deferred shading with OpenGL 4.4 on an NVIDIA GTX 970 with the latest drivers installed.

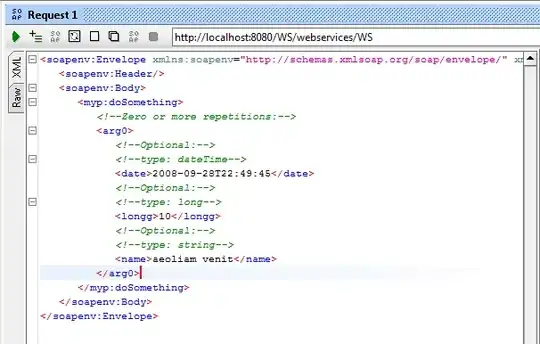

My code worked when rendering directly to the screen. To add a second pass, I create an FBO into which I render my scene, and a quad onto which I render the final image. When I render nothing in the first pass and draw the quad after the second pass, the quad is visible. When I render a mesh (for example a cube) during the first pass, the quad disappears. I also get the following error messages:

The mesh was loaded with AssImp.

I use the following code to create the VBO / VAO:

    void Mesh::genGPUBuffers()
    {
        glGenBuffers(1, &vertexbuffer);
        glBindBuffer(GL_ARRAY_BUFFER, vertexbuffer);
        glBufferData(GL_ARRAY_BUFFER, vertices.size() * sizeof(vec3), &vertices[0], GL_STATIC_DRAW);
        glBindBuffer(GL_ARRAY_BUFFER, 0);

        glGenBuffers(1, &uvbuffer);
        glBindBuffer(GL_ARRAY_BUFFER, uvbuffer);
        glBufferData(GL_ARRAY_BUFFER, uvs.size() * sizeof(vec2), &uvs[0], GL_STATIC_DRAW);
        glBindBuffer(GL_ARRAY_BUFFER, 0);

        glGenBuffers(1, &normalbuffer);
        glBindBuffer(GL_ARRAY_BUFFER, normalbuffer);
        glBufferData(GL_ARRAY_BUFFER, normals.size() * sizeof(vec3), &normals[0], GL_STATIC_DRAW);
        glBindBuffer(GL_ARRAY_BUFFER, 0);

        glGenBuffers(1, &indexbuffer);
        glBindBuffer(GL_ELEMENT_ARRAY_BUFFER, indexbuffer);
        glBufferData(GL_ELEMENT_ARRAY_BUFFER, indices.size() * sizeof(unsigned short), &indices[0], GL_STATIC_DRAW);
        glBindBuffer(GL_ARRAY_BUFFER, 0);
    }

And I render my mesh like this:

    void Mesh::render()
    {
        if (shader != nullptr)
        {
            shader->use();
            shader->setModelMatrix(modelMatrix);
        }

        for (int i = 0x1; i <= 0xB; i++)
        {
            if (texture[i] != nullptr)
            {
                glBindMultiTextureEXT(GL_TEXTURE0 + i, GL_TEXTURE_2D, texture[i]->getTextureID());
            }
        }

        // 1st attribute buffer : vertices
        glEnableVertexAttribArray(0);
        glBindBuffer(GL_ARRAY_BUFFER, vertexbuffer);
        glVertexAttribPointer(
            0,        // attribute
            3,        // size
            GL_FLOAT, // type
            GL_FALSE, // normalized?
            0,        // stride
            (void*)0  // array buffer offset
        );

        // 2nd attribute buffer : UVs
        glEnableVertexAttribArray(1);
        glBindBuffer(GL_ARRAY_BUFFER, uvbuffer);
        glVertexAttribPointer(
            1,        // attribute
            2,        // size
            GL_FLOAT, // type
            GL_FALSE, // normalized?
            0,        // stride
            (void*)0  // array buffer offset
        );

        // 3rd attribute buffer : normals
        glEnableVertexAttribArray(2);
        glBindBuffer(GL_ARRAY_BUFFER, normalbuffer);
        glVertexAttribPointer(
            2,        // attribute
            3,        // size
            GL_FLOAT, // type
            GL_FALSE, // normalized?
            0,        // stride
            (void*)0  // array buffer offset
        );

        // Index buffer
        glBindBuffer(GL_ELEMENT_ARRAY_BUFFER, indexbuffer);

        // Draw the triangles
        glDrawElements(
            GL_TRIANGLES,        // mode
            (GLsizei)indexCount, // count
            GL_UNSIGNED_SHORT,   // type
            (void*)0             // element array buffer offset
        );

        glDisableVertexAttribArray(0);
        glDisableVertexAttribArray(1);
        glDisableVertexAttribArray(2);
    }

With gDEBugger I found out that the error messages come from glVertexAttribPointer. But since gDEBugger does not support OpenGL 4, it throws a lot of errors itself and does not really work.

The FBO is generated like this:

    GLuint depthBuf;
    Texture
        posTex(this),
        normTex(this),
        colorTex(this)
    ;

    // Create and bind the FBO
    glGenFramebuffers(1, &fbo);
    glBindFramebuffer(GL_FRAMEBUFFER, fbo);

    // The depth buffer
    glGenRenderbuffers(1, &depthBuf);
    glBindRenderbuffer(GL_RENDERBUFFER, depthBuf);
    glRenderbufferStorage(GL_RENDERBUFFER, GL_DEPTH_COMPONENT, static_cast<GLsizei>(resolution.x), static_cast<GLsizei>(resolution.y));

    // Create the textures for position, normal and color
    posTex.createGBuf(GL_TEXTURE0, GL_RGB32F, static_cast<int>(resolution.x), static_cast<int>(resolution.y));
    normTex.createGBuf(GL_TEXTURE1, GL_RGB32F, static_cast<int>(resolution.x), static_cast<int>(resolution.y));
    colorTex.createGBuf(GL_TEXTURE2, GL_RGB8, static_cast<int>(resolution.x), static_cast<int>(resolution.y));

    // Attach the textures to the framebuffer
    glFramebufferRenderbuffer(GL_FRAMEBUFFER, GL_DEPTH_ATTACHMENT, GL_RENDERBUFFER, depthBuf);
    glFramebufferTexture2D(GL_FRAMEBUFFER, GL_COLOR_ATTACHMENT0, GL_TEXTURE_2D, posTex.getTextureID(), 0);
    glFramebufferTexture2D(GL_FRAMEBUFFER, GL_COLOR_ATTACHMENT1, GL_TEXTURE_2D, normTex.getTextureID(), 0);
    glFramebufferTexture2D(GL_FRAMEBUFFER, GL_COLOR_ATTACHMENT2, GL_TEXTURE_2D, colorTex.getTextureID(), 0);

    glBindFramebuffer(GL_FRAMEBUFFER, 0);

The createGBuf() function looks like this:

    void C0::Texture::createGBuf(GLenum texUnit, GLenum format, int width, int height)
    {
        this->width = width;
        this->height = height;

        glActiveTexture(texUnit);
        glGenTextures(1, &textureID);
        glBindTexture(GL_TEXTURE_2D, textureID);
        glTexStorage2D(GL_TEXTURE_2D, 1, format, width, height);
        glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_NEAREST);
        glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_NEAREST);
    }