The question I am trying to figure out is:

In this problem we consider the delay introduced by the TCP slow-start phase. Consider a client and a Web server directly connected by one link of rate R. Suppose the client wants to retrieve an object whose size is exactly equal to 15S, where S is the maximum segment size (MSS). Denote the round-trip time between client and server as RTT (assumed to be constant). Ignoring protocol headers, determine the time to retrieve the object (including TCP connection establishment) when

- 4S/R > S/R + RTT > 2S/R

- 8S/R > S/R + RTT > 4S/R

- S/R > RTT

I already have the solution (it's a problem from a textbook), but I do not understand how they arrived at the answer.

- RTT + RTT + S/R + RTT + S/R + RTT + 12S/R = 4 · RTT + 14 · S/R

- RTT + RTT + S/R + RTT + S/R + RTT + S/R + RTT + 8S/R = 5 · RTT + 11 · S/R

- RTT + RTT + S/R + RTT + 14S/R = 3 · RTT + 15 · S/R
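As a sanity check on the arithmetic, the three totals can be reproduced with a small sketch. It assumes the idealized textbook model as I read it: the window starts at one segment and doubles each round, the first ACK of a window comes back S/R + RTT after the server starts sending that window, and the server sits idle if it finishes the window before then. The function name `slow_start_latency` and the sample numbers are my own, not from the book.

```python
def slow_start_latency(num_segments, seg_time, rtt):
    """Total retrieval time under an idealized slow-start model.

    num_segments: object size in segments (15 in this problem)
    seg_time:     time to transmit one segment, i.e. S/R
    rtt:          round-trip time
    """
    # One RTT for the TCP handshake, one RTT for the request to reach
    # the server and the first data to start coming back.
    total = 2 * rtt
    # Pure transmission time of the whole object.
    total += num_segments * seg_time

    window = 1  # segments in the current window
    sent = 0    # segments sent in earlier windows
    # The server can only stall after a window that is not the last one.
    while sent + window < num_segments:
        # Idle time: the first ACK arrives at seg_time + rtt, while the
        # window itself takes window * seg_time to transmit.
        stall = seg_time + rtt - window * seg_time
        total += max(0, stall)
        sent += window
        window *= 2
    return total


# Sample values chosen to satisfy each condition (assumed, with S/R and RTT
# in the same arbitrary time unit).
print(slow_start_latency(15, 1, 2))  # 4S/R > S/R + RTT > 2S/R -> 22 = 4*2 + 14*1
print(slow_start_latency(15, 1, 5))  # 8S/R > S/R + RTT > 4S/R -> 36 = 5*5 + 11*1
print(slow_start_latency(15, 2, 1))  # S/R > RTT               -> 33 = 3*1 + 15*2
```

The outputs match 4 · RTT + 14 · S/R, 5 · RTT + 11 · S/R, and 3 · RTT + 15 · S/R for those sample values, so the sums themselves seem consistent with this model.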

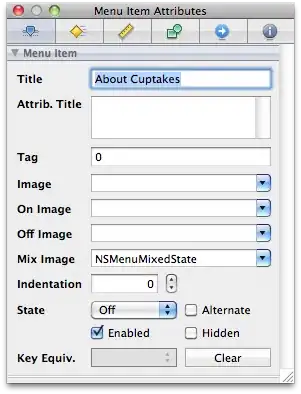

and here is the image that goes with the answer:

What kind of makes sense to me: each scenario fixes how the RTT compares with the time it takes to transmit a certain number of segments. In the first one, for example, the RTT is somewhere between S/R and 3S/R. From there I don't understand how slow start is operating. I thought it just increases the window size for every acknowledged packet. But in the solution to the first case, for example, only two packets appear to be sent and ACKed, and yet the window size jumps to 12S? What am I missing here?