I know that MySQL supports auto-increment values, but not dependent auto-increment values.

For example, if you have a table like this:

| id | element | innerId |
|----|---------|---------|
| 1  | a       | 1       |
| 2  | a       | 2       |
| 3  | b       | 1       |

and you insert another `b` element, you have to compute the innerId yourself (the expected value would be 2).
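To make the rule concrete, here is a plain-Python sketch of how the next innerId follows from the example rows: the largest existing innerId for that element plus one, or 1 for an element with no rows yet. The function name `next_inner_id` is hypothetical, and the sketch only restates the rule; it does nothing about concurrency.

```python
# Example rows from the table above: (id, element, innerId)
rows = [(1, "a", 1), (2, "a", 2), (3, "b", 1)]


def next_inner_id(rows, element):
    """Return MAX(innerId) + 1 for the given element, or 1 if it has no rows yet."""
    existing = [inner for _id, elem, inner in rows if elem == element]
    return max(existing, default=0) + 1


print(next_inner_id(rows, "b"))  # 2
print(next_inner_id(rows, "a"))  # 3
print(next_inner_id(rows, "c"))  # 1
```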

- Is there a database that supports something like this?

What would be the best way to achieve this behaviour? I do not know the number of elements, so I cannot create a dedicated table for each of them from which I could simply derive an id.

(The example is simplified.)

The goal is that every element "type" (the number of types is unknown, possibly infinite) has its own gap-less id.

If I use something like this:

```sql
INSERT INTO myTable t1 (id, element, innerId)
VALUES (null, 'b', (SELECT COUNT(*) FROM myTable t2 WHERE t2.element = "b") + 1)
```

http://sqlfiddle.com/#!2/2f4543/1

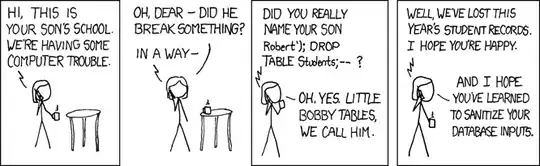
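As a single-connection sanity check, the same statement shape can be run against the example data. This sketch uses SQLite from Python's standard library as a stand-in for MySQL, and drops the `t1` alias on the target table; SQLite's locking is very different from InnoDB's, so it says nothing about the concurrency question that follows.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE myTable ("
    " id INTEGER PRIMARY KEY AUTOINCREMENT,"
    " element TEXT NOT NULL,"
    " innerId INTEGER NOT NULL)"
)
conn.executemany(
    "INSERT INTO myTable (id, element, innerId) VALUES (?, ?, ?)",
    [(1, "a", 1), (2, "a", 2), (3, "b", 1)],
)

# Same shape as the query above: the subselect counts the existing 'b' rows.
conn.execute(
    "INSERT INTO myTable (id, element, innerId) VALUES "
    "(NULL, 'b', (SELECT COUNT(*) FROM myTable t2 WHERE t2.element = 'b') + 1)"
)
conn.commit()

print(conn.execute("SELECT id, element, innerId FROM myTable ORDER BY id").fetchall())
# [(1, 'a', 1), (2, 'a', 2), (3, 'b', 1), (4, 'b', 2)]
```

Note that `COUNT(*) + 1` and `MAX(innerId) + 1` agree only while each element's innerIds are exactly 1..n; once a row is deleted, `COUNT(*) + 1` can produce a value that already exists.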

Will this return the expected result under all circumstances? It works, but what about concurrency? Are inserts with subselects still atomic, or might there be a scenario where two inserts try to insert the same id (especially if a transactional insert is pending)?

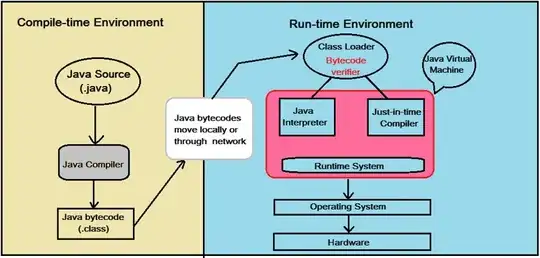

Would it be better to achieve this in the application language (e.g. Java)? Or is it easier to implement this logic as close to the database engine as possible?

Since I'm using an aggregation to compute the next innerId, I think SELECT ... FOR UPDATE cannot avoid the problem when other transactions have pending commits, right?

P.S.: I could of course just brute-force the insert: start at the current max value per element, put a unique key constraint on (element, innerId), and retry until there is no duplicate-key violation. But isn't there a nicer way?
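The brute-force idea can be sketched like this, again with SQLite from Python's standard library standing in for MySQL. The helper `insert_with_retry` and its `max_attempts` parameter are hypothetical: it reads MAX(innerId) + 1, tries the insert, and on a unique-key violation (another writer took that value) re-reads the max and tries again. The `UNIQUE (element, innerId)` constraint is what makes a duplicate fail instead of being stored silently.

```python
import sqlite3


def insert_with_retry(conn, element, max_attempts=10):
    """Hypothetical helper: insert MAX(innerId) + 1, retrying on duplicate-key errors."""
    for _ in range(max_attempts):
        (current,) = conn.execute(
            "SELECT COALESCE(MAX(innerId), 0) FROM myTable WHERE element = ?",
            (element,),
        ).fetchone()
        try:
            with conn:  # commits on success, rolls back if the INSERT raises
                conn.execute(
                    "INSERT INTO myTable (element, innerId) VALUES (?, ?)",
                    (element, current + 1),
                )
            return current + 1
        except sqlite3.IntegrityError:
            continue  # value already taken: re-read the max and try again
    raise RuntimeError(f"could not insert {element!r} after {max_attempts} attempts")


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE myTable ("
    " id INTEGER PRIMARY KEY,"
    " element TEXT NOT NULL,"
    " innerId INTEGER NOT NULL,"
    " UNIQUE (element, innerId))"
)
conn.executemany(
    "INSERT INTO myTable (element, innerId) VALUES (?, ?)",
    [("a", 1), ("a", 2), ("b", 1)],
)
conn.commit()

print(insert_with_retry(conn, "b"))  # 2
print(insert_with_retry(conn, "c"))  # 1
```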

According to "Make one ID with auto_increment depending on another ID - possible?", this would be possible with a composite primary key on (in my case) innerId and element. But according to "setting MySQL auto_increment to be dependent on two other primary keys", that works only for MyISAM (I use InnoDB).

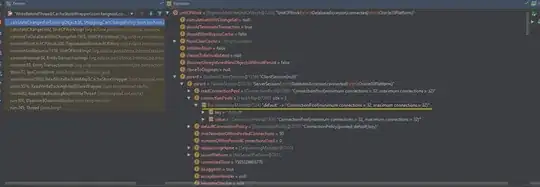

Now I'm even more confused. I tried inserting data from two different PHP scripts using the query above. Script one sleeps for 15 seconds so that I can call script two in the meantime (simulating the concurrent modification). The result was correct when using a single query.

(P.S.: I'm using the old mysql_* functions rather than mysqli only for quick debugging.)

Base Data:

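Script 1:

```php
mysql_query("START TRANSACTION");
// Insert with the next innerId computed in a subselect
mysql_query("INSERT INTO insertTest (id, element, innerId, fromPage) VALUES (null, 'a', (SELECT MAX(t2.innerID) FROM insertTest t2 WHERE element='a') + 1, 'page1')");
// Keep the transaction open so that script 2 can run in the meantime
sleep(15);
//mysql_query("ROLLBACK;");
mysql_query("COMMIT;");
```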

Script 2:

```php
// Transaction deliberately disabled: this insert runs in autocommit mode
//mysql_query("START TRANSACTION");
mysql_query("INSERT INTO insertTest (id, element, innerId, fromPage) VALUES (null, 'a', (SELECT MAX(t2.innerID) FROM insertTest t2 WHERE element='a') + 1, 'page2')");
//mysql_query("COMMIT;");
```

I would have expected the page2 insert to happen before the page1 insert, because it runs without a transaction. But in fact the page1 insert happened FIRST, and the second script was also delayed by about 15 seconds...

(Ignore the AC-Id; I played around a bit.)

When using ROLLBACK in the first script, the second script is still delayed for 15 seconds and then picks up the correct innerId:

So:

- Non-transactional inserts are blocked while a transaction is active.
- Inserts with subselects also seem to be blocked.
- So in the end it seems that an insert with a subselect is an atomic operation? Otherwise, why would the SELECT of the second page have been blocked?

Using the SELECT and the INSERT as separate, non-transactional statements like this (on page 2, simulating the concurrent modification):

```php
// Separate statements: first read the current max...
$nextId = mysql_query("SELECT MAX(t2.innerID) as a FROM insertTest t2 WHERE element='a'");
$nextId = mysql_fetch_array($nextId);
$nextId = $nextId["a"] + 1;
// ...then insert it in a second, independent statement
mysql_query("INSERT INTO insertTest (id, element, innerId, fromPage) VALUES (null, 'a', $nextId, 'page2')");
```

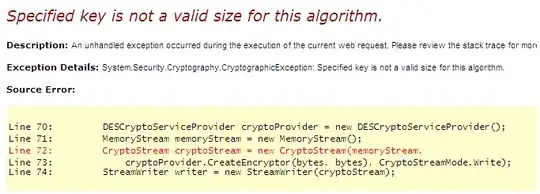

leads to the error I was trying to avoid:

So why does it work in the concurrent scenario when each modification is a single query? Are inserts with subselects atomic?
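The race in the two-statement variant can be reproduced deterministically with two connections that interleave by hand: both "pages" read the max before either one writes, so both compute the same innerId. This sketch uses SQLite (a temporary file database, autocommit mode via `isolation_level=None`) as a stand-in for the MySQL `insertTest` table, and the helper `next_id` is hypothetical. Because SQLite locks the whole database rather than using InnoDB's row and gap locks, it only illustrates the read-then-write race; it does not explain why InnoDB blocked the single-statement insert.

```python
import os
import sqlite3
import tempfile


def next_id(conn, element):
    """Step 1 of the two-statement approach: read MAX(innerId) + 1."""
    (value,) = conn.execute(
        "SELECT COALESCE(MAX(innerId), 0) + 1 FROM insertTest WHERE element = ?",
        (element,),
    ).fetchone()
    return value


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "race.db")
    # Autocommit: every statement is its own transaction, like the
    # non-transactional mysql_query() calls in the post.
    page1 = sqlite3.connect(path, isolation_level=None)
    page2 = sqlite3.connect(path, isolation_level=None)
    page1.execute(
        "CREATE TABLE insertTest (id INTEGER PRIMARY KEY, element TEXT,"
        " innerId INTEGER, fromPage TEXT)"
    )
    page1.execute(
        "INSERT INTO insertTest (element, innerId, fromPage) VALUES ('a', 1, 'seed')"
    )

    # Both pages read before either writes, so they see the same MAX.
    id1 = next_id(page1, "a")
    id2 = next_id(page2, "a")

    # Step 2: each page inserts the value it computed.
    sql = "INSERT INTO insertTest (element, innerId, fromPage) VALUES ('a', ?, ?)"
    page1.execute(sql, (id1, "page1"))
    page2.execute(sql, (id2, "page2"))

    rows = page1.execute(
        "SELECT innerId, fromPage FROM insertTest WHERE element = 'a' ORDER BY id"
    ).fetchall()
    page1.close()
    page2.close()

print(rows)  # [(1, 'seed'), (2, 'page1'), (2, 'page2')]
```

Without a unique key on (element, innerId) the duplicate is stored silently; with one, the second insert fails instead.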