First of all, I am a newbie to C and Objective-C.

I am trying to run an FFT on a buffer of audio and plot the resulting graph. I use an Audio Unit callback to get the audio buffer. The callback delivers 512 frames, but everything after the first 471 frames is 0. (I don't know whether this is normal. It used to deliver 471 frames full of numbers, but now it somehow delivers 512 frames with zeros after frame 471. Please let me know if this is normal.)

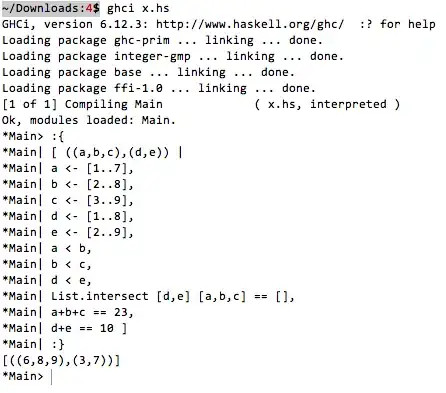

Anyway, I can get the buffer from the callback, apply the FFT, and draw it. This works perfectly, and the outcome is shown below. The graph is very smooth as long as I process the buffer from each callback on its own.

But in my case I need 3 seconds of audio before I apply the FFT and draw. So I tried concatenating the buffers from two callbacks and then applying the FFT and drawing the result, but the result is not what I expect. While the single-buffer graph is very smooth and precise during recording (only the magnitudes at 18 and 19 kHz change), when I concatenate the two buffers the simulator mainly displays two different views and swaps between them very quickly. They are displayed below. Of course, they basically show 18 and 19 kHz, but I need precise frequencies so that I can apply more algorithms in the app I am working on.

Here is my code in the callback:

// FFTInputBufferLen and FFTInputBufferFrameIndex are global

// tempFilteredBuffer is also allocated globally

// by the way, FFTInputBufferLen = 1024;

static OSStatus performRender (void *inRefCon,

AudioUnitRenderActionFlags *ioActionFlags,

const AudioTimeStamp *inTimeStamp,

UInt32 inBusNumber,

UInt32 inNumberFrames,

AudioBufferList *ioData)

{

UInt32 bus1 = 1;

CheckError(AudioUnitRender(effectState.rioUnit,

ioActionFlags,

inTimeStamp,

bus1,

inNumberFrames,

ioData), "Couldn't render from RemoteIO unit");

Float32 * renderBuff = ioData->mBuffers[0].mData;

ViewController *vc = (__bridge ViewController *) inRefCon;

// inNumberFrames is 512, as I described above

for (int i = 0; i < inNumberFrames ; i++)

{

// I defined InputBuffers[5] as a global,

// then allocated 5 Float32 buffers for it globally

InputBuffers[bufferCount][FFTInputBufferFrameIndex] = renderBuff[i];

FFTInputBufferFrameIndex ++;

if(FFTInputBufferFrameIndex == FFTInputBufferLen)

{

int bufCount = bufferCount;

dispatch_async( dispatch_get_main_queue(), ^{

tempFilteredBuffer = [vc FilterData_rawSamples:InputBuffers[bufCount] numSamples:FFTInputBufferLen];

[vc CalculateFFTwithPlotting_Data:tempFilteredBuffer NumberofSamples:FFTInputBufferLen ];

free(InputBuffers[bufCount]);

InputBuffers[bufCount] = (Float32*)malloc(sizeof(Float32) * FFTInputBufferLen);

});

FFTInputBufferFrameIndex = 0;

bufferCount ++;

if (bufferCount == 5)

{

bufferCount = 0;

}

}

}

return noErr;

}
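
To show more plainly what I mean by collecting buffers, here is a minimal sketch in plain C of the accumulation I am aiming for. All names in it (`SampleAccumulator`, `accumulator_append`, `valid_frame_count`) are hypothetical and not part of my app. It assumes that the number of valid samples in a callback may be smaller than the nominal frame count (as with my 471 versus 512 observation) and derives it from the buffer's byte size, the way `mDataByteSize` on an `AudioBuffer` could be used. I have not confirmed that this is the cause of my problem.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical accumulator: collects samples across callbacks until full. */
typedef struct {
    float  *data;      /* caller-owned storage holding `capacity` samples */
    size_t  capacity;  /* e.g. 3 s * 44100 Hz = 132300 samples */
    size_t  filled;    /* number of samples stored so far */
} SampleAccumulator;

void accumulator_init(SampleAccumulator *acc, float *storage, size_t capacity)
{
    assert(acc != NULL && storage != NULL && capacity > 0);
    acc->data = storage;
    acc->capacity = capacity;
    acc->filled = 0;
}

/* Copies as many of the `count` samples as still fit.
 * Returns how many were consumed; the caller keeps the remainder
 * for the next window. */
size_t accumulator_append(SampleAccumulator *acc, const float *samples, size_t count)
{
    size_t room = acc->capacity - acc->filled;
    size_t n = count < room ? count : room;
    memcpy(acc->data + acc->filled, samples, n * sizeof(float));
    acc->filled += n;
    return n;
}

int accumulator_is_full(const SampleAccumulator *acc)
{
    return acc->filled == acc->capacity;
}

void accumulator_reset(SampleAccumulator *acc)
{
    acc->filled = 0;
}

/* Number of valid frames given the byte size of a buffer
 * (assumption: mono, so one sample per frame). */
size_t valid_frame_count(size_t data_byte_size, size_t bytes_per_frame)
{
    return bytes_per_frame ? data_byte_size / bytes_per_frame : 0;
}
```

The idea is that the callback would append only `valid_frame_count(...)` samples, so that padding zeros never end up in the middle of the concatenated signal, and would hand the buffer to the FFT only once `accumulator_is_full` returns true.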

Here is my AudioUnit setup:

- (void)setupIOUnit

{

AudioComponentDescription desc;

desc.componentType = kAudioUnitType_Output;

desc.componentSubType = kAudioUnitSubType_RemoteIO;

desc.componentManufacturer = kAudioUnitManufacturer_Apple;

desc.componentFlags = 0;

desc.componentFlagsMask = 0;

AudioComponent comp = AudioComponentFindNext(NULL, &desc);

CheckError(AudioComponentInstanceNew(comp, &_rioUnit), "couldn't create a new instance of AURemoteIO");

UInt32 one = 1;

CheckError(AudioUnitSetProperty(_rioUnit, kAudioOutputUnitProperty_EnableIO, kAudioUnitScope_Input, 1, &one, sizeof(one)), "could not enable input on AURemoteIO");

// I removed this so that the recorded audio is not played back through the speakers. Am I right?

//CheckError(AudioUnitSetProperty(_rioUnit, kAudioOutputUnitProperty_EnableIO, kAudioUnitScope_Output, 0, &one, sizeof(one)), "could not enable output on AURemoteIO");

UInt32 maxFramesPerSlice = 4096;

CheckError(AudioUnitSetProperty(_rioUnit, kAudioUnitProperty_MaximumFramesPerSlice, kAudioUnitScope_Global, 0, &maxFramesPerSlice, sizeof(UInt32)), "couldn't set max frames per slice on AURemoteIO");

UInt32 propSize = sizeof(UInt32);

CheckError(AudioUnitGetProperty(_rioUnit, kAudioUnitProperty_MaximumFramesPerSlice, kAudioUnitScope_Global, 0, &maxFramesPerSlice, &propSize), "couldn't get max frames per slice on AURemoteIO");

AudioUnitElement bus1 = 1;

AudioStreamBasicDescription myASBD;

myASBD.mSampleRate = 44100;

myASBD.mChannelsPerFrame = 1;

myASBD.mFormatID = kAudioFormatLinearPCM;

myASBD.mBytesPerFrame = sizeof(Float32) * myASBD.mChannelsPerFrame ;

myASBD.mFramesPerPacket = 1;

myASBD.mBytesPerPacket = myASBD.mFramesPerPacket * myASBD.mBytesPerFrame;

myASBD.mBitsPerChannel = sizeof(Float32) * 8 ;

myASBD.mFormatFlags = 9 | 12 ;

// I also removed this so that the audio is not played back.

// CheckError(AudioUnitSetProperty (_rioUnit,

// kAudioUnitProperty_StreamFormat,

// kAudioUnitScope_Input,

// bus0,

// &myASBD,

// sizeof (myASBD)), "Couldn't set ASBD for RIO on input scope / bus 0");

CheckError(AudioUnitSetProperty (_rioUnit,

kAudioUnitProperty_StreamFormat,

kAudioUnitScope_Output,

bus1,

&myASBD,

sizeof (myASBD)), "Couldn't set ASBD for RIO on output scope / bus 1");

effectState.rioUnit = _rioUnit;

AURenderCallbackStruct renderCallback;

renderCallback.inputProc = performRender;

renderCallback.inputProcRefCon = (__bridge void *)(self);

CheckError(AudioUnitSetProperty(_rioUnit,

kAudioUnitProperty_SetRenderCallback,

kAudioUnitScope_Input,

0,

&renderCallback,

sizeof(renderCallback)), "couldn't set render callback on AURemoteIO");

CheckError(AudioUnitInitialize(_rioUnit), "couldn't initialize AURemoteIO instance");

}
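
One thing I am unsure about in this setup is `mFormatFlags = 9 | 12`. The sketch below decodes that value in plain C. The bit values are mirrored locally from what I understand `CoreAudioTypes.h` to define (the `FLAG_*` names and `flags_are_contradictory` are hypothetical), so please treat them as an assumption. If they are right, `9 | 12` equals 13, which sets both the float bit and the signed-integer bit at once.

```c
#include <stdint.h>

/* Local mirrors of the Core Audio format flag bits (assumed values). */
enum {
    FLAG_IS_FLOAT          = 1u << 0,  /* kAudioFormatFlagIsFloat */
    FLAG_IS_BIG_ENDIAN     = 1u << 1,  /* kAudioFormatFlagIsBigEndian */
    FLAG_IS_SIGNED_INTEGER = 1u << 2,  /* kAudioFormatFlagIsSignedInteger */
    FLAG_IS_PACKED         = 1u << 3   /* kAudioFormatFlagIsPacked */
};

/* Returns 1 when a flag word claims to be float and signed integer
 * at the same time, 0 otherwise. */
int flags_are_contradictory(uint32_t flags)
{
    return (flags & FLAG_IS_FLOAT) && (flags & FLAG_IS_SIGNED_INTEGER);
}
```

Under these assumptions, 9 alone (float plus packed) would describe the packed `Float32` samples that the rest of my ASBD implies.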

My questions are: why does this happen? Why are there two main different views in the output when I concatenate the two buffers? Is there another way to collect buffers and apply DSP? What am I doing wrong? If the way I concatenate is correct, is my logic incorrect? (I have checked it many times.)

What I am really asking is: how can I get 3 seconds of audio in perfect condition?
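
To put numbers on why I want the longer window, here is a small sketch in plain C of the arithmetic, using the 44100 Hz sample rate and the 1024-sample FFT length from my code. The function names are hypothetical helpers, not part of my app. With 1024 samples each FFT bin is about 43 Hz wide, while 3 seconds (132300 samples) would give bins of roughly 0.33 Hz.

```c
#include <stddef.h>

/* Width of one FFT bin in Hz for an fft_len-point FFT. */
double fft_bin_width_hz(double sample_rate_hz, size_t fft_len)
{
    return sample_rate_hz / (double)fft_len;
}

/* Index of the bin whose center is closest to freq_hz. */
size_t fft_bin_for_frequency(double freq_hz, double sample_rate_hz, size_t fft_len)
{
    return (size_t)(freq_hz / fft_bin_width_hz(sample_rate_hz, fft_len) + 0.5);
}

/* Number of samples needed to cover `seconds` of audio. */
size_t samples_for_duration(double seconds, double sample_rate_hz)
{
    return (size_t)(seconds * sample_rate_hz + 0.5);
}
```

For example, `fft_bin_for_frequency(18000.0, 44100.0, 1024)` and `fft_bin_for_frequency(19000.0, 44100.0, 1024)` land on bins 418 and 441, so the two tones are only 23 bins apart at the current length.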

I really need help. Best regards.