I think there are two main problems:

1. Segment the glasses frame.
2. Find the thickness of the segmented frame.

Here is a way to segment the glasses in your sample image. The method may work for other images too, but you will probably have to adjust the parameters (the Canny thresholds and the number of dilate/erode passes), or you may only be able to reuse the main ideas.

The main idea is:

First, find the biggest contour in the image, which should be the outer outline of the glasses. Second, find the two biggest contours inside that contour, which should be the two lenses within the frame. The frame is then what remains of the outer region after the lenses are removed.

I use this image as input (which should be your blurred but not dilated image):

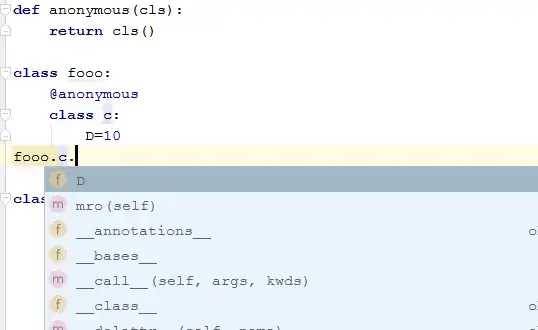

    #include <iostream>
    #include <vector>
    #include <opencv2/opencv.hpp>

    // Finds the `amount` biggest contours by area, biggest first (amount <= 0 means "all").
    // Probably there are faster ways, but it should work...
    // `contours` is passed by value on purpose: the local copy is modified below.
    std::vector<std::vector<cv::Point>> findBiggestContours(std::vector<std::vector<cv::Point>> contours, int amount)
    {
        std::vector<std::vector<cv::Point>> sortedContours;

        // clamp the requested amount to the number of available contours
        if(amount <= 0 || amount > static_cast<int>(contours.size()))
            amount = static_cast<int>(contours.size());

        for(int chosen = 0; chosen < amount; )
        {
            double biggestContourArea = 0;
            int biggestContourID = -1;

            for(unsigned int i=0; i<contours.size(); ++i)
            {
                double tmpArea = cv::contourArea(contours[i]);
                if(tmpArea > biggestContourArea)
                {
                    biggestContourArea = tmpArea;
                    biggestContourID = i;
                }
            }

            if(biggestContourID >= 0)
            {
                //std::cout << "found area: " << biggestContourArea << std::endl;
                // found biggest contour
                // add contour to sorted contours vector:
                sortedContours.push_back(contours[biggestContourID]);
                chosen++;

                // remove biggest contour from original vector:
                contours[biggestContourID] = contours.back();
                contours.pop_back();
            }
            else
            {
                // only reached if all remaining contours have an area of 0
                return sortedContours;
            }
        }

        return sortedContours;
    }

    int main()
    {
        cv::Mat input = cv::imread("../Data/glass2.png", CV_LOAD_IMAGE_GRAYSCALE);
        cv::Mat inputColors = cv::imread("../Data/glass2.png"); // used for displaying later

        // stop early if the image could not be loaded
        if(input.empty() || inputColors.empty())
        {
            std::cout << "Error: could not load input image" << std::endl;
            return 1;
        }

        cv::imshow("input", input);

        // edge detection
        int lowThreshold = 100;
        int ratio = 3;
        int kernel_size = 3;

        cv::Mat canny;
        cv::Canny(input, canny, lowThreshold, lowThreshold*ratio, kernel_size);
        cv::imshow("canny", canny);

        // close gaps with "close operator" (3x dilate followed by 3x erode)
        cv::Mat mask = canny.clone();
        cv::dilate(mask,mask,cv::Mat());
        cv::dilate(mask,mask,cv::Mat());
        cv::dilate(mask,mask,cv::Mat());
        cv::erode(mask,mask,cv::Mat());
        cv::erode(mask,mask,cv::Mat());
        cv::erode(mask,mask,cv::Mat());

        cv::imshow("closed mask",mask);

        // extract outermost contour
        std::vector<cv::Vec4i> hierarchy;
        std::vector<std::vector<cv::Point>> contours;
        //cv::findContours(mask, contours, hierarchy, CV_RETR_TREE, CV_CHAIN_APPROX_SIMPLE);
        cv::findContours(mask, contours, hierarchy, CV_RETR_EXTERNAL, CV_CHAIN_APPROX_SIMPLE);

        // find biggest contour which should be the outer contour of the frame
        std::vector<std::vector<cv::Point>> biggestContour;
        biggestContour = findBiggestContours(contours,1); // find the one biggest contour
        if(biggestContour.size() < 1)
        {
            std::cout << "Error: no outer frame of glasses found" << std::endl;
            return 1;
        }

        // draw contour (filled) on an empty image
        cv::Mat outerFrame = cv::Mat::zeros(mask.rows, mask.cols, CV_8UC1);
        cv::drawContours(outerFrame,biggestContour,0,cv::Scalar(255),-1);
        cv::imshow("outer frame border", outerFrame);

        // now find the glasses which should be the outer contours within the frame. therefore erode the outer border ;)
        cv::Mat glassesMask = outerFrame.clone();
        cv::erode(glassesMask,glassesMask, cv::Mat());
        cv::imshow("eroded outer",glassesMask);

        // after erosion if we dilate, it's an Open-Operator which can be used to clean the image.
        cv::Mat cleanedOuter;
        cv::dilate(glassesMask,cleanedOuter, cv::Mat());
        cv::imshow("cleaned outer",cleanedOuter);

        // use the eroded outer frame mask as a mask for copying canny edges.
        // The result should be the inner edges inside the frame only
        cv::Mat glassesInner;
        canny.copyTo(glassesInner, glassesMask);

        // there is a small gap in the contour which unfortunately can't be closed with a closing operator...
        cv::dilate(glassesInner, glassesInner, cv::Mat());
        //cv::erode(glassesInner, glassesInner, cv::Mat());
        // this part was cheated... in fact we would like to erode directly after dilation
        // to not modify the thickness but just close small gaps.
        cv::imshow("innerCanny", glassesInner);

        // extract contours from within the frame
        std::vector<cv::Vec4i> hierarchyInner;
        std::vector<std::vector<cv::Point>> contoursInner;
        //cv::findContours(glassesInner, contoursInner, hierarchyInner, CV_RETR_TREE, CV_CHAIN_APPROX_SIMPLE);
        cv::findContours(glassesInner, contoursInner, hierarchyInner, CV_RETR_EXTERNAL, CV_CHAIN_APPROX_SIMPLE);

        // find the two biggest contours which should be the glasses within the frame
        std::vector<std::vector<cv::Point>> biggestInnerContours;
        biggestInnerContours = findBiggestContours(contoursInner,2); // find the two biggest contours
        if(biggestInnerContours.size() < 1)
        {
            std::cout << "Error: no inner frames of glasses found" << std::endl;
            return 1;
        }

        // draw the 2 biggest contours (filled) which should be the inner glasses
        cv::Mat innerGlasses = cv::Mat::zeros(mask.rows, mask.cols, CV_8UC1);
        for(unsigned int i=0; i<biggestInnerContours.size(); ++i)
            cv::drawContours(innerGlasses,biggestInnerContours,i,cv::Scalar(255),-1);

        cv::imshow("inner frame border", innerGlasses);

        // since we dilated earlier and didn't erode right afterwards, we have to erode here... this is a bit of cheating :-(
        cv::erode(innerGlasses,innerGlasses,cv::Mat() );

        // remove the inner glasses from the frame mask
        cv::Mat fullGlassesMask = cleanedOuter - innerGlasses;
        cv::imshow("complete glasses mask", fullGlassesMask);

        // color code the result to get an impression of segmentation quality
        cv::Mat outputColors1 = inputColors.clone();
        cv::Mat outputColors2 = inputColors.clone();
        for(int y=0; y<fullGlassesMask.rows; ++y)
            for(int x=0; x<fullGlassesMask.cols; ++x)
            {
                if(!fullGlassesMask.at<unsigned char>(y,x))
                    outputColors1.at<cv::Vec3b>(y,x)[1] = 255; // green channel maxed outside the frame
                else
                    outputColors2.at<cv::Vec3b>(y,x)[1] = 255; // green channel maxed on the frame
            }

        cv::imshow("output", outputColors1);

        /*
        cv::imwrite("../Data/Output/face_colored.png", outputColors1);
        cv::imwrite("../Data/Output/glasses_colored.png", outputColors2);
        cv::imwrite("../Data/Output/glasses_fullMask.png", fullGlassesMask);
        */

        cv::waitKey(-1);
        return 0;
    }

I get this result for segmentation:

The overlay on the original image gives an impression of the quality:

And the inverse:

There are some tricky parts in the code and it's not tidied up yet. I hope it's understandable.
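
The comment on `findBiggestContours` says there are probably faster ways to pick the biggest contours. One possible tidy-up is sketched below. It computes each area only once and lets `std::partial_sort` order just the part that is needed. The name `takeLargest` and its template parameters are made up for this sketch, and it is written against plain standard-library types so it compiles without OpenCV. Unlike the original function, it does not skip items whose area is 0.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical helper (not part of the code above): returns the `amount`
// largest items, largest first, where "size" is whatever `areaOf` returns.
// amount <= 0 means "all items", as in findBiggestContours.
template <typename Item, typename AreaFn>
std::vector<Item> takeLargest(const std::vector<Item>& items, int amount, AreaFn areaOf)
{
    const std::size_t n = items.size();
    const std::size_t wanted =
        (amount <= 0 || static_cast<std::size_t>(amount) > n) ? n
                                                              : static_cast<std::size_t>(amount);
    assert(wanted <= n);

    // Compute every area exactly once and remember which item it belongs to.
    std::vector<std::pair<double, std::size_t>> areas;
    areas.reserve(n);
    for (std::size_t i = 0; i < n; ++i)
        areas.emplace_back(static_cast<double>(areaOf(items[i])), i);

    // Move the `wanted` largest areas to the front, in descending order.
    std::partial_sort(areas.begin(),
                      areas.begin() + static_cast<std::ptrdiff_t>(wanted),
                      areas.end(),
                      [](const std::pair<double, std::size_t>& a,
                         const std::pair<double, std::size_t>& b) { return a.first > b.first; });

    // Copy the selected items out in that order.
    std::vector<Item> result;
    result.reserve(wanted);
    for (std::size_t k = 0; k < wanted; ++k)
        result.push_back(items[areas[k].second]);
    return result;
}
```

With OpenCV contours, the call would look something like `takeLargest(contoursInner, 2, [](const std::vector<cv::Point>& c){ return cv::contourArea(c); })`.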

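The next step would be to compute the thickness of the segmented frame. My suggestion is to compute the distance transform of the mask, so that every frame pixel holds its distance to the nearest non-frame pixel. OpenCV's `cv::distanceTransform` measures the distance from each non-zero pixel to the nearest zero pixel, so invert the mask first if the frame is the zero region in your mask. From the result, run a ridge detection or skeletonize the mask to find the ridge along the middle of the frame. After that, use the median of the distance values on the ridge; it is roughly half the frame thickness.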
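
To make the thickness idea more concrete, here is a minimal, dependency-free sketch. It is not tested on the sample image and rests on several assumptions. The mask is a small rectangular grid of 0/1 values and contains background pixels. The distance transform is a brute-force stand-in for what `cv::distanceTransform` would do on a real image. The "ridge" is approximated by a simple local-maximum test over the 8 neighbours, which is cruder than real skeletonization. The names `Mask` and `medianRidgeDistance` are made up for this sketch.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <utility>
#include <vector>

// Binary mask stored row by row: 1 = frame pixel, 0 = background (assumed layout).
using Mask = std::vector<std::vector<int>>;

// Hypothetical helper: median distance-transform value over the ridge pixels.
// Returns 0.0 if there is no background or no frame pixel at all.
double medianRidgeDistance(const Mask& mask)
{
    const int rows = static_cast<int>(mask.size());
    const int cols = rows > 0 ? static_cast<int>(mask[0].size()) : 0;
    for (const auto& row : mask)
        assert(static_cast<int>(row.size()) == cols);  // mask must be rectangular

    // Collect the background pixels once.
    std::vector<std::pair<int, int>> background;
    for (int r = 0; r < rows; ++r)
        for (int c = 0; c < cols; ++c)
            if (mask[r][c] == 0) background.emplace_back(r, c);
    if (background.empty()) return 0.0;

    // Brute-force Euclidean distance transform (quadratic, fine for a sketch):
    // distance from every frame pixel to the nearest background pixel.
    std::vector<std::vector<double>> dist(rows, std::vector<double>(cols, 0.0));
    for (int r = 0; r < rows; ++r)
        for (int c = 0; c < cols; ++c) {
            if (mask[r][c] == 0) continue;
            long best = std::numeric_limits<long>::max();
            for (const auto& bg : background) {
                const long dr = r - bg.first;
                const long dc = c - bg.second;
                best = std::min(best, dr * dr + dc * dc);
            }
            dist[r][c] = std::sqrt(static_cast<double>(best));
        }

    // Ridge approximation: frame pixels whose distance is not exceeded
    // by any of their 8 neighbours inside the image.
    std::vector<double> ridge;
    for (int r = 0; r < rows; ++r)
        for (int c = 0; c < cols; ++c) {
            if (mask[r][c] == 0) continue;
            bool isRidge = true;
            for (int dr = -1; dr <= 1 && isRidge; ++dr)
                for (int dc = -1; dc <= 1; ++dc) {
                    const int nr = r + dr;
                    const int nc = c + dc;
                    if (nr < 0 || nr >= rows || nc < 0 || nc >= cols) continue;
                    if (dist[nr][nc] > dist[r][c]) { isRidge = false; break; }
                }
            if (isRidge) ridge.push_back(dist[r][c]);
        }
    if (ridge.empty()) return 0.0;

    // Median via nth_element (upper median for an even number of values).
    const std::size_t mid = ridge.size() / 2;
    std::nth_element(ridge.begin(), ridge.begin() + static_cast<std::ptrdiff_t>(mid), ridge.end());
    return ridge[mid];
}
```

The ridge value is the distance from the middle of the frame to its border, so the thickness is about twice the returned median, give or take a pixel. For a real image, `cv::distanceTransform` on `fullGlassesMask` would replace the brute-force loop.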

Anyway, I hope this post helps a little, although it's not a complete solution yet.