I'm running k-means on a big data set. I set it up like this:

```python
from sklearn.cluster import KMeans

km = KMeans(n_clusters=500, max_iter=1, n_init=1,
            init='random', precompute_distances=0, n_jobs=-2)

# The following line computes the fit on a matrix "mat"
km.fit(mat)
```

My machine has 8 cores. The documentation says "for n_jobs = -2, all CPUs but one are used." I can see that there are several extra Python processes running while `km.fit` is executing, but only one CPU gets used.
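If only one core is busy even though several worker processes exist, one thing worth ruling out (an assumption to test, not a diagnosis) is that the parent process is restricted to a single CPU, because processes it forks inherit that restriction. A minimal standard-library sketch; `allowed_cpus` is a hypothetical helper, and `os.sched_getaffinity` only exists on some platforms (such as Linux), so the code falls back to `os.cpu_count()` elsewhere.

```python
import os


def allowed_cpus():
    """Return (CPUs the OS reports, CPUs this process may run on)."""
    total = os.cpu_count() or 1
    if hasattr(os, "sched_getaffinity"):
        # Linux: the set of CPU ids the scheduler lets this process use.
        # Children created by fork inherit this affinity mask.
        usable = len(os.sched_getaffinity(0))
    else:
        # No affinity API on this platform; assume no restriction.
        usable = total
    return total, usable


total, usable = allowed_cpus()
print(f"{usable} of {total} CPUs available to this process")
```

Calling it once at startup and again just before `km.fit(mat)` would show whether anything in between changes the mask.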

Does this sound like a GIL issue? If so, is there any way to get all the CPUs working? (It seems like there must be; otherwise, what is the point of the `n_jobs` argument?)
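On the GIL question: the GIL serializes threads within one interpreter, but each of the extra Python processes is a separate interpreter with its own GIL, so process-based workers can in principle occupy different cores. The sketch below uses plain `concurrent.futures`, not scikit-learn's internal machinery, and `busy_sum` and `run_in_processes` are hypothetical names. It hands independent CPU-bound tasks to a process pool and records which worker process ran each one.

```python
import os
from concurrent.futures import ProcessPoolExecutor


def busy_sum(n):
    """CPU-bound toy task: sum of squares below n, plus the worker's PID."""
    total = 0
    for i in range(n):
        total += i * i
    return total, os.getpid()


def run_in_processes(sizes, workers):
    # Each task runs in a worker process with its own interpreter and GIL,
    # so the OS is free to schedule the workers on different cores
    # (subject to the process's CPU affinity).
    with ProcessPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(busy_sum, sizes))


if __name__ == "__main__":
    for total, pid in run_in_processes([1_000_000] * 4, workers=2):
        print(f"worker {pid} computed {total}")
```

A pool can only keep several cores busy when there are several independent tasks to hand out; with a single task, the remaining workers simply sit idle.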

I'm guessing I'm missing something basic, and someone can either confirm my fear or get me back on track. If it turns out to be more involved, I'll set up a working example.

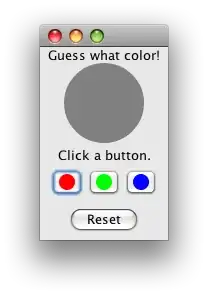

Update 1. For simplicity, I switched n_jobs to be positive 2. Here is what's going on with my system during execution:

I'm actually not the only user on the machine, but

```sh
free | grep Mem | awk '{print $3/$2 * 100.0}'
```

indicates that 88% of RAM is free (which confuses me, since RAM usage looks like at least 27% in the screenshot above).
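For reference, the awk expression divides the third field of the `Mem:` line by the second. In `free`'s output those fields are the `used` and `total` columns, so the printed number is the percentage of RAM counted as used, and 100 minus it is the share not counted as used. The Python sketch below mirrors that pipeline on captured `free` text; `used_percent` is a hypothetical helper and the sample numbers are invented for illustration.

```python
def used_percent(free_output):
    """Mimic `free | grep Mem | awk '{print $3/$2 * 100.0}'`."""
    for line in free_output.splitlines():
        if "Mem" in line:  # first line that `grep Mem` would select
            fields = line.split()  # awk's default whitespace splitting
            total, used = float(fields[1]), float(fields[2])  # awk's $2, $3
            return used / total * 100.0
    raise ValueError("no Mem line found")


# Hypothetical `free` output; the numbers are made up.
SAMPLE = """\
              total        used        free      shared  buff/cache   available
Mem:        1000000      120000      700000        1000      180000      850000
Swap:       2000000           0     2000000
"""

print(used_percent(SAMPLE))  # percentage of RAM reported as used
```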

Update 2. I upgraded scikit-learn to 0.15.2, and nothing changed in the `top` output shown above. Trying different values of `n_jobs` doesn't help either.