I'm trying to capture the contents of the display and convert the pixels from the display's color space to sRGB. I'm using vImage together with the low-level ColorSync transform APIs, but the output shows a weird doubling of pixel blocks. My ColorSyncTransformRef appears to be correct, because ColorSyncTransformConvert() works with it, but the Accelerate framework path through vImage does not. Has anyone seen this before, and does anyone know how to fix it? Thanks!

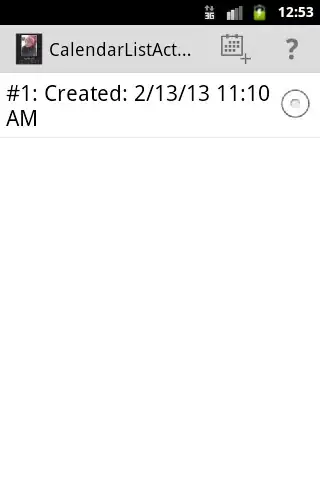

Here is what the output looks like, you can see the weird doubling:

Here is the code I'm using:

    CGImageRef screenshot = CGDisplayCreateImage(CGMainDisplayID());
    CFDataRef rawData = CGDataProviderCopyData(CGImageGetDataProvider(screenshot));
    uint8_t *basePtr = (uint8_t *)CFDataGetBytePtr(rawData);

    const void *keys[] = {kColorSyncProfile, kColorSyncRenderingIntent, kColorSyncTransformTag};

    ColorSyncProfileRef srcProfile = ColorSyncProfileCreateWithDisplayID(CGMainDisplayID());
    ColorSyncProfileRef destProfile = ColorSyncProfileCreateWithName(kColorSyncSRGBProfile);

    const void *srcVals[] = {srcProfile, kColorSyncRenderingIntentPerceptual, kColorSyncTransformDeviceToPCS};
    const void *dstVals[] = {destProfile, kColorSyncRenderingIntentPerceptual, kColorSyncTransformPCSToDevice};

    CFDictionaryRef srcDict = CFDictionaryCreate(
        NULL,
        (const void **)keys,
        (const void **)srcVals,
        3,
        &kCFTypeDictionaryKeyCallBacks,
        &kCFTypeDictionaryValueCallBacks);

    CFDictionaryRef dstDict = CFDictionaryCreate(
        NULL,
        (const void **)keys,
        (const void **)dstVals,
        3,
        &kCFTypeDictionaryKeyCallBacks,
        &kCFTypeDictionaryValueCallBacks);

    const void* arrayVals[] = {srcDict, dstDict, NULL};
    CFArrayRef profileSequence = CFArrayCreate(NULL, (const void **)arrayVals, 2, &kCFTypeArrayCallBacks);

    CFTypeRef codeFragment = NULL;

    /* transform to be used for converting color */
    ColorSyncTransformRef transform = ColorSyncTransformCreate(profileSequence, NULL);

    /* get the code fragment specifying the full conversion */
    codeFragment = ColorSyncTransformCopyProperty(transform, kColorSyncTransformFullConversionData, NULL);

    if (transform) CFRelease(transform);
    if (codeFragment) CFShow(codeFragment);

    vImage_Error err;

    vImage_CGImageFormat inFormat = {
        .bitsPerComponent = 8,
        .bitsPerPixel = 32,
        .colorSpace = CGColorSpaceCreateWithPlatformColorSpace(srcProfile),
        .bitmapInfo = kCGImageAlphaNoneSkipFirst | kCGBitmapByteOrder32Little,
    }; // .version, .renderingIntent and .decode all initialized to 0 per C rules

    vImage_CGImageFormat outFormat = {
        .bitsPerComponent = 8,
        .bitsPerPixel = 32,
        .colorSpace = CGColorSpaceCreateWithPlatformColorSpace(destProfile),
        .bitmapInfo = kCGImageAlphaNoneSkipFirst | kCGBitmapByteOrder32Little,
    }; // .version, .renderingIntent and .decode all initialized to 0 per C rules

    /* create a converter to do the image format conversion. */
    vImageConverterRef converter = vImageConverter_CreateWithColorSyncCodeFragment(codeFragment, &inFormat, &outFormat, NULL, kvImagePrintDiagnosticsToConsole, &err);
    NSAssert(err == kvImageNoError, @"Unable to setup the colorsync transform!");

    /* Check to see if the converter will work in place. */
    vImage_Error outOfPlace = vImageConverter_MustOperateOutOfPlace(converter, NULL, NULL, kvImageNoFlags);
    NSAssert(!outOfPlace, @"We expect the image convert to work in place");

    if (srcDict) CFRelease(srcDict);
    if (dstDict) CFRelease(dstDict);
    if (profileSequence) CFRelease(profileSequence);

    // ColorSync converter time!
    size_t width = CGImageGetWidth(screenshot);
    size_t height = CGImageGetHeight(screenshot);

    vImage_Buffer buf;
    err = vImageBuffer_Init(&buf, height, width, 32, kvImageNoFlags);
    NSAssert(err == kvImageNoError, @"Failed to init buffer");

    memcpy(buf.data, basePtr, width * height * 4);

    vImage_Buffer vimg_src = { buf.data, height, width, width * 4 };
    vImage_Buffer vimg_dest = { buf.data, height, width, width * 4 };

    vImage_Error colorSyncErr = vImageConvert_AnyToAny(converter, &vimg_src, &vimg_dest, NULL, kvImageNoFlags);
    NSAssert(colorSyncErr == kvImageNoError, @"ColorSync conversion failed");

    NSData *pngData = AOPngDataForBuffer(buf.data, (int)width, (int)height);
    [pngData writeToFile:@"/tmp/out.png" atomically:YES];

    self.imageView.image = [[NSImage alloc] initWithData:pngData];

    free(buf.data);
    CFRelease(rawData);
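
One thing that may be worth ruling out, separate from the converter setup: the code above assumes every row is exactly `width * 4` bytes, both in the data copied out of the `CGImage` and in the buffer allocated by `vImageBuffer_Init`. Either side can carry per-row padding (`CGImageGetBytesPerRow(screenshot)` and `buf.rowBytes` report the real strides), and a stride mismatch typically shows up as skewed or displaced rows. Whether that explains the doubling seen here is unconfirmed. The sketch below is plain C with no Apple frameworks; `copy_rows_with_stride` is a hypothetical helper name, not part of vImage or Core Graphics, and it only illustrates a row-by-row copy that honors both strides.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/*
 * Hypothetical helper (not a vImage or Core Graphics API).
 * Copies `height` rows of `row_length` meaningful bytes each from `src`
 * to `dst`. Each buffer may have its own row stride, i.e. padding bytes
 * after the pixel data of every row.
 * Returns false if either stride is too small to hold one row.
 */
bool copy_rows_with_stride(uint8_t *dst, size_t dst_row_bytes,
                           const uint8_t *src, size_t src_row_bytes,
                           size_t row_length, size_t height)
{
    assert(dst != NULL && src != NULL);

    if (dst_row_bytes < row_length || src_row_bytes < row_length) {
        return false;
    }

    for (size_t y = 0; y < height; y++) {
        /* Advance each pointer by its own stride, never by row_length. */
        memcpy(dst + y * dst_row_bytes, src + y * src_row_bytes, row_length);
    }
    return true;
}
```

Applied to the code above, the call would look roughly like `copy_rows_with_stride(buf.data, buf.rowBytes, basePtr, CGImageGetBytesPerRow(screenshot), width * 4, height)` in place of the single `memcpy`, with `buf.rowBytes` also used instead of `width * 4` when filling in `vimg_src` and `vimg_dest`.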