Recently I started thinking about implementing the Levenberg-Marquardt algorithm for training an artificial neural network (ANN). The key to the implementation is computing a Jacobian matrix. I spent a couple of hours studying the topic, but I can't figure out exactly how to compute it.

Say I have a simple feed-forward network with 3 inputs, 4 neurons in the hidden layer, and 2 outputs. The layers are fully connected. I also have a training set of 5 rows.

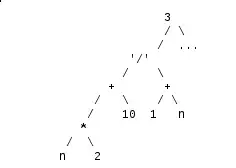
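To make the setup concrete, here is a minimal sketch of the network I have in mind. The tanh hidden activation, the linear output layer, the bias terms, the flat parameter layout, and the random data are all my own assumptions for illustration; the helper names (`unpack`, `forward`, `errors`) are made up as well.

```python
import numpy as np

# Sizes from the description: 3 inputs, 4 hidden neurons, 2 outputs, 5 training rows.
N_IN, N_HID, N_OUT, N_ROWS = 3, 4, 2, 5

rng = np.random.default_rng(0)
X = rng.normal(size=(N_ROWS, N_IN))    # placeholder inputs (made up)
T = rng.normal(size=(N_ROWS, N_OUT))   # placeholder targets (made up)

# Total number of adjustable parameters, assuming every neuron has a bias.
N_PARAMS = N_IN * N_HID + N_HID + N_HID * N_OUT + N_OUT


def unpack(w):
    """Split a flat parameter vector into layer weights and biases."""
    i = 0
    W1 = w[i:i + N_IN * N_HID].reshape(N_IN, N_HID)
    i += N_IN * N_HID
    b1 = w[i:i + N_HID]
    i += N_HID
    W2 = w[i:i + N_HID * N_OUT].reshape(N_HID, N_OUT)
    i += N_HID * N_OUT
    b2 = w[i:i + N_OUT]
    return W1, b1, W2, b2


def forward(w, X):
    """Network outputs for every training row, shape (rows, outputs)."""
    W1, b1, W2, b2 = unpack(w)
    hidden = np.tanh(X @ W1 + b1)   # assumed tanh hidden activation
    return hidden @ W2 + b2         # assumed linear output layer


def errors(w):
    """One error per (training row, output) pair, flattened into a vector."""
    return (forward(w, X) - T).ravel()


w0 = rng.normal(size=N_PARAMS)      # arbitrary starting parameters
print(N_PARAMS, errors(w0).shape)
```

Under these assumptions the network has 26 adjustable parameters (20 if the biases are dropped), and the training set produces 10 individual errors: 5 rows times 2 outputs.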

- What exactly should the size of the Jacobian matrix be?

- What exactly should I put in place of the derivatives? (Example formulas for the top-left and bottom-right corners, along with some explanation, would be perfect.)

The general definition of the Jacobian really doesn't help here: what are F and x in terms of a neural network?