I'm using a Windows Azure Virtual Machine running Windows Server 2012 Datacenter on a D2 instance (one of the new SSD instances) to unzip a 1.8 GB zip file that contains a single XML file of 51 GB when unzipped. Needless to say, a fast disk can speed this process up, which is why I'm testing a D2 instance.

However, the disk performance I'm getting is not impressive and doesn't live up to what I'd expect from an SSD: I'm only getting around 20-30 MB/s write speed on average.

The program I'm using to unzip the file is a custom .NET console app developed for this sole purpose. The source code is as follows:

```csharp
using System;
using System.Diagnostics;
using System.IO;
using System.Linq;
using ICSharpCode.SharpZipLib.Zip; // SharpZipLib: ZipFile, ZipEntry, UseZip64

class Program
{
    static void Main(string[] args)
    {
        if (args.Length < 1)
        {
            Console.WriteLine("Missing file parameter.");
            return;
        }

        string zipFilePath = args.First();
        if (!File.Exists(zipFilePath))
        {
            Console.WriteLine("File does not exist.");
            return;
        }

        // Extract next to the zip file, on the same disk.
        string targetPath = Path.GetDirectoryName(zipFilePath);
        var start = DateTime.Now;
        Console.WriteLine("Starting extraction (" + start.ToLongTimeString() + ")");

        var zipFile = new ZipFile(zipFilePath);
        zipFile.UseZip64 = UseZip64.On;

        foreach (ZipEntry zipEntry in zipFile)
        {
            byte[] buffer = new byte[4096]; // 4K is optimum
            Stream zipStream = zipFile.GetInputStream(zipEntry);
            string entryFileName = zipEntry.Name;
            Console.WriteLine("Extracting " + entryFileName + " ...");

            string fullZipToPath = Path.Combine(targetPath, entryFileName);
            string directoryName = Path.GetDirectoryName(fullZipToPath);
            if (directoryName.Length > 0)
            {
                Directory.CreateDirectory(directoryName);
            }

            // Unzip file in buffered chunks. This is just as fast as unpacking to a buffer the full size
            // of the file, but does not waste memory.
            // The "using" will close the stream even if an exception occurs.
            long dataWritten = 0;
            long dataWrittenSinceLastOutput = 0;
            const long dataOutputThreshold = 100 * 1024 * 1024; // 100 MB
            var timer = Stopwatch.StartNew();

            using (FileStream streamWriter = File.Create(fullZipToPath))
            {
                bool moreDataAvailable = true;
                while (moreDataAvailable)
                {
                    int count = zipStream.Read(buffer, 0, buffer.Length);
                    if (count > 0)
                    {
                        streamWriter.Write(buffer, 0, count);
                        dataWritten += count;
                        dataWrittenSinceLastOutput += count;

                        // Every ~100 MB, print the throughput of that interval and restart the timer.
                        if (dataWrittenSinceLastOutput > dataOutputThreshold)
                        {
                            timer.Stop();
                            double megabytesPerSecond = (dataWrittenSinceLastOutput / timer.Elapsed.TotalSeconds) / 1024 / 1024;
                            Console.WriteLine(dataWritten.ToString("#,0") + " bytes written (" + megabytesPerSecond.ToString("#,0.##") + " MB/s)");
                            dataWrittenSinceLastOutput = 0;
                            timer.Restart();
                        }
                    }
                    else
                    {
                        streamWriter.Flush();
                        moreDataAvailable = false;
                    }
                }

                Console.WriteLine(dataWritten.ToString("#,0") + " bytes written");
            }
        }

        zipFile.IsStreamOwner = true; // Makes close also shut the underlying stream
        zipFile.Close(); // Ensure we release resources
        Console.WriteLine("Done. (Time taken: " + (DateTime.Now - start).ToString() + ")");
        Console.ReadKey();
    }
}
```
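To check whether the 4 KB buffer plays any part, one option is to pull the copy loop into a helper that takes the buffer size as a parameter, so the same run can be repeated with 4 KB, 64 KB and 1 MB buffers. This is a minimal sketch, not the code above: `ThroughputCopy` and `CopyWithThroughput` are hypothetical names (not part of SharpZipLib or .NET), and it reports progress through a callback instead of writing to the console directly.

```csharp
using System;
using System.Diagnostics;
using System.IO;

static class ThroughputCopy
{
    // Hypothetical helper: copies source to destination using a bufferSize-byte buffer.
    // Whenever at least reportEveryBytes have been written since the last report,
    // report(totalWritten, megabytesPerSecond) is called with the rate of that interval,
    // where 1 MB = 1024 * 1024 bytes, as in the original progress output.
    public static long CopyWithThroughput(Stream source, Stream destination, int bufferSize,
        long reportEveryBytes, Action<long, double> report)
    {
        var buffer = new byte[bufferSize];
        long total = 0;
        long sinceLastReport = 0;
        var timer = Stopwatch.StartNew();

        int count;
        while ((count = source.Read(buffer, 0, buffer.Length)) > 0)
        {
            destination.Write(buffer, 0, count);
            total += count;
            sinceLastReport += count;

            if (sinceLastReport >= reportEveryBytes)
            {
                // Guard against a zero elapsed time on very fast (e.g. in-memory) copies.
                double seconds = Math.Max(timer.Elapsed.TotalSeconds, 1e-9);
                report(total, sinceLastReport / seconds / (1024.0 * 1024.0));
                sinceLastReport = 0;
                timer.Restart();
            }
        }

        destination.Flush();
        return total;
    }
}
```

In the extractor, the inner `while` loop could then become a single call such as `ThroughputCopy.CopyWithThroughput(zipStream, streamWriter, 1 << 20, dataOutputThreshold, (written, mbps) => Console.WriteLine(written.ToString("#,0") + " bytes written (" + mbps.ToString("#,0.##") + " MB/s)"))`. If progress output is not needed, the built-in `Stream.CopyTo(destination, bufferSize)` does the same copy. Note that either way the reported rate is how fast `Write` calls return, which with buffered I/O may reflect the Windows file cache rather than the disk itself.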

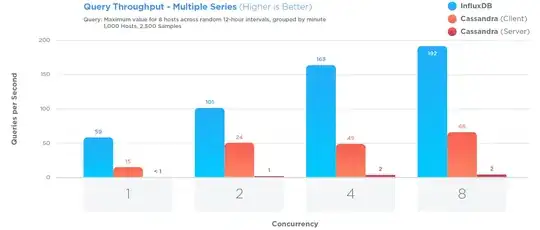

When I run this application locally on my own machine with an SSD, I get a steady 180-200 MB/s throughout the entire unzipping process. On the Azure VM, however, I get good performance (100-150 MB/s) for roughly the first 10 seconds; then it drops to around 20 MB/s and stays there, periodically dipping further to 8-9 MB/s. It never recovers. The whole unzipping process takes around 42 minutes on the Azure VM, while my local machine does it in about 10 minutes.

What's going on here? Why is the disk performance so bad? Is it my application that's doing something wrong?

Both locally and on the Azure VM, the zip file is placed on the SSD and extracted to the same SSD. (On the Azure VM, I'm using the Temporary Storage drive, since that is the SSD.)

Here is a screenshot from the Azure VM extracting the file:

Notice how the performance is great at the start, then suddenly declines and doesn't recover. My guess is that some caching is going on, and the performance drops once the cache is full.

Here is a screenshot from my local machine extracting the file:

The performance varies a bit but remains above 160 MB/s.

I'm using the same binary on both machines, compiled for x64 (not AnyCPU). The SSD in my machine is around 1.5 years old, so it's nothing new or special. I don't think it's a memory issue either: the D2 instance has around 7 GB of RAM, while my local machine has 12 GB. But 7 GB should be sufficient, shouldn't it?

Does anyone have any clue as to what is going on?

Thank you so much for any help.

Added

I tried monitoring memory usage during the extraction. When the application started, the amount of Modified memory exploded and kept growing, and while it did, the performance reported by my application was great (100+ MB/s). Then the Modified memory started to shrink (which, as far as I know, means it is being flushed to disk), and the performance immediately declined to 20-30 MB/s. A few times the performance improved, and each time I could see the Modified memory increase; moments later the performance declined again as the Modified memory decreased. So it seems that flushing data to disk is what's causing my application's performance problems. But why? And how can I solve it?
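One way to test the write-cache theory directly is to time a plain write of the same amount of data with and without `FileOptions.WriteThrough`. This is a sketch under stated assumptions, not a fix: `WriteProbe` and `MeasureWrite` are hypothetical names, it writes zeros rather than decompressed XML (which takes the decompressor out of the picture), and it reports two rates: one taken when the last `Write` returns, roughly what the extractor's progress lines measure, and one taken after `FileStream.Flush(true)`, once the data has been pushed out of the OS file buffers.

```csharp
using System;
using System.Diagnostics;
using System.IO;

static class WriteProbe
{
    const double BytesPerMB = 1024.0 * 1024.0;

    // Hypothetical probe: writes totalBytes of zeros to path in bufferSize chunks.
    // writeMBps:   rate measured when the last Write() call returns.
    // durableMBps: rate measured after Flush(true), which also clears the
    //              intermediate (OS) file buffers.
    // writeThrough = true opens the file with FileOptions.WriteThrough, asking the
    // OS to write through the system cache instead of deferring the writes.
    public static void MeasureWrite(string path, long totalBytes, int bufferSize,
        bool writeThrough, out double writeMBps, out double durableMBps)
    {
        var options = writeThrough ? FileOptions.WriteThrough : FileOptions.None;
        var buffer = new byte[bufferSize];
        var timer = Stopwatch.StartNew();

        using (var fs = new FileStream(path, FileMode.Create, FileAccess.Write,
                                       FileShare.None, bufferSize, options))
        {
            long remaining = totalBytes;
            while (remaining > 0)
            {
                int chunk = (int)Math.Min(buffer.Length, remaining);
                fs.Write(buffer, 0, chunk);
                remaining -= chunk;
            }

            writeMBps = Rate(totalBytes, timer.Elapsed);
            fs.Flush(true); // flush FileStream's buffer and the OS file buffers
            durableMBps = Rate(totalBytes, timer.Elapsed);
        }
    }

    // MB/s with a guard against a zero elapsed time on tiny test files.
    static double Rate(long bytes, TimeSpan elapsed) =>
        bytes / Math.Max(elapsed.TotalSeconds, 1e-9) / BytesPerMB;
}
```

On the D2, running it on the Temporary Storage drive (assumed to be `D:\` here) with a file much larger than the VM's RAM, e.g. `WriteProbe.MeasureWrite(@"D:\probe.bin", 20L * 1024 * 1024 * 1024, 1 << 20, false, out var w, out var d)`, and then again with `writeThrough: true`, should show whether the sustained rate settles near the 20-30 MB/s seen during extraction. If it does, the slowdown would point to the disk's sustained write speed rather than to the extractor itself.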

Added

Okay, so I tried David's suggestion and ran the application on a D14 instance, and now I get really good disk performance: a steady 180-200+ MB/s. I'll keep testing different instance sizes to see how low I can go and still get good disk performance. It still seems odd that I got such poor disk performance on the D2 instance, given that it has a local SSD.