I have read a lot about indexing and differences between them. Now i am working on query optimization in my project. I have created nonclustered index, that should be used on query execution, but that is not the case. Details below:

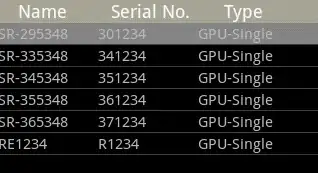

Table:

Index:

CREATE NONCLUSTERED INDEX [_IXProcedure_Deleted_Date] ON [por].[DailyAsset]

(

[Deleted] ASC,

[Date] DESC

)WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, SORT_IN_TEMPDB = OFF, DROP_EXISTING = OFF, ONLINE = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON) ON [PRIMARY]

GO

Query generated by Entity Framework:

exec sp_executesql N'SELECT

[Project1].[C1] AS [C1],

[Project1].[AssetId] AS [AssetId],

[Project1].[Active] AS [Active],

[Project1].[Date] AS [Date]

FROM ( SELECT

[Extent1].[AssetId] AS [AssetId],

[Extent1].[Active] AS [Active],

[Extent1].[Date] AS [Date],

1 AS [C1]

FROM [por].[DailyAsset] AS [Extent1]

WHERE (0 = [Extent1].[Deleted]) AND ([Extent1].[Date] < @p__linq__0)

) AS [Project1]

ORDER BY [Project1].[Date] DESC',N'@p__linq__0 datetime2(7)',@p__linq__0='2014-05-01 00:00:00'

Execution plan:

Missing index details:

The Query Processor estimates that implementing the following index could improve the query cost by 23.8027%.

CREATE NONCLUSTERED INDEX [<Name of Missing Index, sysname,>]

ON [por].[DailyAsset] ([Deleted],[Date])

INCLUDE ([AssetId],[Active])

I am aware that with inclusion of AssetId and Active columns to index, index will be used.

Now, why it is not working without column inclusion?

This is simplified example of another queries, where all columns are fetched as a result. The only solution to (force) index seek usage, is to include all columns in index, which has the same Estimated Subtree cost (obvious).

Another annoying issue here is Sort ignorance. Date columns is in index and set to DESCENDING. It is completly ignored, and ofcourse, Sort operations takes expensive place in execution plan.

UPDATE 1:

As @Jayachandran pointed out, IndexSeek + KeyLookUp should be used in query above, but covering index is well documented, and it assumes that AssetId and Active columns should be included. I agree with that.

I am creating UPDATE 1 to demonstrate covering index usefulness in query below. Same table, larger result set. As far as i can figure it out, not a single columns should not be used in index, and index stays created for Date and Deleted columns.

exec sp_executesql N'SELECT

[Project1].[DailyAssetId] AS [DailyAssetId],

[Project1].[AssetId] AS [AssetId],

[Project1].[CreatedByUserId] AS [CreatedByUserId],

[Project1].[UpdatedByUserId] AS [UpdatedByUserId],

[Project1].[TimeCreated] AS [TimeCreated],

[Project1].[TimeUpdated] AS [TimeUpdated],

[Project1].[Deleted] AS [Deleted],

[Project1].[TimeDeleted] AS [TimeDeleted],

[Project1].[DeletedByUserId] AS [DeletedByUserId],

[Project1].[Active] AS [Active],

[Project1].[Date] AS [Date],

[Project1].[Quantity] AS [Quantity],

[Project1].[TotalBookValue] AS [TotalBookValue],

[Project1].[CostPrice] AS [CostPrice],

[Project1].[CostValue] AS [CostValue],

[Project1].[FairPrice] AS [FairPrice],

[Project1].[FairValue] AS [FairValue],

[Project1].[UnsettledQuantity] AS [UnsettledQuantity],

[Project1].[UnsettledValue] AS [UnsettledValue],

[Project1].[SettlementDate] AS [SettlementDate],

[Project1].[EffectiveDate] AS [EffectiveDate],

[Project1].[PortfolioId] AS [PortfolioId]

FROM ( SELECT

[Extent1].[DailyAssetId] AS [DailyAssetId],

[Extent1].[AssetId] AS [AssetId],

[Extent1].[CreatedByUserId] AS [CreatedByUserId],

[Extent1].[UpdatedByUserId] AS [UpdatedByUserId],

[Extent1].[TimeCreated] AS [TimeCreated],

[Extent1].[TimeUpdated] AS [TimeUpdated],

[Extent1].[Deleted] AS [Deleted],

[Extent1].[TimeDeleted] AS [TimeDeleted],

[Extent1].[DeletedByUserId] AS [DeletedByUserId],

[Extent1].[Active] AS [Active],

[Extent1].[Date] AS [Date],

[Extent1].[Quantity] AS [Quantity],

[Extent1].[TotalBookValue] AS [TotalBookValue],

[Extent1].[CostPrice] AS [CostPrice],

[Extent1].[CostValue] AS [CostValue],

[Extent1].[FairPrice] AS [FairPrice],

[Extent1].[FairValue] AS [FairValue],

[Extent1].[UnsettledQuantity] AS [UnsettledQuantity],

[Extent1].[UnsettledValue] AS [UnsettledValue],

[Extent1].[SettlementDate] AS [SettlementDate],

[Extent1].[EffectiveDate] AS [EffectiveDate],

[Extent1].[PortfolioId] AS [PortfolioId]

FROM [por].[DailyAsset] AS [Extent1]

WHERE (0 = [Extent1].[Deleted]) AND ([Extent1].[Date] < @p__linq__0)

) AS [Project1]

ORDER BY [Project1].[Date] DESC',N'@p__linq__0 datetime2(7)',@p__linq__0='2014-05-01 00:00:00'