This morning, after some struggles (see: Upgrading a neo4j database from 2.0.1 to 2.1.3 fails), I upgraded my database from version 2.0.1 to 2.1.3. My main goal with the upgrade was to gain performance on certain queries (see: Cypher SORT performance).

Everything seems to be working, except that all Cypher queries, without exception, have become much, much slower. Queries that used to take 75ms now take nearly 2000ms.

As I was running on an A1 (1xCPU, ~2GB RAM) VM in Azure, I thought that giving neo4j some more RAM and an extra core would help, but after upgrading to an A2 VM I get more or less the same results.

I'm now wondering: did I lose my indexes by doing a backup and upgrading/using that db? I have perhaps 50K nodes in my db, so it's not that spectacular, right?

I'm still running on an A2 VM (2xCPU, ~4GB RAM), but had to downgrade to 2.0.1 again.

UPDATE: #1 2014-08-12

After reading Michael's first comment on how to inspect my indexes using the shell, I did the following:

- With my 2.0.1 database service running (and performing well), i executed

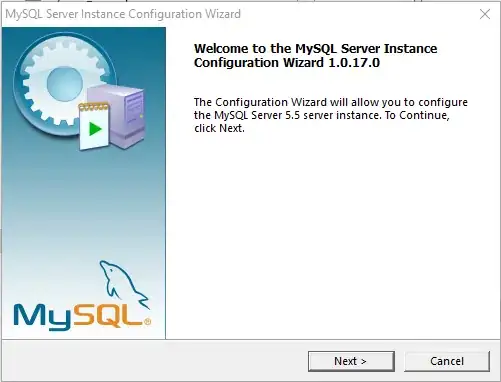

Neo4jShell.batand then executed theSchemacommand. This yielded the following response:

- I uninstalled the 2.0.1 service using the

Neo4jInstall.bat removecommand. - I installed the 2.1.3 service using the

Neo4jInstall installcommand. - With my 2.1.3 database service running, I again executed the

Neo4jShell.batand then executed theschemacommand. This yielded the following response:

I think it is safe to conclude that either the migration process (in 2.1.3) or the backup process (in 2.0.1) has removed the indexes from my database. This does explain why my backed up database is much smaller (~110MB) than the online database (~380MB). After migration to 2.1.3, my database became even smaller (~90MB).

The question now is: is it just a matter of recreating my indexes and being done with it?

UPDATE: #2 2014-08-12

I guess I have answered my own question. After recreating the constraints and indexes, my queries perform like they used to (some even faster, as expected).
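
For anyone in the same situation, here is a minimal sketch of how the statements for recreating the schema could be generated. The labels and properties (`User`, `Post`, `id`, `name`, `createdAt`) are placeholders I made up for illustration, not my actual schema; substitute whatever the `schema` command listed on the old 2.0.1 database. The Cypher it prints uses the Neo4j 2.x syntax (`CREATE CONSTRAINT ON ... ASSERT ... IS UNIQUE` and `CREATE INDEX ON :Label(property)`).

```python
# Hypothetical schema: replace these with the labels/properties that the
# `schema` command reported on the old database. They are NOT from the post.
UNIQUE_CONSTRAINTS = [("User", "id")]
INDEXES = [("User", "name"), ("Post", "createdAt")]


def constraint_statement(label, prop):
    """Cypher (Neo4j 2.x syntax) for a uniqueness constraint."""
    return "CREATE CONSTRAINT ON (n:{0}) ASSERT n.{1} IS UNIQUE;".format(label, prop)


def index_statement(label, prop):
    """Cypher (Neo4j 2.x syntax) for a plain schema index."""
    return "CREATE INDEX ON :{0}({1});".format(label, prop)


def build_statements(constraints, indexes):
    """Return the constraint statements first, then the remaining indexes."""
    constrained = set(constraints)
    statements = [constraint_statement(l, p) for l, p in constraints]
    # A uniqueness constraint already brings its own index, so skip any
    # plain index on the same label/property pair.
    statements += [
        index_statement(l, p) for l, p in indexes if (l, p) not in constrained
    ]
    return statements


if __name__ == "__main__":
    for statement in build_statements(UNIQUE_CONSTRAINTS, INDEXES):
        print(statement)
```

The printed statements can then be pasted into `Neo4jShell.bat` one by one; running `schema` afterwards should show whether the indexes have come online.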