I am trying logistic regression using gradient descent with two data set, I get different result for each of them.

Dataset1 Input

X=

1 2 3

1 4 6

1 7 3

1 5 5

1 5 4

1 6 4

1 3 4

1 4 5

1 1 2

1 3 4

1 7 7

Y=

0

1

1

1

0

1

0

0

0

0

1

Dataset2 input-

x =

1 20 30

1 40 60

1 70 30

1 50 50

1 50 40

1 60 40

1 30 40

1 40 50

1 10 20

1 30 40

1 70 70

y =

0

1

1

1

0

1

0

0

0

0

1

The difference in dataset 1 and dataset2 is only the range of values. When I run my common code for both the data set,, My code gives desired output for dataset one but very weird idea for dataset 2.

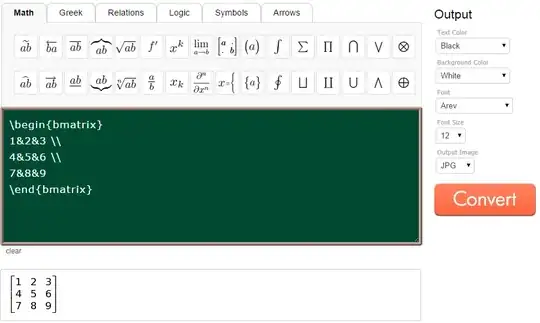

My code goes as follow:

[m,n]=size(x);

x=[ones(m,1),x];

X=x;

%3. In this step we will plot the graph for the given input data set just to see how is the distribution of the two class.

pos = find(y == 1); % This will take the postion or array number from y for all the class that has value 1

neg = find(y == 0); % Similarly this will take the position or array number from y for all class that has value 0

% Now we plot the graph column x1 Vs x2 for y=1 and y=0

plot(X(pos, 2), X(pos,3), '+');

hold on

plot(X(neg, 2), X(neg, 3), 'o');

xlabel('x1 marks in subject 1')

ylabel('y1 marks in subject 2')

legend('pass', 'Failed')

hold off

% Now we limit the x1 and x2 we need to leave or skip the first column x0 because they should stay as 1.

% The critical thing hear to know is that this is not a linear regression but logistic regression, hence the h(hypothesis varies)

% So we calculate the hypothesis that is based on e

% j_theta will be calculated upon all the training set for 1st iteration

%

g=inline('1.0 ./ (1.0 + exp(-z))');

alpha=1;

theta = zeros(size(x(1,:)))'; % the theta has to be a 3*1 matrix so that it can multiply by x that is m*3 matrix

max_iter=2000;

j_theta=zeros(max_iter,1); % j is a zero matrix that is used to store the theta cost function j(theta)

for num_iter=1:max_iter

% Now we calculate the hx or hypothetis, It is calculated here inside no. of iteration because the hupothesis has to be calculated for new theta for every iteration

z=x*theta;

h=g(z); % Here the effect of inline function we used earlier will reflect

h

j_theta(num_iter)=(1/m)*(-y'* log(h) - (1 - y)'*log(1-h)) ; % This formula is the vectorized form of the cost function J(theta) This calculates the cost function

j_theta

theta = theta - (alpha/m) * x' * (1./(1+exp(-x*theta)) - y);

%grad=(1/m) * x' * (h-y); % This formula is the gradient descent formula that calculates the theta value.

%theta=theta - alpha .* grad; % Actual Calculation for theta

theta

end

figure

plot(0:1999, j_theta(1:2000), 'b', 'LineWidth', 2)

hold off

figure

%3. In this step we will plot the graph for the given input data set just to see how is the distribution of the two class.

pos = find(y == 1); % This will take the postion or array number from y for all the class that has value 1

neg = find(y == 0); % Similarly this will take the position or array number from y for all class that has value 0

% Now we plot the graph column x1 Vs x2 for y=1 and y=0

plot(X(pos, 2), X(pos,3), '+');

hold on

plot(X(neg, 2), X(neg, 3), 'o');

xlabel('x1 marks in subject 1')

ylabel('y1 marks in subject 2')

legend('pass', 'Failed')

plot_x = [min(X(:,2))-2, max(X(:,2))+2]; % This min and max decides the length of the decision graph.

% Calculate the decision boundary line

plot_y = (-1./theta(3)).*(theta(2).*plot_x +theta(1));

plot(plot_x, plot_y)

hold off

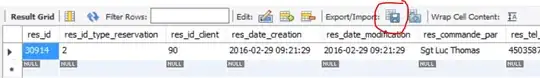

Please find the graph for each data sets as follow:

For data set 1:

For dataset2:

As you can see data set one gives me correct answer.

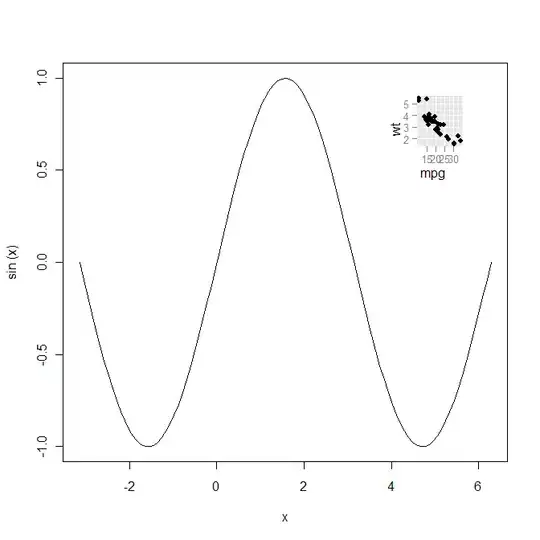

Having said that I believe the datsaet2 has wide range of data probably 10-100, Hence to normalize it I used feature scaling with the dataset2 and got the graph. The decision line formed was correct but a bit below the expected place, see it for yourself.

Dataset2 input with feature scaling:

x =

1.00000 -1.16311 -0.89589

1.00000 -0.13957 1.21585

1.00000 1.39573 -0.89589

1.00000 0.37219 0.51194

1.00000 0.37219 -0.19198

1.00000 0.88396 -0.19198

1.00000 -0.65134 -0.19198

1.00000 -0.13957 0.51194

1.00000 -1.67487 -1.59981

1.00000 -0.65134 -0.19198

1.00000 1.39573 1.91977

y =

0

1

1

1

0

1

0

0

0

0

1

The graph I get after adding feature scaling to my previous code is given below

As you can see if the decision line was a bit up then I would have got the perfect output..

Please help me understand the scenario, why even feature scaling cant help. or if my code has some error,, or if I am missing anything.