I'm working on a demo and the code is simple:

```python
# The config
class Config:
    BROKER_URL = 'redis://127.0.0.1:6379/0'
    CELERY_RESULT_BACKEND = 'redis://127.0.0.1:6379/0'
    CELERY_ACCEPT_CONTENT = ['application/json']


# The task (in appl/task.py, where celery_app is defined; its definition is not shown)
@celery_app.task()
def add(x, y):
    return x + y
```

To start the worker:

```
$ celery -A appl.task.celery_app worker --loglevel=info --broker=redis://localhost:6379/0

 -------------- celery@ALBERTATMP v3.1.13 (Cipater)
---- **** -----
--- * *** * -- Linux-3.2.0-4-amd64-x86_64-with-debian-7.6
-- * - **** ---
- ** ---------- [config]
- ** ---------- .> app: celery_test:0x293ffd0
- ** ---------- .> transport: redis://localhost:6379/0
- ** ---------- .> results: disabled
- *** --- * --- .> concurrency: 2 (prefork)
-- ******* ----
--- ***** ----- [queues]
 -------------- .> celery exchange=celery(direct) key=celery
```

To schedule the task:

```
>>> from appl.task import add
>>> r = add.delay(1, 2)
>>> r.id
'c41d4e22-ccea-408f-b48f-52e3ddd6bd66'
>>> r.task_id
'c41d4e22-ccea-408f-b48f-52e3ddd6bd66'
>>> r.status
'PENDING'
>>> r.backend
<celery.backends.redis.RedisBackend object at 0x1f35b10>
```

The worker then executes the task:

```
[2014-07-29 17:54:37,356: INFO/MainProcess] Received task: appl.task.add[beeef023-c582-42e1-baf7-9e19d9de32a0]
[2014-07-29 17:54:37,358: INFO/MainProcess] Task appl.task.add[beeef023-c582-42e1-baf7-9e19d9de32a0] succeeded in 0.00108124599865s: 3
```

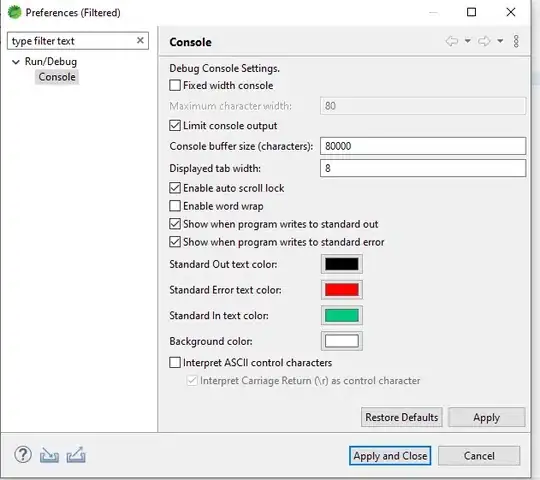
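To check which task id the worker actually ran, the "succeeded" log line can be parsed and its id compared with `r.id` from the shell session. This is a minimal sketch: the regex is inferred only from the two worker log lines shown here (other Celery versions or log formats may differ), and `parse_success` / `Success` are hypothetical helper names, not part of Celery.

```python
import re
from typing import NamedTuple, Optional

# Pattern inferred from the worker log lines in this question; an assumption,
# not a documented Celery log format.
SUCCEEDED = re.compile(
    r"Task (?P<name>[\w.]+)\[(?P<task_id>[0-9a-f-]+)\] "
    r"succeeded in (?P<runtime>[\d.]+)s: (?P<result>.*)$"
)


class Success(NamedTuple):
    name: str       # dotted task name, e.g. appl.task.add
    task_id: str    # the id the worker executed
    runtime: float  # seconds, as printed by the worker
    result: str     # return value as printed in the log


def parse_success(line: str) -> Optional[Success]:
    """Return the fields of a 'succeeded' log line, or None for other lines."""
    m = SUCCEEDED.search(line)
    if m is None:
        return None
    return Success(m["name"], m["task_id"], float(m["runtime"]), m["result"])


line = ("[2014-07-29 17:54:37,358: INFO/MainProcess] Task appl.task.add"
        "[beeef023-c582-42e1-baf7-9e19d9de32a0] succeeded in 0.00108124599865s: 3")
info = parse_success(line)
print(info.task_id)  # beeef023-c582-42e1-baf7-9e19d9de32a0
```

If the parsed `task_id` differs from `r.id`, the log line belongs to a different invocation than the one being inspected in the shell.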

But the result remains PENDING:

```
>>> res = add.AsyncResult(r.id)
>>> res.status
'PENDING'
```
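Celery's documentation describes PENDING as the state of a task that is waiting for execution or unknown: any task id the result backend has no record of is reported as PENDING. The toy in-memory model below illustrates only that lookup rule. It is not Celery's implementation, and `ResultStore`, `store_result` and `status` are hypothetical names. It shows how a status query keeps returning PENDING until something writes a result under that exact id in the store the client reads from.

```python
from typing import Any, Dict, Tuple


class ResultStore:
    """Hypothetical stand-in for a result backend keyed by task id."""

    def __init__(self) -> None:
        self._results: Dict[str, Tuple[str, Any]] = {}

    def store_result(self, task_id: str, state: str, value: Any) -> None:
        # In this model, whoever executes the task records its outcome here.
        self._results[task_id] = (state, value)

    def status(self, task_id: str) -> str:
        # Ids with no stored entry fall back to PENDING,
        # mirroring the documented "waiting or unknown" meaning.
        state, _ = self._results.get(task_id, ("PENDING", None))
        return state


store = ResultStore()
task_id = "c41d4e22-ccea-408f-b48f-52e3ddd6bd66"
print(store.status(task_id))  # PENDING (nothing stored yet)
store.store_result(task_id, "SUCCESS", 3)
print(store.status(task_id))  # SUCCESS
```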

I've tried the suggestions in the official FAQ, but they did not help:

```
>>> celery_app.conf['CELERY_IGNORE_RESULT']
False
```

What did I do wrong? Thanks!