I did some LDA using scikit-learn's LDA function and I noticed in my resulting plots that there is a non-zero correlation between LDs.

from sklearn.lda import LDA

sklearn_lda = LDA(n_components=2)

transf_lda = sklearn_lda.fit_transform(X, y)

This is very concerning, so I went back and used the Iris data set as reference. I also found in the scikit documentation the same non-zero correlation LDA plot, which I could reproduce.

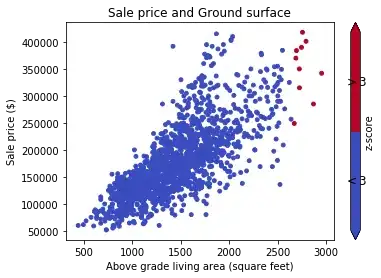

Anyway, to give you an overview how it looks like

- Plot in the upper left: there is clearly something wrong here

- Plot in the lower left: This is on raw data, not a correct approach, but one attempt to replicate scikit's resuls

- Plots in the upper right and lower right: this is how it should actually look like.

I have put the code into an IPython notebook if you want to take a look at it and try it yourself.

The scikit-documentation that is consistent with the (wrong) result in the upper-left: http://scikit-learn.org/stable/auto_examples/decomposition/plot_pca_vs_lda.html

The LDA in R, which is shown in the lower right: http://tgmstat.wordpress.com/2014/01/15/computing-and-visualizing-lda-in-r/