What you're trying to do is write a very simple web scraper: you want to find all the links in a page and download them, but you don't want to do so recursively, or do any fancy filtering or postprocessing.

You could do this by using a full-on web scraper library like scrapy and just restricting it to a depth of 1 and not enabling anything else.

Or you could do it manually. Pick your favorite HTML parser (BeautifulSoup is easy to use; html.parser is built into the stdlib; there are dozens of other choices). Download the page, then parse the resulting file, scan it for img, a, script, etc. tags with URLs, then download those URLs as well, and you're done.
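To make the "scan it for tags with URLs" step concrete, here's a minimal sketch using only the stdlib parser. The names `LinkCollector`, `find_urls`, and `URL_ATTRS` are made up for illustration, and the tag-to-attribute table is an assumption you'd extend for whatever tags you care about. The import is wrapped so it works under both the Python 3 module name (html.parser) and the Python 2 one (HTMLParser).

```python
try:
    from html.parser import HTMLParser  # Python 3 module name
except ImportError:
    from HTMLParser import HTMLParser   # Python 2 module name

# Assumed table: which attribute carries the URL for each tag we care about.
URL_ATTRS = {"img": "src", "script": "src", "a": "href", "link": "href"}


class LinkCollector(HTMLParser):
    """Collects URL-bearing attribute values in document order."""

    def __init__(self):
        HTMLParser.__init__(self)
        self.urls = []

    def handle_starttag(self, tag, attrs):
        wanted = URL_ATTRS.get(tag)
        if wanted is None:
            return
        for name, value in attrs:
            # Skip attributes that are present but empty.
            if name == wanted and value:
                self.urls.append(value)


def find_urls(html):
    """Return every URL found in the given HTML text (no downloading)."""
    collector = LinkCollector()
    collector.feed(html)
    collector.close()
    return collector.urls
```

This only finds the URLs, exactly as written in the page; downloading them is a separate loop over the result.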

If you want this all to be stored in a single file, there are a number of "web archive file" formats that exist, and different browsers (and other tools) support different ones. The basic idea of most of them is that you create a zipfile with the files in some specific layout and some extension like .webarch instead of .zip. That part's easy. But you also need to change all the absolute links to be relative links, which is a little harder. Still, it's not that hard with a tool like BeautifulSoup or html.parser or lxml.
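As a rough sketch of the zipfile idea: the layout below (`index.html` plus a `files/<host>/<path>` tree) is invented for illustration and is not any real browser's archive format, and `local_name` and `build_archive` are hypothetical helpers. To keep it short, the link rewriting is a plain string replacement; for real pages you'd rewrite the attributes with your HTML parser instead, since a naive replace can go wrong when one URL is a prefix of another.

```python
import io
import posixpath
import zipfile

try:
    from urllib.parse import urlparse  # Python 3
except ImportError:
    from urlparse import urlparse      # Python 2


def local_name(url):
    """Map an absolute URL to a relative path inside the archive (assumed layout)."""
    parts = urlparse(url)
    path = parts.path.lstrip("/") or "index.html"
    if path.endswith("/"):
        path += "index.html"
    return posixpath.join("files", parts.netloc, path)


def build_archive(page_html, resources):
    """Pack a page and its already-downloaded resources into one zip.

    page_html is the page as text; resources maps absolute URL -> bytes.
    Returns the zip file's contents as bytes.
    """
    buf = io.BytesIO()
    with zipfile.ZipFile(buf, "w", zipfile.ZIP_DEFLATED) as archive:
        for url, data in resources.items():
            name = local_name(url)
            # Naive rewrite: swap the absolute URL for the relative path.
            page_html = page_html.replace(url, name)
            archive.writestr(name, data)
        archive.writestr("index.html", page_html)
    return buf.getvalue()
```

You'd write the returned bytes to a file with whatever extension your target tool expects.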

As a side note, if you're not actually using the URLopener for anything, you're making life harder for yourself for no good reason; just use urlopen. Also, as the docs mention, you should be using urllib2, not urllib; in fact urllib.urlopen is deprecated as of 2.6. And, even if you do need to use an explicit opener, as the docs say, "Unless you need to support opening objects using schemes other than http:, ftp:, or file:, you probably want to use FancyURLopener."

Here's a simple example (enough to get you started, once you decide exactly what you do and don't want) using BeautifulSoup:

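    import os
    import urllib2
    import urlparse

    import bs4

    def saveUrl(url):
        # Download the page and save a copy to disk.
        page = urllib2.urlopen(url).read()
        with open("file.html", "wb") as f:
            f.write(page)
        # Parse the downloaded bytes, not the (already closed) file object.
        soup = bs4.BeautifulSoup(page, "html.parser")
        # Only look at img tags that actually have a src attribute.
        for img in soup('img', src=True):
            imgurl = img['src']
            # Mirror the URL's path under file.html_files/.
            imgpath = urlparse.urlparse(imgurl).path
            imgpath = 'file.html_files/' + imgpath.lstrip('/')
            # makedirs raises if the directory already exists, so check first.
            imgdir = os.path.dirname(imgpath)
            if not os.path.isdir(imgdir):
                os.makedirs(imgdir)
            # Write the response body, not the response object itself.
            data = urllib2.urlopen(imgurl).read()
            with open(imgpath, "wb") as f:
                f.write(data)

    saveUrl("http://emma-watson.net")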

This code won't work if there are any images with relative links. To handle that, you need to call urlparse.urljoin to attach a base URL. And, since the base URL can be set in various different ways, if you want to handle every page anyone will ever write, you will need to read up on the documentation and write the appropriate code. It's at this point that you should start looking at something like scrapy. But, if you just want to handle a few sites, just writing something that works for those sites is fine.
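For the common cases, the urljoin step can be sketched like this. `resolve` and its `base_href` parameter are hypothetical names: the idea is that you pass in the value of a `<base href="...">` tag if your parser found one, and otherwise the page's own URL is used as the base. It doesn't try to cover every way a base URL can be set. The import is wrapped so the same code runs with Python 2's urlparse module or Python 3's urllib.parse.

```python
try:
    from urllib.parse import urljoin  # Python 3
except ImportError:
    from urlparse import urljoin      # Python 2


def resolve(page_url, link, base_href=None):
    """Turn a link found in a page into an absolute URL.

    page_url  -- the URL the page was downloaded from
    link      -- the raw src/href value from the page
    base_href -- value of <base href>, if the page has one (assumption:
                 the caller has already extracted it)
    """
    # A <base href> may itself be relative to the page URL.
    base = urljoin(page_url, base_href) if base_href else page_url
    return urljoin(base, link)


page = "http://example.com/a/b.html"
print(resolve(page, "img/x.png"))                   # relative to the page's directory
print(resolve(page, "/img/x.png"))                  # relative to the site root
print(resolve(page, "http://other.example/y.png"))  # already absolute, left alone
```

In the example above, you'd call something like this on each `img['src']` before passing it to urlopen.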

Meanwhile, if any of the images are loaded by JavaScript after page-load time—which is pretty common on modern websites—nothing will work, short of actually running that JavaScript code. At that point, you probably want a browser automation tool like Selenium or a browser simulator tool like Mechanize+PhantomJS, not a scraper.

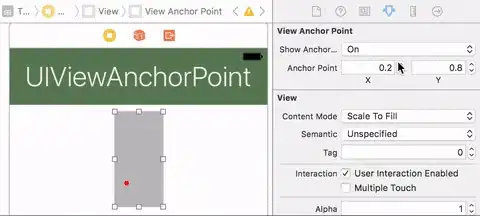

The screen of the opened file on my disk: