I have a program with three pools of structs. For each pool I keep one list of used structs and another list of unused structs. During execution the program takes structs from the pool and returns them on demand. There is also a garbage collector that cleans up "zombie" structs and returns them to the pool.
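To make the setup concrete, here is a minimal sketch of one such pool. The names (`node_t`, `pool_t`, `pool_acquire`, `pool_release`) and the `payload` field are made up for illustration; the real structs and the garbage collector are more involved. The sketch assumes that nodes are only touched when they are first handed out, which would match the RSS growing as the pool gets used.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical node type; the real structs carry application data. */
typedef struct node {
    struct node *prev, *next;
    int payload;
} node_t;

typedef struct pool {
    node_t *storage;   /* one big allocation made up front */
    size_t  capacity;  /* number of nodes in storage */
    size_t  fresh;     /* index of the first node never handed out */
    node_t *unused;    /* list of nodes that were returned to the pool */
    node_t *used;      /* doubly linked list of nodes currently in use */
    size_t  n_used;
} pool_t;

/* Reserve the storage without touching it. Returns 0 on success, -1 on failure. */
static int pool_init(pool_t *p, size_t capacity) {
    p->storage = malloc(capacity * sizeof *p->storage);
    if (p->storage == NULL) return -1;
    p->capacity = capacity;
    p->fresh = 0;
    p->unused = NULL;
    p->used = NULL;
    p->n_used = 0;
    return 0;
}

/* Take a node: prefer a returned node, otherwise the next untouched one. */
static node_t *pool_acquire(pool_t *p) {
    node_t *n;
    if (p->unused != NULL) {
        n = p->unused;
        p->unused = n->next;
    } else if (p->fresh < p->capacity) {
        n = &p->storage[p->fresh++];
    } else {
        return NULL; /* pool exhausted */
    }
    n->prev = NULL;
    n->next = p->used;
    if (p->used != NULL) p->used->prev = n;
    p->used = n;
    p->n_used++;
    return n;
}

/* Move a node from the used list to the unused list. */
static void pool_release(pool_t *p, node_t *n) {
    assert(n != NULL);
    if (n->prev != NULL) n->prev->next = n->next; else p->used = n->next;
    if (n->next != NULL) n->next->prev = n->prev;
    n->prev = NULL;
    n->next = p->unused;
    p->unused = n;
    p->n_used--;
}

static void pool_destroy(pool_t *p) {
    free(p->storage);
    p->storage = NULL;
}
```

Note that a released node stays inside the same big allocation, so from the OS point of view nothing has been given back.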

At the beginning of the execution, the virtual memory, as expected, shows around 10GB* of memory allocated, and as the program uses the pool, the RSS memory increases.

Although the used nodes go back to the pool and are marked as unused, the RSS memory does not decrease. I expect this: the OS doesn't know what I'm doing with the memory, so it cannot tell whether I'm really using it or just keeping it in a pool.

What I would like to do is to force the unused memory to go back to virtual memory whenever I want, for example, when the RSS memory increases above X GB.
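For the "above X GB" trigger, something like the following is what I have in mind. This is only a sketch and assumes Linux, where the second field of `/proc/self/statm` is the resident set size in pages; the function names `current_rss_bytes` and `rss_exceeds` are hypothetical.

```c
#include <assert.h>
#include <stdio.h>
#include <unistd.h>

/* Return the current resident set size in bytes, or -1 on failure.
 * Assumes Linux: /proc/self/statm holds "size resident ..." in pages. */
static long long current_rss_bytes(void) {
    FILE *f = fopen("/proc/self/statm", "r");
    if (f == NULL) return -1;
    long size_pages = 0, resident_pages = 0;
    int fields = fscanf(f, "%ld %ld", &size_pages, &resident_pages);
    fclose(f);
    if (fields != 2) return -1;
    long page_size = sysconf(_SC_PAGESIZE);
    if (page_size <= 0) return -1;
    return (long long)resident_pages * page_size;
}

/* Return 1 if the RSS is above limit_bytes, 0 if not, -1 if it cannot be read. */
static int rss_exceeds(long long limit_bytes) {
    assert(limit_bytes >= 0);
    long long rss = current_rss_bytes();
    if (rss < 0) return -1;
    return rss > limit_bytes;
}
```

The program would call `rss_exceeds(X)` periodically, for example after each garbage collection pass, and only then try to give memory back.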

Is there any way, given a pointer to a memory area, to mark that area so that it is no longer counted as resident? I know this is the operating system's responsibility, but maybe there is a way to force it.
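To show the kind of call I mean: on Linux (an assumption, and I am not sure this is the right tool) `madvise` with `MADV_DONTNEED` looks like a candidate. The sketch below uses a hypothetical helper, `release_range`, that rounds a region inward to whole pages, because `madvise` requires a page-aligned start address.

```c
#define _DEFAULT_SOURCE
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <sys/mman.h>
#include <unistd.h>

/* Hypothetical helper: tell the kernel that the whole pages fully contained
 * in [addr, addr + len) are not needed. Returns the number of bytes covered
 * by the call, 0 if the range holds no complete page, or -1 on error. */
static long release_range(void *addr, size_t len) {
    assert(addr != NULL);
    long ps = sysconf(_SC_PAGESIZE);
    if (ps <= 0) return -1;
    uintptr_t page = (uintptr_t)ps;
    /* Round the start up and the end down to page boundaries. */
    uintptr_t start = ((uintptr_t)addr + page - 1) & ~(page - 1);
    uintptr_t end = ((uintptr_t)addr + len) & ~(page - 1);
    if (end <= start) return 0;
    if (madvise((void *)start, end - start, MADV_DONTNEED) != 0) return -1;
    return (long)(end - start);
}
```

As far as I understand, for private anonymous memory the contents of those pages are discarded and they read back as zeros on the next access. That would also wipe any list pointers stored inside the unused structs, so the unused list would have to be tracked outside the released pages.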

Or maybe I shouldn't care about this at all. What do you think?

Thanks in advance.

- Note 1: This program is used in High Performance Computing, that's why it's using this amount of memory.

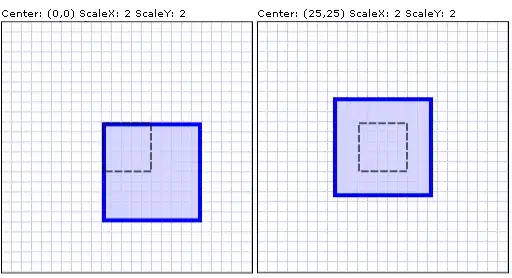

The picture above shows the pool usage versus the memory usage for a few files. As you can see, the sudden drops in pool usage are due to the garbage collector. What I would like to see is those drops reflected in the memory usage.