I have a stereo camera system and correctly calibrate it using both, cv::calibrateCamera and cv::stereoCalibrate. My reprojection error seems to be okay:

- Cam0: 0.401427

- Cam1: 0.388200

- Stereo: 0.399642

I check my calibration by calling cv::stereoRectify and transforming my images using cv::initUndistortRectifyMap and cv::remap. The result is shown below (Something strange I noticed is when displaying the rectified images there are usually artifacts in form of a deformed copy of the original image on one or sometimes even both images):

I also correctly estimate the position of my markers in pixel coordinates using cv::findContours on a thresholded HSV image.

Unfortunately, when I now try to cv::triangulatePoints my results are very poor compared to my estimated coordinates, especially in x-direction:

P1 = { 58 (±1), 150 (±1), -90xx (±2xxx) } (bottom)

P2 = { 115 (±1), -20 (±1), -90xx (±2xxx) } (right)

P3 = { 1155 (±6), 575 (±3), 60xxx (±20xxx) } (top-left)

Those are the results in mm in camera coordinates. Both cameras are positioned approximately 550 mm away from the checkerboard and the square size is 13 mm. Apparently, my results are not even close to what I expect (negative and huge z-coordinates).

So my questions are:

- I followed the

stereo_calib.cppsample quite closely and I seem to at least visually obtain good result (see reprojection error). Why are my triangulation results so poor? - How can I transform my results to the real-world coordinate system so I can actually check my results quantitatively? Do I have to do it manually as shown over here, or is there some OpenCV functions for that matter?

Here is my code:

std::vector<std::vector<cv::Point2f> > imagePoints[2];

std::vector<std::vector<cv::Point3f> > objectPoints;

imagePoints[0].resize(s->nrFrames);

imagePoints[1].resize(s->nrFrames);

objectPoints.resize( s->nrFrames );

// [Obtain image points..]

// cv::findChessboardCorners, cv::cornerSubPix

// Calc obj points

for( int i = 0; i < s->nrFrames; i++ )

for( int j = 0; j < s->boardSize.height; j++ )

for( int k = 0; k < s->boardSize.width; k++ )

objectPoints[i].push_back( Point3f( j * s->squareSize, k * s->squareSize, 0 ) );

// Mono calibration

cv::Mat cameraMatrix[2], distCoeffs[2];

cameraMatrix[0] = cv::Mat::eye(3, 3, CV_64F);

cameraMatrix[1] = cv::Mat::eye(3, 3, CV_64F);

std::vector<cv::Mat> tmp0, tmp1;

double err0 = cv::calibrateCamera( objectPoints, imagePoints[0], cv::Size( 656, 492 ),

cameraMatrix[0], distCoeffs[0], tmp0, tmp1,

CV_CALIB_FIX_PRINCIPAL_POINT + CV_CALIB_FIX_ASPECT_RATIO );

std::cout << "Cam0 reproj err: " << err0 << std::endl;

double err1 = cv::calibrateCamera( objectPoints, imagePoints[1], cv::Size( 656, 492 ),

cameraMatrix[1], distCoeffs[1], tmp0, tmp1,

CV_CALIB_FIX_PRINCIPAL_POINT + CV_CALIB_FIX_ASPECT_RATIO );

std::cout << "Cam1 reproj err: " << err1 << std::endl;

// Stereo calibration

cv::Mat R, T, E, F;

double err2 = cv::stereoCalibrate(objectPoints, imagePoints[0], imagePoints[1],

cameraMatrix[0], distCoeffs[0], cameraMatrix[1], distCoeffs[1],

cv::Size( 656, 492 ), R, T, E, F,

cv::TermCriteria(CV_TERMCRIT_ITER+CV_TERMCRIT_EPS, 100, 1e-5),

CV_CALIB_USE_INTRINSIC_GUESS + // because of mono calibration

CV_CALIB_SAME_FOCAL_LENGTH +

CV_CALIB_RATIONAL_MODEL +

CV_CALIB_FIX_K3 + CV_CALIB_FIX_K4 + CV_CALIB_FIX_K5);

std::cout << "Stereo reproj err: " << err2 << std::endl;

// StereoRectify

cv::Mat R0, R1, P0, P1, Q;

Rect validRoi[2];

cv::stereoRectify( cameraMatrix[0], distCoeffs[0],

cameraMatrix[1], distCoeffs[1],

cv::Size( 656, 492 ), R, T, R0, R1, P0, P1, Q,

CALIB_ZERO_DISPARITY, 1, cv::Size( 656, 492 ), &validRoi[0], &validRoi[1]);

// [Track marker..]

// cv::cvtColor, cv::inRange, cv::morphologyEx, cv::findContours, cv::fitEllipse, *calc ellipsoid centers*

// Triangulation

unsigned int N = centers[0].size();

cv::Mat pnts3D;

cv::triangulatePoints( P0, P1, centers[0], centers[1], pnts3D );

cv::Mat t = pnts3D.t();

cv::Mat pnts3DT = cv::Mat( N, 1, CV_32FC4, t.data );

cv::Mat resultPoints;

cv::convertPointsFromHomogeneous( pnts3DT, resultPoints );

Finally, resultPoints is supposed to contain column vectors of my 3D positions in camera coordinates.

Edit: I removed some unnecessary conversions to shorten the code

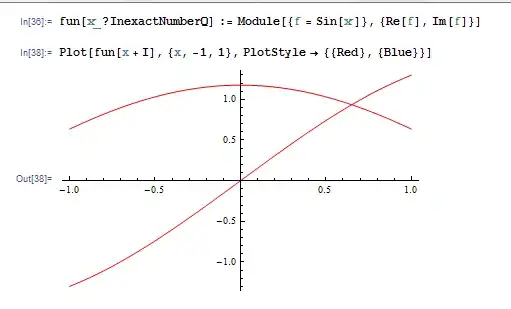

Edit2: The results I get using @marols suggested solution for triangulation

P1 = { 111, 47, 526 } (bottom-right)

P2 = { -2, 2, 577 } (left)

P3 = { 64, -46, 616 } (top)