I'm having a really weird problem with `NSUInteger` in iOS 7.

Everything worked perfectly before iOS 7, so I guess it's related to the 64-bit support in iOS 7.

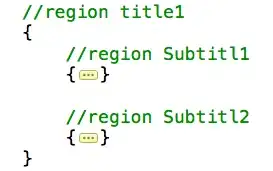

My code is very simple:

```objc
if (blah blah blah) {
    NSUInteger firstRow = 0;
    firstRow = ([self.types containsObject:self.selectedMajorType] ?
                [self.types indexOfObject:self.selectedMajorType] + 1 : 0);
    ...
}
```
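For comparison, here is a minimal sketch of the same lookup that calls `indexOfObject:` only once and checks for `NSNotFound`, which `NSArray` returns when the object is not in the array. `FirstRowForType` and the sample array are hypothetical stand-ins for the real `self.types` / `self.selectedMajorType`; the sketch does not by itself explain the 0, but it removes the two-call ternary and logs the value in a way that is correct on both 32-bit and 64-bit.

```objc
#import <Foundation/Foundation.h>
#include <assert.h>

// Hypothetical helper: returns the 1-based row of `selected` in `types`,
// or 0 when `selected` is not in the array.
static NSUInteger FirstRowForType(NSArray *types, id selected) {
    // indexOfObject: returns NSNotFound when the object is absent, so a single
    // lookup replaces the containsObject: / indexOfObject: pair.
    NSUInteger index = [types indexOfObject:selected];
    return (index == NSNotFound) ? 0 : index + 1;
}

int main(void) {
    @autoreleasepool {
        NSArray *types = @[@"Major", @"Minor"];  // stand-in for self.types
        NSUInteger firstRow = FirstRowForType(types, @"Major");
        assert(firstRow == 1);
        assert(FirstRowForType(types, @"Other") == 0);

        // NSUInteger is unsigned long on 64-bit (arm64) and unsigned int on
        // 32-bit, so cast to unsigned long and use %lu on both.
        NSLog(@"firstRow = %lu", (unsigned long)firstRow);
    }
    return 0;
}
```

The explicit `(unsigned long)` cast with `%lu` is the pattern clang suggests when it warns about passing `NSUInteger` to a format string on 64-bit; `%d` or `%u` can print misleading values there.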

According to my console, `[self.types containsObject:self.selectedMajorType]` is true and `[self.types indexOfObject:self.selectedMajorType] + 1` is 1. There's no doubt about that, and `indexOfObject:` also returns an `NSUInteger` (according to Apple's documentation).

Here's the screenshot:

But `firstRow` is always freaking **0**.


This is so creepy; I don't know what's going on with `NSUInteger`. Can someone help me? Thanks a lot!

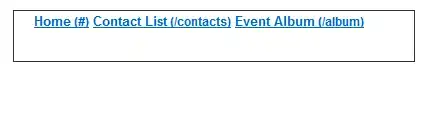

**New finding**

I guess this is the problem? It's weird.