I need a way to store sets of arbitrary size so that they can be queried quickly later on. I'll need to query the resulting data structure with a subset, or with a set that is already stored, and get back every stored set that contains it.

=== Later edit: To clarify, an accepted answer to this question would be a link to a study that proposes a solution to this problem. I'm not expecting people to develop the algorithm themselves. I've been looking over the tuple clustering algorithm found here, but it's not exactly what I want: as far as I understand, it 'clusters' the tuples into simpler, discrete/approximate forms and loses the original tuples.

Now, an even simpler example:

[alpha, beta, gamma, delta] [alpha, epsilon, delta] [gamma, niu, omega] [omega, beta]

Query:

[alpha, delta]

Result:

[alpha, beta, gamma, delta] [alpha, epsilon, delta]

So the set elements are just that: unique, unrelated elements. Forget about types and values. The elements can be tested against each other for equality and that's it. I'm looking for an established algorithm (which probably has a name and a scientific paper on it) rather than one created now, on the spot.

== Original examples:

For example, say the database contains these sets

[A1, B1, C1, D1], [A2, B2, C1], [A3, D3], [A1, D3, C1]

If I use [A1, C1] as a query, these two sets should be returned as a result:

[A1, B1, C1, D1], [A1, D3, C1]
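To pin down the query semantics, here is a minimal brute-force sketch in Python: a stored set matches when it contains every element of the query. The name `find_supersets` is made up for illustration. It scans the whole database on every query, so it is only a baseline that a proper index would have to beat.

```python
def find_supersets(database, query):
    """Return every stored set that contains all elements of the query."""
    query = frozenset(query)
    # `query <= stored` is Python's subset test for sets.
    return [stored for stored in database if query <= stored]


database = [
    frozenset({"A1", "B1", "C1", "D1"}),
    frozenset({"A2", "B2", "C1"}),
    frozenset({"A3", "D3"}),
    frozenset({"A1", "D3", "C1"}),
]

matches = find_supersets(database, {"A1", "C1"})
for match in matches:
    print(sorted(match))
```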

Example 2:

Database:

[Gasoline amount: 5L, Distance to Berlin: 240km, car paint: red]

[Distance to Berlin: 240km, car paint: blue, number of car seats: 2]

[number of car seats: 2, Gasoline amount: 2L]

Query:

[Distance to Berlin: 240km]

Result:

[Gasoline amount: 5L, Distance to Berlin: 240km, car paint: red]

[Distance to Berlin: 240km, car paint: blue, number of car seats: 2]

There can be an unlimited number of 'fields' such as Gasoline amount. A solution would probably involve the database grouping and linking sets that have common states (such as Gasoline amount: 5L) in such a way that the query is as efficient as possible.
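Grouping sets by the states they share is essentially what an inverted index does: each element maps to the ids of the sets that contain it, and a query intersects those id lists. The sketch below is only an illustration under assumptions. The class and method names (`InvertedIndex`, `add`, `supersets_of`) are made up, and it assumes the elements are hashable, which is slightly more than plain equality testing.

```python
from collections import defaultdict


class InvertedIndex:
    """Hypothetical sketch: element -> ids of the stored sets containing it."""

    def __init__(self):
        self._sets = []                    # stored sets, addressed by position
        self._postings = defaultdict(set)  # element -> set ids

    def add(self, elements):
        stored = frozenset(elements)
        set_id = len(self._sets)
        self._sets.append(stored)
        for element in stored:
            self._postings[element].add(set_id)
        return set_id

    def supersets_of(self, query):
        query = frozenset(query)
        if not query:
            return list(self._sets)  # every set contains the empty set
        # .get() avoids creating empty entries for unknown elements.
        postings = [self._postings.get(element) for element in query]
        if any(p is None for p in postings):
            return []  # some query element appears in no stored set
        # Intersect starting from the shortest list to keep the work small.
        postings.sort(key=len)
        ids = set(postings[0])
        for p in postings[1:]:
            ids &= p
            if not ids:
                break
        return [self._sets[i] for i in sorted(ids)]


index = InvertedIndex()
index.add(["Gasoline amount: 5L", "Distance to Berlin: 240km", "car paint: red"])
index.add(["Distance to Berlin: 240km", "car paint: blue", "number of car seats: 2"])
index.add(["number of car seats: 2", "Gasoline amount: 2L"])

result = index.supersets_of(["Distance to Berlin: 240km"])
```

Here `result` holds the first two stored sets. Query cost depends mainly on how many sets share the rarest element of the query, not on the total number of stored sets.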

What algorithms are there for such needs?

I am hoping there is already an established solution to this problem instead of just trying to find my own on the spot, which might not be as efficient as one tested and improved upon by other people over time.

Clarifications:

- If it helps answer the question, I intend to use them for storing states. Simple example: [Has milk, Doesn't have eggs, Has Sugar]

- I'm thinking such a requirement might require graphs or multidimensional arrays, but I'm not sure

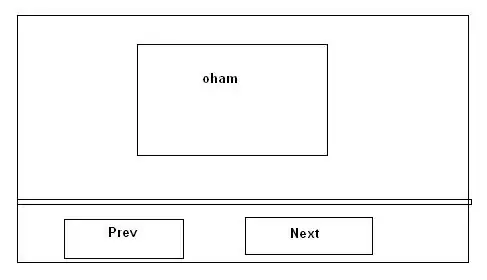

Conclusion:

I've implemented the two algorithms proposed in the answers, Set-Trie and Inverted Index, and did some rudimentary profiling on them. The chart shows the duration of a query for a given set for each algorithm. Both algorithms worked on the same randomly generated data set consisting of sets of integers. The algorithms seem equivalent (or nearly so) performance-wise.
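For readers unfamiliar with the set-trie idea, here is a simplified Python sketch of a superset query on one. It is not the implementation that was profiled. The names (`SetTrie`, `add`, `supersets_of`) are made up, and it assumes the elements are hashable and have a total order (as the integers in the test data do), which goes beyond the equality-only requirement stated earlier. Each set is stored as a path of its elements in sorted order.

```python
class _Node:
    __slots__ = ("children", "terminal")

    def __init__(self):
        self.children = {}     # element -> child node
        self.terminal = False  # True if a stored set ends at this node


class SetTrie:
    """Hypothetical sketch: each set is a root-to-node path of sorted elements."""

    def __init__(self):
        self._root = _Node()

    def add(self, elements):
        node = self._root
        for element in sorted(set(elements)):
            node = node.children.setdefault(element, _Node())
        node.terminal = True

    def supersets_of(self, query):
        results = []
        self._search(self._root, sorted(set(query)), 0, [], results)
        return results

    def _search(self, node, query, pos, path, results):
        if pos == len(query):
            # Every query element is matched: all sets below here qualify.
            if node.terminal:
                results.append(list(path))
            for label, child in node.children.items():
                path.append(label)
                self._search(child, query, pos, path, results)
                path.pop()
            return
        wanted = query[pos]
        for label, child in node.children.items():
            if label > wanted:
                # Paths are sorted, so `wanted` cannot appear below this child.
                continue
            next_pos = pos + 1 if label == wanted else pos
            path.append(label)
            self._search(child, query, next_pos, path, results)
            path.pop()


trie = SetTrie()
for stored in ([1, 2, 3, 4], [1, 5, 4], [3, 6, 7], [7, 2]):
    trie.add(stored)

found = trie.supersets_of([1, 4])
```

The pruning on `label > wanted` is what saves work compared with a full scan: whole branches are skipped as soon as the sorted order shows they cannot contain the next query element.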