I'm seeing very poor performance when fetching multiple keys from Memcache using ndb.get_multi() in App Engine (Python).

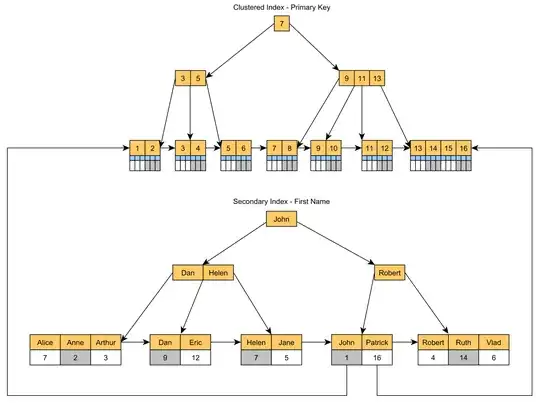

I am fetching ~500 small objects, all of which are in memcache. If I do this using ndb.get_multi(keys), it takes 1500 ms or more. Here is typical output from App Stats:

[App Stats screenshots not reproduced here]

As you can see, all the data is served from memcache. Most of the time is reported as being outside of RPC calls. However, my code is about as minimal as you can get, so if the time is spent on CPU it must be somewhere inside ndb:

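```python
from google.appengine.api import memcache
from google.appengine.ext import ndb

# Get the set of keys for the items. This runs very quickly.
item_keys = memcache.get(items_memcache_key)

# Get ~500 small items from memcache. This is very slow (~1500 ms).
items = ndb.get_multi(item_keys)
```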

The first memcache.get you see in App Stats is the single fetch that returns the set of keys. The second memcache.get is issued by the ndb.get_multi call.

The items I am fetching are super-simple:

```python
from google.appengine.ext import ndb


class Item(ndb.Model):
    name = ndb.StringProperty(indexed=False)
    image_url = ndb.StringProperty(indexed=False)
    image_width = ndb.IntegerProperty(indexed=False)
    image_height = ndb.IntegerProperty(indexed=False)
```

Is this some kind of known ndb performance issue? Something to do with deserialization cost? Or is it a memcache issue?
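One way to probe the deserialization hypothesis outside App Engine is a micro-benchmark that decodes ~500 small records one value at a time versus as a single blob. This is a minimal sketch that uses stdlib `pickle` purely as a stand-in for ndb's own entity decoding (an assumption: ndb caches entities in memcache in its own serialized form, not pickle, so absolute numbers will differ). The record fields mirror the `Item` model; `make_records` and `time_decode` are hypothetical helpers, and the code is written for Python 3 (`time.perf_counter`).

```python
import pickle
import time


def make_records(n):
    """Build n small dicts shaped like the Item model (hypothetical data)."""
    return [
        {
            "name": "item-%d" % i,
            "image_url": "http://example.com/%d.png" % i,
            "image_width": 100,
            "image_height": 100,
        }
        for i in range(n)
    ]


def time_decode(n=500, repeat=20):
    """Return (per_object_seconds, single_blob_seconds) for decoding n records."""
    records = make_records(n)
    # One serialized value per record, like one memcache value per entity.
    separate = [pickle.dumps(r) for r in records]
    # All records serialized together, like a single-blob cache entry.
    blob = pickle.dumps(records)

    start = time.perf_counter()
    for _ in range(repeat):
        decoded_separate = [pickle.loads(s) for s in separate]
    per_object = (time.perf_counter() - start) / repeat

    start = time.perf_counter()
    for _ in range(repeat):
        decoded_blob = pickle.loads(blob)
    single_blob = (time.perf_counter() - start) / repeat

    # Both paths must yield the same data for the comparison to be fair.
    assert decoded_separate == decoded_blob == records
    return per_object, single_blob


if __name__ == "__main__":
    per_object, single_blob = time_decode()
    print("decode 500 separate values: %.3f ms" % (per_object * 1000))
    print("decode one 500-item blob:   %.3f ms" % (single_blob * 1000))
```

If the per-value path is not dramatically slower here, the per-entity overhead is more likely in ndb's own machinery than in raw decoding.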

I found that if instead of fetching 500 objects, I instead aggregate all the data into a single blob, my function runs in 20ms instead of >1500ms:

```python
import json

from google.appengine.api import memcache
from google.appengine.ext import ndb

# Get the set of keys for the items. This runs very quickly.
item_keys = memcache.get(items_memcache_key)

# Get individual item data.
# If we get all the data from memcache as a single blob it is very fast (~20 ms).
item_data = memcache.get(items_data_key)
if not item_data:
    items = ndb.get_multi(item_keys)
    flat_data = json.dumps([{'name': item.name} for item in items])
    memcache.add(items_data_key, flat_data)
    # Use the freshly built blob on a cache miss as well.
    item_data = flat_data
```
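The workaround above is a cache-aside pattern: read one precomputed blob and, only on a miss, fall back to the per-entity fetch and write the blob back. A minimal sketch of the same logic that runs without the App Engine SDK, assuming a dict-backed `FakeCache` (hypothetical) in place of the memcache client and a hypothetical `load_items` callback in place of `ndb.get_multi(item_keys)`; items are plain dicts here rather than ndb entities.

```python
import json


class FakeCache(object):
    """Hypothetical stand-in exposing memcache-style get/add on a dict."""

    def __init__(self):
        self._data = {}

    def get(self, key):
        return self._data.get(key)

    def add(self, key, value):
        # Like memcache add: only store the value if the key is absent.
        if key in self._data:
            return False
        self._data[key] = value
        return True


def get_item_data(cache, data_key, load_items):
    """Return a list of item dicts, served from one cached JSON blob when possible.

    load_items plays the role of ndb.get_multi(item_keys) and is only
    called on a cache miss.
    """
    blob = cache.get(data_key)
    if blob is None:
        items = load_items()
        blob = json.dumps([{"name": item["name"]} for item in items])
        cache.add(data_key, blob)
    # One decode call for the whole set instead of one per entity.
    return json.loads(blob)
```

Calling `get_item_data` twice with the same `data_key` runs `load_items` only on the first call; the second call is served entirely from the single blob.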

This is interesting, but isn't really a solution for me since the set of items I need to fetch isn't static.

Is the performance I'm seeing typical/expected? All these measurements are on the default App Engine production config (F1 instance, shared memcache). Is it deserialization cost? Or is it the cost of fetching many keys from memcache? I don't think the issue is instance ramp-up time. I profiled the code line by line using time.clock() calls and see roughly similar numbers (3x faster than what App Stats reports, but still very slow). Here's a typical profile:

```
# Fetch keys: 20 ms
# ndb.get_multi: 500 ms
# Number of keys is 521, fetch time per key is 0.96 ms
```
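The per-key figure in that profile is just the get_multi time divided by the number of keys (500 ms / 521 ≈ 0.96 ms). A minimal sketch of how such a line-by-line profile could be collected with a small timing context manager, assuming Python 3: `time.perf_counter` replaces `time.clock()` (which was removed in Python 3.8), and `timed`, `per_key_ms` and `fake_get_multi` are hypothetical helpers, the last one merely standing in for `ndb.get_multi` so the example runs anywhere.

```python
import time
from contextlib import contextmanager


@contextmanager
def timed(label, results):
    """Record the wall-clock duration of the with-block under label, in ms."""
    start = time.perf_counter()
    try:
        yield
    finally:
        results[label] = (time.perf_counter() - start) * 1000.0


def per_key_ms(total_ms, num_keys):
    """Average fetch time per key, e.g. 500 ms over 521 keys."""
    return total_ms / num_keys


def fake_get_multi(keys):
    """Hypothetical stand-in for ndb.get_multi: returns one dict per key."""
    return [{"key": k} for k in keys]


if __name__ == "__main__":
    results = {}
    keys = list(range(521))
    with timed("get_multi", results):
        items = fake_get_multi(keys)
    print("get_multi: %.3f ms" % results["get_multi"])
    print("per key:   %.5f ms" % per_key_ms(results["get_multi"], len(items)))
```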

Update: Out of interest, I also profiled this with all the App Engine performance settings at their maximum (F4 instance, 2400 MHz, dedicated memcache). The performance wasn't much better. On the faster instance the App Stats timings now match my time.clock() profile (500 ms to fetch 500 small objects instead of 1500 ms). However, it still seems extremely slow.