I have compiled numpy 1.6.2 and scipy with MKL hoping to have a better performance. Currently I have a code that relies heavily on np.einsum(), and I was told that einsum is not good with MKL, because there is almost none vectorization. =( So I was thinking to re write some of my code with np.dot() and slicing, just to be able to get some multi-core speed up. I really like the simplicity of np.einsum() and the readability is good to. Anyway, for example, I have a multi-dimensional matrix multiplication of the form:

np.einsum('mi,mnijqk->njqk',A,B)

So how do I transform something like this, or others 3,4 and 5 dimensional array multiplications in np.dot() efficient MKL operations?

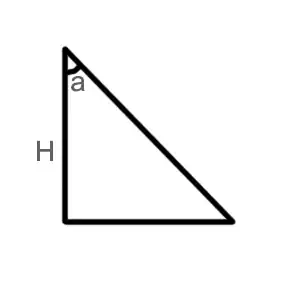

I will ad more info: I am computing this equation:

For doing this, I am using the code:

np.einsum('mn,mni,nij,nik,mi->njk',a,np.exp(b[:,:,np.newaxis]*U[np.newaxis,:,:]),P,P,X)

That is not that fast, same thing coded in cython is 5x times faster:

#STACKOVERFLOW QUESTION:

from __future__ import division

import numpy as np

cimport numpy as np

cimport cython

cdef extern from "math.h":

double exp(double x)

DTYPE = np.float

ctypedef np.float_t DTYPE_t

@cython.boundscheck(False) # turn of bounds-checking for entire function

def cython_DX_h(np.ndarray[DTYPE_t, ndim=3] P, np.ndarray[DTYPE_t, ndim=1] a, np.ndarray[DTYPE_t, ndim=1] b, np.ndarray[DTYPE_t, ndim=2] U, np.ndarray[DTYPE_t, ndim=2] X, int I, int M):

assert P.dtype == DTYPE and a.dtype == DTYPE and b.dtype == DTYPE and U.dtype == DTYPE and X.dtype == DTYPE

cdef np.ndarray[DTYPE_t,ndim=3] DX_h=np.zeros((N,I,I),dtype=DTYPE)

cdef unsigned int j,n,k,m,i

for n in range(N):

for j in range(I):

for k in range(I):

aux=0

for m in range(N):

for i in range(I):

aux+=a[m,n]*exp(b[m,n]*U[n,i])*P[n,i,j]*P[n,i,k]*X[m,i]

DX_h[n,j,k]=aux

return DX_h

Is there a way to do this in pure python with the performance of cython? (I havent been able to figure out how to tensordot this equation) Haven't been able to do prange in this cython code, lots gil and nogil errors.