I would turn the problem around and say that we are looking for headlights ABOVE a certain line, rather than saying that the headlights are below a certain line, i.e. the horizon.

Your images have a very strong reflection of the headlights on the tarmac, and we can use that to our advantage. We know that the maximum amount of light in the image is somewhere around the reflection and the headlights. We therefore look for the row with the maximum light, use that as our floor, and then look for headlights above this floor.

The idea here is to look at the intensity profile row by row and find the row with the maximum total intensity.
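To make the idea concrete, here is a minimal sketch on a small synthetic "night" frame. The frame size, the spot and band positions, and the function name `brightest_row` are all made up for illustration: a dark image with two small headlight spots and a wider, bright reflection band below them. Summing each row and taking the maximum lands on the reflection, and the headlights sit above that row.

```python
import numpy as np

def brightest_row(grey):
    """Return the index of the row with the largest total intensity."""
    # Sum across the columns (axis 1) to get one total per row
    row_totals = grey.sum(axis=1, dtype=np.float64)
    return int(np.argmax(row_totals))

# Hypothetical 100x200 night frame: almost black everywhere
frame = np.full((100, 200), 5, dtype=np.uint8)
# Two small headlight spots on rows 40-44
frame[40:45, 50:60] = 255
frame[40:45, 140:150] = 255
# A wide, bright reflection on the tarmac on rows 60-79
frame[60:80, 40:160] = 200

floor = brightest_row(frame)
print(floor)  # 60: first row of the reflection band (rows 60-79 tie)

# Rows that contain headlight pixels all lie above the floor
headlight_rows = np.where((frame[:floor] == 255).any(axis=1))[0]
print(headlight_rows)  # [40 41 42 43 44]
```

The reflection wins because it covers many more pixels per row than the headlights do, which is exactly why the method relies on a large reflection.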

This will only work with dark images (i.e. night) and where the reflection of the headlights onto the tarmac is large.

It will NOT work with images taken in daylight.
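Because the approach assumes a night scene, one option is to guard it with a cheap brightness check before running it. This is only a sketch: the function name `looks_like_night` and the threshold of 60 on the 0-255 grey scale are assumptions to tune on your own footage, not values taken from the images here.

```python
import numpy as np

def looks_like_night(grey, max_mean=60.0):
    """Heuristic: treat the frame as a night image if its mean grey level is low.

    `max_mean` is an assumed threshold on the 0-255 scale; tune it for your camera.
    """
    return float(np.mean(grey)) < max_mean

dark = np.full((10, 10), 20, dtype=np.uint8)
bright = np.full((10, 10), 180, dtype=np.uint8)
print(looks_like_night(dark))    # True
print(looks_like_night(bright))  # False
```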

I have written this in Python and OpenCV but I'm sure you can translate it to a language of your choice.

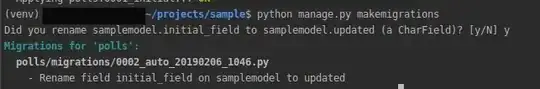

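```python
import cv2
import numpy as np

# Load the image. cv2.imread returns None (rather than raising) if the file cannot be read.
im = cv2.imread('headlights_at_night2.jpg')
if im is None:
    raise FileNotFoundError('could not read headlights_at_night2.jpg')

# Convert to grey.
grey_image = cv2.cvtColor(im, cv2.COLOR_BGR2GRAY)
```

Smooth the image heavily to mask out any local peaks or valleys. We are trying to smooth the headlights and the reflection so that there will be one clear peak. Ideally, the headlights and the reflection would merge into one area.

```python
# 15x15 box (mean) filter
grey_image = cv2.blur(grey_image, (15, 15))
```

Sum the intensities row by row:

```python
# np.sum accumulates the uint8 pixels in a wider integer type,
# so the row totals do not overflow
intensity_profile = []
for r in range(grey_image.shape[0]):
    intensity_profile.append(np.sum(grey_image[r, :]))
```

Smooth the profile and convert it to a NumPy array for easy handling of the data:

```python
# Moving average over `window` rows; mode 'same' keeps one value per image row
window = 10
weights = np.repeat(1.0, window) / window
profile = np.convolve(np.asarray(intensity_profile), weights, 'same')
```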
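Two notes on the profile code, shown on a small made-up array. First, the row loop can be replaced by a single vectorised `sum(axis=1)`, which gives the same totals. Second, `np.convolve(..., 'same')` returns one smoothed value per input row, so an index into the profile is still an image row. With an odd window the average is centred on that row; with an even window such as 10, the averaging window is centred half a row away from the output index.

```python
import numpy as np

# Small made-up grey image: 4 rows x 3 columns of uint8 values
grey = np.array([[10, 20, 30],
                 [200, 210, 220],
                 [250, 250, 250],
                 [5, 5, 5]], dtype=np.uint8)

# Row-by-row loop, as in the answer
loop_totals = [np.sum(grey[r, :]) for r in range(grey.shape[0])]
# Vectorised equivalent: sum across the columns of every row at once
vector_totals = grey.sum(axis=1)
print(vector_totals)  # [ 60 630 750  15]

# Moving average with an odd window (3): a single bright row at index 2
# is spread evenly over rows 1-3, and the output keeps all 5 rows
impulse = np.array([0.0, 0.0, 9.0, 0.0, 0.0])
window = 3
weights = np.repeat(1.0, window) / window
smoothed = np.convolve(impulse, weights, 'same')
print(smoothed)
```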

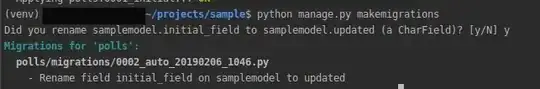

Find the maximum value of the profile. That represents the y-coordinate of the headlights and the reflection area. The heat map on the left shows the intensity distribution, and the graph on the right shows the total intensity per row.

We can clearly see that the sum of the intensities has a peak. Its y-coordinate is 371, indicated by a red dot in the heat map and a red dashed line in the graph.

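```python
max_value = profile.max()

# argmax gives the first row holding the maximum as a single integer,
# whereas where(profile == max_value)[0] would return an array of indices
horizon = int(profile.argmax())
```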

The blue curve in the right-most figure represents the variable `profile`.
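If you locate the peak with `where(profile == max_value)[0]`, note that it returns an *array* of row indices (more than one if several rows tie), whereas `argmax` returns a single integer: the first row holding the maximum. The sketch below shows the difference on a made-up profile with a flat top.

```python
import numpy as np

# Made-up smoothed profile: rows 2 and 3 share the maximum value
profile = np.array([1.0, 4.0, 9.0, 9.0, 2.0])
max_value = profile.max()

all_peak_rows = np.where(profile == max_value)[0]
first_peak_row = int(np.argmax(profile))

print(all_peak_rows)   # [2 3]
print(first_peak_row)  # 2
```

Using a single integer avoids surprises later when `horizon` is used to slice the image.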

The row where we find the maximum value is our floor, so we know that the headlights are above that line. We also know that most of the upper part of the image will be sky and therefore dark.
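Once the floor row is known, the headlight search can be restricted to the rows above it. As a minimal sketch (the function name `bright_pixels_above` and the fixed threshold of 230 are assumptions for illustration, not part of this answer), one could threshold the grey image above the floor and collect the bright pixel coordinates. Since the sky is dark, the bright pixels that remain are headlight candidates; grouping them into individual lights, e.g. with `cv2.connectedComponents`, would be a natural next step.

```python
import numpy as np

def bright_pixels_above(grey, floor_row, threshold=230):
    """Return (row, col) coordinates of pixels brighter than `threshold`
    in the rows strictly above `floor_row`."""
    region = grey[:floor_row]  # everything above the floor
    rows, cols = np.nonzero(region > threshold)
    return list(zip(rows.tolist(), cols.tolist()))

# Made-up 6x6 frame: one headlight pixel at (1, 2), the reflection on row 4
grey = np.zeros((6, 6), dtype=np.uint8)
grey[1, 2] = 255  # headlight, above the floor
grey[4, :] = 240  # bright reflection row (the floor)

print(bright_pixels_above(grey, floor_row=4))  # [(1, 2)]
```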

I display the result below.

I know that the lines in both images are at almost the same coordinates, but I think that is just a coincidence.