I'm having trouble calibrating two cameras: the first is RGB and the second is infrared. They have different resolutions (I resized and cropped the bigger image), focal lengths, etc.

Examples:

- RGB: 1920x1080
- Infrared: 512x424

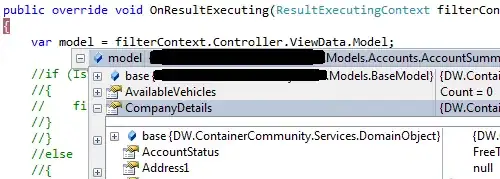

How do I calibrate them to each other? Which parameters should I use in `stereoCalibrate`? The default sample `stereo_calib.cpp` produces a very large error, something like this: https://www.dropbox.com/s/x57rrzp1ejm3cac/%D0%A1%D0%BA%D1%80%D0%B8%D0%BD%D1%88%D0%BE%D1%82%202014-04-05%2012.54.17.png

    done with RMS error=4.1026
    average reprojection err = 10.2601

UPDATE

I generated calibration parameters for each camera independently using the `calibration.cpp` example. For the RGB camera, I first resize and crop the image so that its resolution matches the IR camera (512x424), then calibrate. This gives `camera.yml` for the RGB camera and `camera_ir.yml` for the IR camera. Then I try to do stereo calibration using a modified `stereo_calib.cpp` example: before calling `stereoCalibrate`, I read the `camera_matrix` and `distortion_coefficients` parameters for both cameras from the files and pass these matrices to `stereoCalibrate`.

    // Load the intrinsics produced by calibration.cpp for each camera
    FileStorage rgbCamSettings("camera.yml", CV_STORAGE_READ);
    Mat rgbCameraMatrix;
    Mat rgbDistCoeffs;
    rgbCamSettings["camera_matrix"] >> rgbCameraMatrix;
    rgbCamSettings["distortion_coefficients"] >> rgbDistCoeffs;

    FileStorage irCamSettings("camera_ir.yml", CV_STORAGE_READ);
    Mat irCameraMatrix;
    Mat irDistCoeffs;
    irCamSettings["camera_matrix"] >> irCameraMatrix;
    irCamSettings["distortion_coefficients"] >> irDistCoeffs;

    // Index 0 = RGB camera, index 1 = IR camera
    Mat cameraMatrix[2], distCoeffs[2];
    cameraMatrix[0] = rgbCameraMatrix;
    cameraMatrix[1] = irCameraMatrix;
    distCoeffs[0] = rgbDistCoeffs;
    distCoeffs[1] = irDistCoeffs;

    // Stereo calibration using the pre-computed intrinsics
    Mat R, T, E, F;
    double rms = stereoCalibrate(objectPoints, imagePoints[0], imagePoints[1],
                                 cameraMatrix[0], distCoeffs[0],
                                 cameraMatrix[1], distCoeffs[1],
                                 imageSize, R, T, E, F,
                                 TermCriteria(CV_TERMCRIT_ITER+CV_TERMCRIT_EPS, 50, 1e-6),
                                 CV_CALIB_FIX_INTRINSIC +
                                 CV_CALIB_USE_INTRINSIC_GUESS);