I am not able to find anything about gradient ascent. Any good link about gradient ascent demonstrating how it is different from gradient descent would help.

-

Gradient descent is like dropping a marble into an oddly shaped bowl, whereas gradient ascent is like releasing a lighter-than-air balloon inside an oddly shaped dome tent. The only difference is the direction in which the marble/balloon is nudged, and where it ultimately stops moving. Here is a working example of gradient descent written in GNU Octave: https://github.com/schneems/Octave/blob/master/mlclass-ex4/mlclass-ex4/fmincg.m – Eric Leschinski Apr 26 '21 at 13:40

-

Gradient descent solves a minimization problem. Change the sign, make it a maximization problem, and now you're using gradient ascent. – duffymo Apr 26 '21 at 13:52

-

Gradient descent is an iterative procedure that treats your function as a surface and moves all input variables step by step until the model converges on an optimum. "The gradient" is the set of all partial derivatives, describing the slope of the surface at the current point. A blind man can climb a mountain if he keeps taking a step up until he can't anymore. Pursue a master's degree in CS and ML and this will be coursework. – Eric Leschinski Apr 26 '21 at 14:25

6 Answers

It is not different. Gradient ascent is just the process of maximizing, instead of minimizing, a loss function. Everything else is entirely the same. Ascent for some loss function, you could say, is like gradient descent on the negative of that loss function.
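As a minimal sketch of that equivalence, the snippet below runs gradient ascent on a toy concave function and gradient descent on its negative. The function `f`, the learning rate, and the step count are illustrative assumptions, not taken from the thread.

```python
# Hypothetical toy objective: f(x) = -(x - 3)^2 + 5, maximized at x = 3.
def grad_f(x):
    """Derivative of f(x) = -(x - 3)^2 + 5."""
    return -2.0 * (x - 3.0)


def grad_neg_f(x):
    """Derivative of -f(x), i.e. the 'loss' version of the same problem."""
    return -grad_f(x)


def gradient_ascent(grad, x0, lr=0.1, steps=100):
    """Step along the gradient to climb toward a maximum."""
    x = x0
    for _ in range(steps):
        x = x + lr * grad(x)
    return x


def gradient_descent(grad, x0, lr=0.1, steps=100):
    """Step against the gradient to move toward a minimum."""
    x = x0
    for _ in range(steps):
        x = x - lr * grad(x)
    return x


# Ascent on f and descent on -f follow the same path to the same point.
x_ascent = gradient_ascent(grad_f, x0=0.0)
x_descent = gradient_descent(grad_neg_f, x0=0.0)
print(x_ascent, x_descent)
```

Both runs end up near x = 3, because flipping the sign of the function and the sign of the step cancel each other out.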


-

Basically, in gradient descent you're minimizing errors, whereas in gradient ascent you're maximizing profit – Kiril Stanoev May 17 '17 at 16:31

-

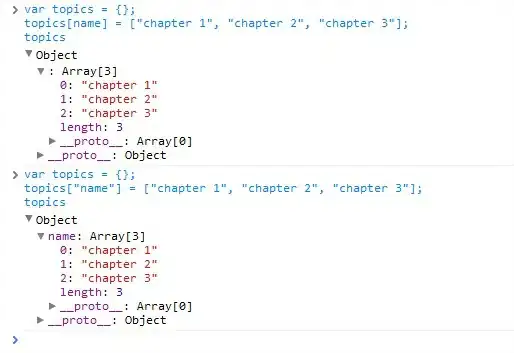

Typically, you'd use gradient ascent to maximize a likelihood function, and gradient descent to minimize a cost function. Gradient descent and gradient ascent are practically the same. Let me give you a concrete example using a simple algorithm that is friendly to gradient-based optimization and has a concave/convex likelihood/cost function: logistic regression.

Unfortunately, SO still doesn't seem to support LaTeX, so let me post a few screenshots.

The likelihood function that you want to maximize in logistic regression is

where "phi" is simply the sigmoid function

Now, you want a concave function for gradient ascent, so take the log:

Similarly, you can just write it as its negative to get the cost function that you can minimize via gradient descent.

For the log-likelihood, you'd derive and apply the gradient ascent as follows:

Since you'd want to update all weights simultaneously, let's write it as

Now, it should be easy to see that the gradient descent update is the same as the gradient ascent one; just keep in mind that we are formulating it as "taking a step in the opposite direction of the gradient of the cost function".
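The screenshots this answer refers to are not reproduced here. As a hedged reconstruction, the standard logistic regression formulas that match each step are sketched below. The notation is an assumption rather than the answer's confirmed one: $z^{(i)} = \mathbf{w}^T \mathbf{x}^{(i)}$, labels $y^{(i)} \in \{0, 1\}$, and learning rate $\eta$.

$$
\begin{aligned}
L(\mathbf{w}) &= \prod_{i=1}^{n} \phi\big(z^{(i)}\big)^{y^{(i)}} \Big(1 - \phi\big(z^{(i)}\big)\Big)^{1 - y^{(i)}}, \qquad \phi(z) = \frac{1}{1 + e^{-z}} \\
l(\mathbf{w}) &= \log L(\mathbf{w}) = \sum_{i=1}^{n} \Big[ y^{(i)} \log \phi\big(z^{(i)}\big) + \big(1 - y^{(i)}\big) \log\Big(1 - \phi\big(z^{(i)}\big)\Big) \Big] \\
J(\mathbf{w}) &= -\,l(\mathbf{w}) \\
\frac{\partial l}{\partial w_j} &= \sum_{i=1}^{n} \Big( y^{(i)} - \phi\big(z^{(i)}\big) \Big) x_j^{(i)} \\
w_j &:= w_j + \eta \sum_{i=1}^{n} \Big( y^{(i)} - \phi\big(z^{(i)}\big) \Big) x_j^{(i)} \\
\mathbf{w} &:= \mathbf{w} + \Delta \mathbf{w}, \qquad \Delta \mathbf{w} = \eta \, \nabla l(\mathbf{w}) = -\,\eta \, \nabla J(\mathbf{w})
\end{aligned}
$$

The last line is the point of the answer: because $J = -l$, a step along $\nabla l$ (ascent) is the same step as one against $\nabla J$ (descent).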

Hope that answers your question!
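For readers who prefer code, here is a minimal pure-Python sketch of the same idea: fitting logistic regression once by gradient ascent on the log-likelihood and once by gradient descent on its negative. The toy data, the learning rate `eta`, the epoch count, and all function names are assumptions made for illustration, not part of the original answer.

```python
import math


def sigmoid(z):
    # Logistic sigmoid ("phi" in the answer).
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, x):
    # Predicted probability of class 1 for one sample.
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)))


def log_likelihood(w, X, y):
    # Sum over samples of y*log(p) + (1 - y)*log(1 - p).
    total = 0.0
    for xi, yi in zip(X, y):
        p = predict(w, xi)
        total += yi * math.log(p) + (1 - yi) * math.log(1.0 - p)
    return total


def grad_log_likelihood(w, X, y):
    # Gradient of the log-likelihood: sum_i (y_i - phi(z_i)) * x_i.
    grad = [0.0] * len(w)
    for xi, yi in zip(X, y):
        error = yi - predict(w, xi)
        for j, xj in enumerate(xi):
            grad[j] += error * xj
    return grad


def grad_cost(w, X, y):
    # Gradient of the cost J(w) = -log_likelihood(w).
    return [-g for g in grad_log_likelihood(w, X, y)]


def fit(X, y, grad, sign, eta=0.05, epochs=500):
    # sign=+1 steps along the gradient (ascent), sign=-1 against it (descent).
    w = [0.0] * len(X[0])
    for _ in range(epochs):
        g = grad(w, X, y)
        w = [wj + sign * eta * gj for wj, gj in zip(w, g)]
    return w


# Hypothetical toy data; the first column is a constant 1 for the bias term.
X = [[1.0, 0.5], [1.0, 1.0], [1.0, 1.5], [1.0, 3.0], [1.0, 3.5], [1.0, 4.0]]
y = [0, 0, 1, 0, 1, 1]

w_ascent = fit(X, y, grad_log_likelihood, sign=+1)
w_descent = fit(X, y, grad_cost, sign=-1)
print(w_ascent, w_descent)
```

Both calls produce the same weights, since the sign flip in the objective and the sign flip in the update cancel.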

-

I have asked something related to this here: http://stats.stackexchange.com/questions/261692/gradient-descent-for-logistic-regression-with-or-without-f-prime – VansFannel Feb 14 '17 at 08:34

Gradient Descent is used to minimize a particular function whereas gradient ascent is used to maximize a function.

Check this out http://pandamatak.com/people/anand/771/html/node33.html


Gradient ascent maximizes a function. It is used, for example, in reinforcement learning, and it follows the upward slope, so the value of the objective increases.

Gradient descent minimizes a cost function. It is used, for example, in linear regression, and it follows the downward slope, so the value of the cost function decreases.


If you want to minimize a function, you use gradient descent. For example, in deep learning we want to minimize the loss function, hence we use gradient descent.

If you want to maximize a function, you use gradient ascent. For example, in policy gradient methods in reinforcement learning, the goal is to maximize the reward/expected return function, hence we use gradient ascent.


Gradient is another word for slope. A positive gradient of the graph at a point (x,y) means that the graph slopes upwards at that point. On the other hand, a negative gradient at a point (x,y) means that the graph slopes downwards at that point.

Gradient descent is an iterative algorithm that is used to find a set of theta that minimizes the value of a cost function. Gradient ascent, in turn, would produce a set of theta that maximizes the value of the function it is applied to (in practice usually an objective such as a likelihood rather than a cost).
