I'm running R version 3.0.2 in RStudio, and Excel 2011 for Mac OS X. I'm performing a quantile normalization across 4 sets of 45,015 values each. Yes, I know about the Bioconductor package, but my question is much more general: it could apply to any other computation. When I perform the computation (1) "by hand" in Excel and (2) with a program I wrote from scratch in R, I get highly similar, yet not identical, results. Typically, the values obtained with (1) and (2) differ by less than 1.0%, although sometimes by more.

Where is this variation likely to come from, and what should I be aware of regarding numerical approximation in R and/or Excel? Does it come from limited floating-point precision in either of these programs? How can I avoid it?
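For scale, here is a minimal illustration of floating-point rounding in R. R stores numeric values as IEEE 754 double-precision numbers (roughly 15 to 16 significant decimal digits), and Excel also computes in double precision internally while displaying at most 15 significant digits. The rounding error of a single operation is therefore on the order of 1e-16 relative, far below a 1% difference. The snippet shows that changing the order of a sum only alters the last bit of the result, and that `all.equal()` compares numbers with a tolerance instead of exact equality.

```r
# Machine epsilon: the gap between 1 and the next larger double (2^-52)
eps <- .Machine$double.eps
print(eps)

# Floating-point addition is not associative: grouping changes the last bit
x <- (0.1 + 0.2) + 0.3
y <- 0.1 + (0.2 + 0.3)
print(identical(x, y))   # FALSE
print(abs(x - y))        # one unit in the last place, about 1.1e-16

# Compare with a tolerance (default is sqrt(eps), about 1.5e-8) instead of ==
print(isTRUE(all.equal(x, y)))   # TRUE
```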

[EDIT] As suggested in the comments, this may be case-specific. To provide some context, I describe methods (1) and (2) in detail below, using test data with 9 rows. The four data sets are called A, B, C, and D.

[POST-EDIT COMMENT] When I run this on the small test data set (9 rows), the results in R and Excel do not differ. But when I apply the same procedure to the real data (45,015 rows), I get slight differences between R and Excel. I have no idea why that might be.

(2) R code:

Data frame A:

| Aindex | A |
|---|---|
| 1 | 2.1675e+05 |
| 2 | 9.2225e+03 |
| 3 | 2.7925e+01 |
| 4 | 7.5775e+02 |
| 5 | 8.0375e+00 |
| 6 | 1.3000e+03 |
| 7 | 8.0575e+00 |
| 8 | 1.5700e+02 |
| 9 | 8.1275e+01 |

Data frame B:

| Bindex | B |
|---|---|
| 1 | 215250.000 |
| 2 | 10090.000 |
| 3 | 17.125 |
| 4 | 750.500 |
| 5 | 8.605 |
| 6 | 1260.000 |
| 7 | 7.520 |
| 8 | 190.250 |
| 9 | 67.350 |

Data frame C:

| Cindex | C |
|---|---|
| 1 | 2.0650e+05 |
| 2 | 9.5625e+03 |
| 3 | 2.1850e+01 |
| 4 | 1.2083e+02 |
| 5 | 9.7400e+00 |
| 6 | 1.3675e+03 |
| 7 | 9.9325e+00 |
| 8 | 1.9675e+02 |
| 9 | 7.4175e+01 |

Data frame D:

| Dindex | D |
|---|---|
| 1 | 207500.0000 |
| 2 | 9927.5000 |
| 3 | 16.1250 |
| 4 | 820.2500 |
| 5 | 10.3025 |
| 6 | 1400.0000 |
| 7 | 120.0100 |
| 8 | 175.2500 |
| 9 | 76.8250 |
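To make the example reproducible, the 9-row test data listed above can be recreated as follows. This assumes the index columns are the integers 1 to 9 and that the printed values are exact; `print()` rounds to 7 significant digits by default, so the original objects could carry more digits than shown.

```r
# Recreate the four 9-row test data frames: an index column plus a value column
A <- data.frame(Aindex = 1:9,
                A = c(2.1675e+05, 9.2225e+03, 2.7925e+01, 7.5775e+02, 8.0375e+00,
                      1.3000e+03, 8.0575e+00, 1.5700e+02, 8.1275e+01))
B <- data.frame(Bindex = 1:9,
                B = c(215250.000, 10090.000, 17.125, 750.500, 8.605,
                      1260.000, 7.520, 190.250, 67.350))
C <- data.frame(Cindex = 1:9,
                C = c(2.0650e+05, 9.5625e+03, 2.1850e+01, 1.2083e+02, 9.7400e+00,
                      1.3675e+03, 9.9325e+00, 1.9675e+02, 7.4175e+01))
D <- data.frame(Dindex = 1:9,
                D = c(207500.0000, 9927.5000, 16.1250, 820.2500, 10.3025,
                      1400.0000, 120.0100, 175.2500, 76.8250))
```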

Code:

```r
# re-order by ascending values
A <- A[order(A$A), , drop = FALSE]
B <- B[order(B$B), , drop = FALSE]
C <- C[order(C$C), , drop = FALSE]
D <- D[order(D$D), , drop = FALSE]
row.names(A) <- NULL
row.names(B) <- NULL
row.names(C) <- NULL
row.names(D) <- NULL

# compute average
qnorm <- data.frame(cbind(A$A, B$B, C$C, D$D))
colnames(qnorm) <- c("A", "B", "C", "D")
qnorm$qnorm <- (qnorm$A + qnorm$B + qnorm$C + qnorm$D) / 4

# replace original values by average values
A$A <- qnorm$qnorm
B$B <- qnorm$qnorm
C$C <- qnorm$qnorm
D$D <- qnorm$qnorm

# re-order by index number
A <- A[order(A$Aindex), , drop = FALSE]
B <- B[order(B$Bindex), , drop = FALSE]
C <- C[order(C$Cindex), , drop = FALSE]
D <- D[order(D$Dindex), , drop = FALSE]
row.names(A) <- NULL
row.names(B) <- NULL
row.names(C) <- NULL
row.names(D) <- NULL
```
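For comparison, the same procedure can be written more compactly by keeping the data sets as the columns of one numeric matrix. This is a sketch, not the code used above. The function name `quantile_normalize` is hypothetical, and the sketch assumes there are no missing values. It sorts each column, takes the row means of the sorted matrix as the reference distribution (the `qnorm` column above), and writes the reference values back in each column's original order.

```r
# Quantile-normalize the columns of a numeric matrix (no NAs assumed).
# Usage with the data frames above, for example:
#   m <- cbind(A = A$A, B = B$B, C = C$C, D = D$D)
quantile_normalize <- function(m) {
  # Sort every column independently; row means give the reference values
  sorted <- apply(m, 2, sort)
  ref <- rowMeans(sorted)
  out <- m
  for (j in seq_len(ncol(m))) {
    # The k-th smallest value of column j is replaced by the k-th reference value
    out[order(m[, j]), j] <- ref
  }
  out
}

# Toy example (illustrative values only)
m <- cbind(c(5, 2, 3), c(4, 1, 6))
res <- quantile_normalize(m)
print(res)
```

When a column contains tied values, the result depends on how ties are broken: `order()` is stable, so tied values keep their original order, as in the data-frame code above. Also, `rowMeans()` may accumulate sums in extended precision, so its results can differ from `(A + B + C + D) / 4` in the last bits; `all.equal()` treats such differences as equal.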

(1) Excel

- assign index numbers to each set.

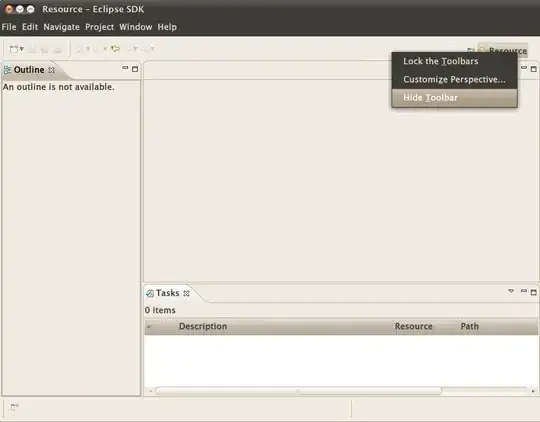

- re-order each set in ascending order: select the columns two by two and use Custom Sort... by A, B, C, or D:

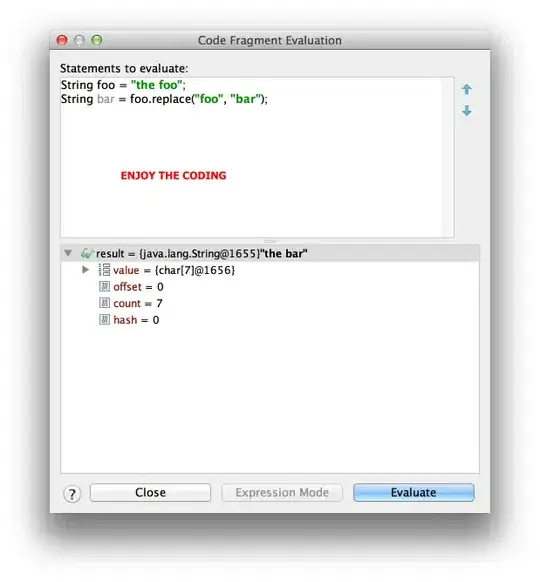

- calculate the row-wise average of columns A, B, C, and D in a new `average` column:

- replace the values in columns A, B, C, and D with those in the `average` column using Paste Special... > Values:

- re-order everything according to the original index numbers:
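To quantify the discrepancy between the two methods, a small helper along these lines could compare the R output with values exported from Excel (for example as CSV) and read back into R. The function name `compare_results`, its `tol_pct` parameter, and the file name in the usage comment are hypothetical, and missing values are assumed absent. It reports whether the vectors are bit-for-bit identical, whether they agree within `all.equal()`'s default tolerance, the largest relative difference in percent, and the position of the first value that differs.

```r
# Compare R results with Excel results, element by element.
# Usage (hypothetical file name):
#   excel <- read.csv("excel_results.csv")
#   compare_results(A$A, excel$A)
compare_results <- function(r_vals, excel_vals, tol_pct = 1e-8) {
  stopifnot(length(r_vals) == length(excel_vals))
  abs_diff <- abs(r_vals - excel_vals)
  denom <- pmax(abs(r_vals), abs(excel_vals))
  # Relative difference in percent; defined as 0 where both values are 0
  rel_pct <- ifelse(denom == 0, 0, 100 * abs_diff / denom)
  differing <- which(rel_pct > tol_pct)
  list(
    identical       = identical(r_vals, excel_vals),
    all_equal       = isTRUE(all.equal(r_vals, excel_vals)),
    max_rel_pct     = max(rel_pct),
    n_differing     = length(differing),
    first_differing = if (length(differing) > 0) differing[1] else NA_integer_
  )
}

# Toy example (illustrative values only)
r <- c(1, 2, 3, 100)
e <- c(1, 2, 3.03, 100)
res <- compare_results(r, e)
str(res)
```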