Problem Explanation

I am currently implementing point lights for a deferred renderer, and I am having trouble determining the source of heavy pixelization/triangulation that is only noticeable near the borders of lights.

The problem appears to be caused by a loss of precision somewhere, but I have been unable to track down the exact source. Normals are an obvious suspect, but a classmate who is using DirectX handles his normals in a similar manner and has no such issues.

From about 2 meters away in our game's units (64 units/meter):

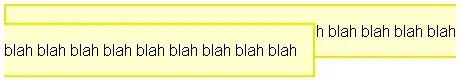

A few centimeters away. Note that the "pixelization" does not change size in the world as I approach it. However, it will appear to swim if I change the camera's orientation:

A comparison with a closeup from my forward renderer, which shows the spherical banding one would expect with an RGBA8 render target (only 256 possible values per color channel). Note that in my deferred picture the back walls exhibit the same normal spherical banding:

The light volume is shown here as the green wireframe:

As can be seen the effect isn't visible unless you get close to the surface (around one meter in our game's units).

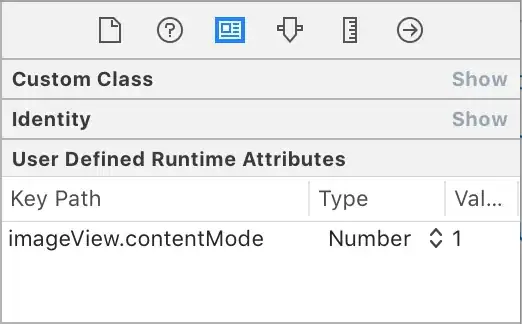

Position reconstruction

First, I should mention that I am using a spherical mesh so that I only shade the portion of the screen that the light overlaps. I render only the back faces of the sphere, and only where their depth is greater than or equal to the value in the depth buffer, as suggested here.

To reconstruct the camera-space position of a fragment, I take the vector from the camera to the camera-space fragment on the light volume, normalize it, and scale it by the linear depth stored in my gbuffer. This is sort of a hybrid of the methods discussed here (using linear depth) and here (spherical light volumes).
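To make the reconstruction step concrete, here is a minimal CPU-side sketch of the same math in C++. It is not my actual shader: `Vec3`, `reconstruct_position`, and `reconstruct_position_from_depth` are hypothetical names used only for illustration. The first function assumes the gbuffer value is the Euclidean distance from the camera (as the name `e_dist_32f` suggests). The second shows the variant that would be needed if the stored value were linear view-space depth instead, assuming the OpenGL convention that the camera looks down -z.

```cpp
#include <cmath>

// Minimal 3-component vector; a stand-in for whatever math library is in use.
struct Vec3 { float x, y, z; };

inline float length(const Vec3& v)
{
    return std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
}

inline Vec3 scale(const Vec3& v, float s)
{
    return Vec3{ v.x * s, v.y * s, v.z * s };
}

// volume_pos_cs: camera-space position of the light-volume fragment.
// stored_dist:   gbuffer value, ASSUMED to be Euclidean distance from the camera.
// Normalizes the view ray and scales it by the stored distance.
inline Vec3 reconstruct_position(const Vec3& volume_pos_cs, float stored_dist)
{
    const float len = length(volume_pos_cs);
    if (len == 0.0f)
        return Vec3{ 0.0f, 0.0f, 0.0f }; // degenerate ray, nothing to reconstruct
    return scale(volume_pos_cs, stored_dist / len);
}

// Variant for a gbuffer that stores linear view-space depth (-z in camera space,
// ASSUMING the OpenGL convention). The ray is rescaled so that its -z component
// equals the stored depth; it is not normalized.
inline Vec3 reconstruct_position_from_depth(const Vec3& volume_pos_cs, float linear_depth)
{
    return scale(volume_pos_cs, linear_depth / -volume_pos_cs.z);
}
```

The two variants only agree along the view axis, so mixing them up (normalizing the ray but scaling by view-space depth, or the reverse) would give positions that are increasingly wrong toward the edges of the screen.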

Geometry Buffer

My gBuffer setup is:

enum render_targets
{
    e_dist_32f = 0,
    e_diffuse_rgb8,
    e_norm_xyz8_specpow_a8,
    e_light_rgb8_specintes_a8,
    num_rt
};

//...

GLint internal_formats[num_rt] = { GL_R32F, GL_RGBA8, GL_RGBA8, GL_RGBA8 };
GLint formats[num_rt] = { GL_RED, GL_RGBA, GL_RGBA, GL_RGBA };
GLint types[num_rt] = { GL_FLOAT, GL_FLOAT, GL_FLOAT, GL_FLOAT };

for(uint i = 0; i < num_rt; ++i)
{
    glBindTexture(GL_TEXTURE_2D, _render_targets[i]);
    glTexImage2D(GL_TEXTURE_2D, 0, internal_formats[i], _width, _height, 0, formats[i], types[i], nullptr);
}

// Separate non-linear depth buffer used for depth testing
glBindTexture(GL_TEXTURE_2D, _depth_tex_id);
glTexImage2D(GL_TEXTURE_2D, 0, GL_DEPTH_COMPONENT32, _width, _height, 0, GL_DEPTH_COMPONENT, GL_FLOAT, nullptr);
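Since the normals live in an RGBA8 target, it may help to put a number on how much precision that costs. The following is a rough sketch, not code from my renderer: `Normal`, `encode_unorm8`, `decode_unorm8`, and `roundtrip_angle_error` are hypothetical helpers, and the `n * 0.5 + 0.5` packing is an assumption about how the normal is written to the target.

```cpp
#include <algorithm>
#include <cmath>
#include <cstdint>

// Hypothetical unit-normal type used only for this sketch.
struct Normal { float x, y, z; };

// Maps one component from [-1, 1] to an 8-bit UNORM value,
// ASSUMING the usual n * 0.5 + 0.5 packing into a GL_RGBA8 channel.
inline std::uint8_t encode_unorm8(float c)
{
    const float biased = std::min(1.0f, std::max(0.0f, c * 0.5f + 0.5f));
    return static_cast<std::uint8_t>(std::lround(biased * 255.0f));
}

// Inverse mapping, as the lighting pass would apply after sampling.
inline float decode_unorm8(std::uint8_t v)
{
    return (static_cast<float>(v) / 255.0f) * 2.0f - 1.0f;
}

// Round-trips a unit normal through 8 bits per component and returns the
// angle in radians between the original and the renormalized result.
inline float roundtrip_angle_error(const Normal& n)
{
    const double dx = decode_unorm8(encode_unorm8(n.x));
    const double dy = decode_unorm8(encode_unorm8(n.y));
    const double dz = decode_unorm8(encode_unorm8(n.z));
    const double len = std::sqrt(dx * dx + dy * dy + dz * dz);
    if (len == 0.0)
        return 0.0f; // cannot renormalize a zero vector
    const double cos_angle = (dx * n.x + dy * n.y + dz * n.z) / len;
    return static_cast<float>(std::acos(std::min(1.0, std::max(-1.0, cos_angle))));
}
```

Under this packing each component is off by at most 1/255 after the round trip, and a component of exactly 0 is not representable. Whether an error of that size is enough to explain the artifacts above is precisely what I have not been able to confirm.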