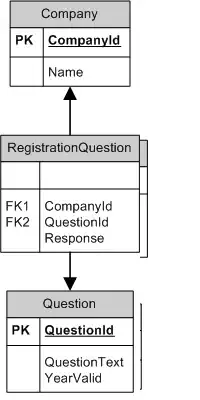

I am writing a lights/shadows system for my game using Java and LWJGL. For each light-emitting entity I generate a texture like this:

Note that every pixel in these textures has the color (0, 0, 1) or (1, 0, 0); the gradient effect comes entirely from the alpha channel, which I interpret as a gradient factor.

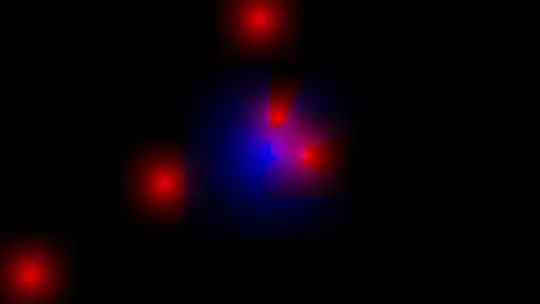

Afterwards, I want to blend all the light/shadow textures together into a single texture, each at its correct position. For that, I use a framebuffer. I tried to achieve the desired effect with the following blend equation/function combination:

glBlendEquationSeparateEXT(GL_FUNC_ADD, GL_MAX);

glBlendFuncSeparateEXT(GL_SRC_ALPHA, GL_DST_ALPHA, GL_ONE, GL_ONE);
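To make the arithmetic concrete, here is a minimal CPU-side sketch of what I understand these two calls to ask the blender to compute for one fragment, assuming a fixed-point framebuffer that clamps to [0, 1]. The class `BlendSketch` and its method names are made up for illustration only; they are not part of LWJGL or of my game.

```java
import java.util.Arrays;

// Hypothetical helper: simulates the blend state above on the CPU.
final class BlendSketch {

    // Blends one source fragment into one destination pixel.
    // Both arrays are {r, g, b, a} with components in [0, 1].
    static float[] blend(float[] src, float[] dst) {
        float sa = src[3];
        float da = dst[3];
        float[] out = new float[4];
        // RGB: GL_FUNC_ADD with factors GL_SRC_ALPHA / GL_DST_ALPHA,
        // i.e. out = src * Sa + dst * Da.
        for (int i = 0; i < 3; i++) {
            out[i] = clamp(src[i] * sa + dst[i] * da);
        }
        // Alpha: GL_MAX ignores the GL_ONE / GL_ONE factors.
        out[3] = Math.max(sa, da);
        return out;
    }

    // Assumes a normalized fixed-point color buffer.
    private static float clamp(float v) {
        return Math.max(0f, Math.min(1f, v));
    }

    public static void main(String[] args) {
        float[] dst = {0f, 0f, 0f, 0f};          // cleared framebuffer
        float[] red = {1f, 0f, 0f, 0.5f};        // first light
        float[] blue = {0f, 0f, 1f, 0.25f};      // second light, overlapping
        dst = blend(red, dst);
        dst = blend(blue, dst);
        System.out.println(Arrays.toString(dst)); // [0.25, 0.0, 0.25, 0.5]
    }
}
```

In this sketch the red contribution, already stored as 0.5, is scaled again by the destination alpha (0.5) when the blue light is drawn, so it ends up as 0.25.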

I chose GL_ONE/GL_ONE for the alpha channel blend function arbitrarily, because GL_MAX only computes max(Sa, Da), as stated here, which means that the scaling factors are not used. The result of this combination is the following:

This image was obtained with Apple's OpenGL Driver Profiler, so I did not render it using my application (which could affect the final result). The next step would be to render this texture over the actual game using multiply blending, in order to darken the image. However, the lights/shadows texture is obviously wrong: the edges of the individual light/shadow textures are visible where they overlap each other.

How should I proceed to achieve the desired result?

Edit: I forgot to explain my choices for the scaling factors: I think it would be right to simply add the colors of the lights (weighting each of them by its respective alpha value) and to choose the alpha of the final fragment to be the largest alpha among the overlapping lights.
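Written as a formula, the result I have in mind for a pixel covered by lights $i = 1, \dots, n$ would be roughly the following. The symbols $C_i$ (color of light $i$) and $A_i$ (its alpha at that pixel) are notation introduced here only for illustration:

$$
C_{\text{out}} = \sum_{i=1}^{n} A_i \, C_i, \qquad A_{\text{out}} = \max_{1 \le i \le n} A_i
$$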