Table products:

id int primary_key

Table transactions:

product_id int references products

The below SQL query is very slow:

SELECT products.*

FROM products

LEFT JOIN transactions

ON ( products.id = transactions.product_id )

WHERE transactions.product_id IS NULL;

Out of 100 hundred million products records, there might be only 100 records where a product has no corresponding transactions.

This query is very slow as I suspect it is doing a full table scan to find those null foreign key product records.

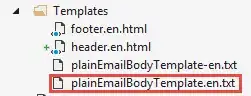

I want to create a partial index like (pseudo-code):

CREATE INDEX products_with_no_transactions_index

ON (Left JOIN TABLE

BETWEEN products AND transactions)

WHERE transactions.product_id IS NULL;

Is the above possible and how would I go about it?

Some characteristics of this data set:

Transactions are never deleted and only added.

Products are never deleted but added at a rate of 100s per minute (obviously this is a made up example behind a much more complex actual use case). A small percentage of those are temporarily orphaned.

I need to frequently query (up to once per minute) and need to always know what the current set of orphaned products are.