Scikit-learn utilizes a very convenient approach based on fit and predict methods. I have time-series data in the format suited for fit and predict.

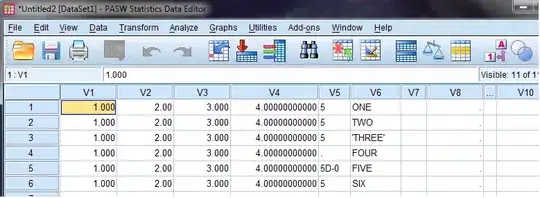

For example, I have the following Xs:

[[1.0, 2.3, 4.5], [6.7, 2.7, 1.2], ..., [3.2, 4.7, 1.1]]

and the corresponding ys:

[[1.0], [2.3], ..., [7.7]]

These data have the following meaning. The values stored in ys form a time series. The values in Xs are the corresponding time-dependent "factors" that are known to have some influence on the values in ys (for example: temperature, humidity and atmospheric pressure).

Now, of course, I can use fit(Xs, ys). But then I get a model in which future values in ys depend only on the factors and do not depend on the previous Y values (at least not directly), which is a limitation of the model. I would like to have a model in which Y_n also depends on Y_{n-1}, Y_{n-2}, and so on. For example, I might want to use an exponential moving average as a model. What is the most elegant way to do this in scikit-learn?

ADDED

As has been mentioned in the comments, I can extend Xs by adding ys. But this approach has some limitations. For example, if I add the last 5 values of y as 5 new columns to X, the information about the time ordering of the ys is lost: nothing in X indicates that the value in the 5th column follows the value in the 4th column, and so on. As a model, I might want to fit a line through the last five ys and use the fitted linear function to make a prediction. But if the 5 values are just 5 columns, this is not so trivial.
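
To be concrete about what extending Xs with lagged ys looks like, here is a minimal sketch. The helper name add_lagged_targets, the column layout, and the synthetic data are my own assumptions for illustration, not anything scikit-learn provides.

```python
import numpy as np
from sklearn.linear_model import LinearRegression


def add_lagged_targets(X, y, n_lags=5):
    """Hypothetical helper: append y_{n-1}, ..., y_{n-n_lags} to row n of X.

    The first n_lags rows are dropped because they lack a full history.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float).ravel()
    # Column j of `lags` holds y_{n-1-j} for every kept row n.
    lags = np.column_stack(
        [y[n_lags - 1 - j : len(y) - 1 - j] for j in range(n_lags)]
    )
    return np.hstack([X[n_lags:], lags]), y[n_lags:]


# Synthetic stand-in data: 10 time steps, 3 factors.
X_demo = np.arange(30, dtype=float).reshape(10, 3)
y_demo = np.arange(10, dtype=float)

X_lagged, y_lagged = add_lagged_targets(X_demo, y_demo, n_lags=5)
lag_model = LinearRegression().fit(X_lagged, y_lagged)
```

Each lag gets its own coefficient here, but the estimator itself has no notion that these columns are consecutive points of one series, which is the limitation I mean.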

ADDED 2

To make my problem clearer, I would like to give one concrete example. I would like to have a "linear" model in which y_n = c + k1*x1 + k2*x2 + k3*x3 + k4*EMOV_n, where EMOV_n is just an exponential moving average. How can I implement this simple model in scikit-learn?
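
The closest I can get by hand is to precompute the moving average as one extra column and fit an ordinary linear regression on it. The sketch below rests on several assumptions of mine: EMOV_n is built only from y values before step n, the smoothing factor alpha is fixed by hand, the helper lagged_ema is hypothetical, and the data are synthetic.

```python
import numpy as np
from sklearn.linear_model import LinearRegression


def lagged_ema(y, alpha=0.3):
    """Hypothetical helper: EMA of y, shifted so entry i uses only y_0..y_i.

    Returns len(y) - 1 values; entry i is the feature for time step i + 1.
    """
    y = np.asarray(y, dtype=float).ravel()
    ema = np.empty_like(y)
    ema[0] = y[0]
    for n in range(1, len(y)):
        ema[n] = alpha * y[n] + (1 - alpha) * ema[n - 1]
    # Drop the last value: there is no later step for it to predict.
    return ema[:-1]


# Synthetic stand-in data: 200 time steps, 3 factors.
rng = np.random.default_rng(0)
Xs = rng.normal(size=(200, 3))
ys = np.cumsum(rng.normal(size=200))

emov = lagged_ema(ys, alpha=0.3)
X_ext = np.column_stack([Xs[1:], emov])  # columns: x1, x2, x3, EMOV_n
y_ext = ys[1:]                           # first step has no history

model = LinearRegression().fit(X_ext, y_ext)
c = model.intercept_
k1, k2, k3, k4 = model.coef_
```

This recovers c and k1..k4, but alpha is not learned by the fit and the feature has to be rebuilt outside the estimator before every predict call, so I am not sure it counts as an elegant solution.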