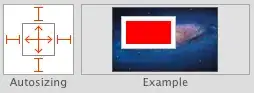

I have a large CSV file (25 MB) that represents a symmetric graph (about 18k x 18k). While parsing it into an array of vectors, I profiled the code with the VS2012 analyzer. It shows that the parsing bottleneck (about 19 seconds in total) is the character-by-character reading (getline::basic_string::operator+=), as shown in the profiler screenshot.

This is frustrating, because in Java a simple buffered line-by-line file read plus a tokenizer does the same job in less than half a second.

My code uses only the standard library (STL):

    int allColumns = initFirstRow(file, secondRow);
    // secondRow has been initialized with one value
    int column = 1; // don't forget, the first column is 0
    VertexSet* rows = new VertexSet[allColumns];
    rows[1] = secondRow;
    string vertexString;
    long double vertexDouble;
    for (int row = 1; row < allColumns; row++) {
        // don't do the last row
        for (; column < allColumns; column++) {
            // don't do the last column
            getline(file, vertexString, ',');
            vertexDouble = stold(vertexString);
            if (vertexDouble > _TH) {
                rows[row].add(column);
            }
        }
        // do the last in the column
        getline(file, vertexString);
        vertexDouble = stold(vertexString);
        if (vertexDouble > _TH) {
            rows[row].add(++column);
        }
        column = 0;
    }
    initLastRow(file, rows[allColumns-1], allColumns);

initFirstRow and initLastRow basically do the same thing as the loop above, but initFirstRow also counts the number of columns.

VertexSet is basically a vector of indices (int). Each value read (separated by ',') is at most 7 characters long (values are between -1 and 1).
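For reference, a simplified sketch of what VertexSet amounts to is shown here. Only `add` is used in the code above; the `size` and `at` members and the `indices_` name are illustrative assumptions, not the real class.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical sketch of VertexSet: a thin wrapper around a vector of
// column indices. Only add() appears in the parsing code; the other
// members are assumed for illustration.
class VertexSet {
public:
    // Record that the vertex at `index` passed the threshold for this row.
    void add(int index) { indices_.push_back(index); }

    // Number of indices stored so far.
    std::size_t size() const { return indices_.size(); }

    // Bounds-checked access to the i-th stored index.
    int at(std::size_t i) const { return indices_.at(i); }

private:
    std::vector<int> indices_;
};
```

With a wrapper like this, `add` is an amortized constant-time `push_back`, which is consistent with the profiler pointing at the reading code rather than at the container.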