My idea is simple. I am using mexopencv to check whether any object in my current camera frame matches any of the images stored in my database. I am using the OpenCV DescriptorMatcher class to train on my images.

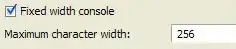

Here is the snippet I want to build on. It is based on one-to-one image matching with mexopencv, and the same approach can be extended to an image stream.

```matlab
function hello

detector  = cv.FeatureDetector('ORB');
extractor = cv.DescriptorExtractor('ORB');
matcher   = cv.DescriptorMatcher('BruteForce-Hamming');

train = [];
for i = 1:3
    train(i).img = [];
    train(i).points = [];
    train(i).features = [];
end

train(1).img = imread('D:\test\1.jpg');
train(2).img = imread('D:\test\2.png');
train(3).img = imread('D:\test\3.jpg');

for i = 1:3
    frameImage = train(i).img;
    framePoints = detector.detect(frameImage);
    frameFeatures = extractor.compute(frameImage, framePoints);
    train(i).points = framePoints;
    train(i).features = frameFeatures;
end

for i = 1:3
    boxfeatures = train(i).features;
    matcher.add(boxfeatures);
end

matcher.train();

camera = cv.VideoCapture;
pause(3); % Sometimes necessary

window = figure('KeyPressFcn', @(obj,evt) setappdata(obj,'flag',true));
setappdata(window, 'flag', false);

while true
    sceneImage = camera.read;
    sceneImage = rgb2gray(sceneImage);
    scenePoints = detector.detect(sceneImage);
    sceneFeatures = extractor.compute(sceneImage, scenePoints);
    m = matcher.match(sceneFeatures);

    %{
    % Commented out from here

    img_no = [m.imgIdx];
    img_no = img_no(1);
    % I am planning to do this based on the fact that on a perfect
    % match imgIdx (a 1xN vector) will be filled with the index of
    % the training example: 1, 2 or 3

    objPoints = train(img_no+1).points;
    boxImage = train(img_no+1).img;

    ptsScene = cat(1, scenePoints([m.queryIdx]+1).pt);
    ptsScene = num2cell(ptsScene, 2);

    ptsObj = cat(1, objPoints([m.trainIdx]+1).pt);
    ptsObj = num2cell(ptsObj, 2);
    % This is where the problem starts: assuming the above is correct,
    % MATLAB reports "Index exceeds matrix dimensions."

    [H, inliers] = cv.findHomography(ptsScene, ptsObj, 'Method', 'Ransac');
    m = m(inliers);
    imgMatches = cv.drawMatches(sceneImage, scenePoints, boxImage, objPoints, m, ...
        'NotDrawSinglePoints', true);
    imshow(imgMatches);

    % Commented out up to here
    %}

    flag = getappdata(window, 'flag');
    if isempty(flag) || flag, break; end
    pause(0.0001);
end
```
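A related detail in the commented-out block: `m = m(inliers)` only works if `inliers` is a logical mask. If your mexopencv version returns the RANSAC mask from `cv.findHomography` as a numeric 0/1 vector (I have not verified which type it returns), MATLAB rejects the 0 entries as indices. A minimal sketch with made-up matches and a hypothetical mask, converting it to logical before indexing:

```matlab
% Hypothetical matches (0-based indices, as mexopencv reports them)
m = struct('queryIdx', {0, 1, 2, 3}, 'trainIdx', {5, 7, 9, 11}, ...
           'imgIdx', {0, 0, 0, 0}, 'distance', {10, 12, 40, 15});

% Hypothetical RANSAC mask as a numeric 0/1 vector, one entry per match
inliers = uint8([1; 1; 0; 1]);

% A numeric mask would be read as indices (0 is invalid), so convert
% it to logical and orient it like the struct array before indexing
keep = logical(inliers(:)');
m = m(keep);
```

Calling `logical` is harmless if the mask is already logical, so the conversion is safe either way.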

The issue is that imgIdx is a 1xN vector containing the indices of different training images, which is expected. Only on a perfect match is imgIdx filled entirely with the index of the matched image. So how do I use this vector to pick the right image index? Also, in these two lines I get an "index exceeds matrix dimensions" error:

```matlab
ptsObj = cat(1,objPoints([m.trainIdx]+1).pt);
ptsObj = num2cell(ptsObj,2);
```

The cause seems clear: while debugging I saw that the values in m.trainIdx go beyond the number of elements in objPoints, i.e. I am accessing points that do not exist, hence the index error.
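Since imgIdx will rarely be uniform in practice, one option is to treat it as a vote: count the matches that point into each training image, pick the image with the most matches, and then discard every match that points into a different image. After that filtering, every trainIdx refers to the chosen image's keypoints, which is what the ptsObj lines need. This is a heuristic sketch on hand-made match data, not a documented mexopencv feature; `nImages` and the threshold `minMatches` are hypothetical names and values that would need tuning.

```matlab
% Hypothetical matches against three training images
% (all indices 0-based, as mexopencv reports them)
m = struct('queryIdx', {0, 1, 2, 3, 4, 5}, ...
           'trainIdx', {12, 3, 250, 7, 40, 3}, ...
           'imgIdx',   {1, 0, 1, 1, 2, 1}, ...
           'distance', {20, 55, 18, 25, 60, 22});
nImages = 3;      % number of descriptor sets added with matcher.add
minMatches = 3;   % hypothetical threshold for "an object is present"

% Count how many matches point into each training image
counts = accumarray([m.imgIdx]' + 1, 1, [nImages 1])';
[bestCount, best] = max(counts);   % best is 1-based, i.e. train(best)

if bestCount >= minMatches
    % Keep only matches into the winning image, so that every
    % trainIdx is a valid (0-based) row of train(best).points
    m = m([m.imgIdx] == best - 1);
    sceneRows = [m.queryIdx] + 1;   % 1-based rows into scenePoints
    objRows   = [m.trainIdx] + 1;   % 1-based rows into train(best).points
else
    best = [];                      % no training image matched well enough
end
```

With real data, `objPoints = train(best).points` and `objRows` would then replace `img_no+1` and `[m.trainIdx]+1` in the original loop. An empty match list should be checked before calling `accumarray`.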

There is scant documentation on the use of imgIdx, so I would appreciate help from anyone who knows this subject.
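As far as I understand the OpenCV side (worth checking against the source for your version), when several descriptor sets are added to one matcher, each returned match carries imgIdx, the 0-based position of the training set it came from, and trainIdx, a 0-based row within that set only. OpenCV's internal descriptor collection keeps a start offset per image to translate an index into the concatenated descriptors back into such a pair. The sketch below reproduces that bookkeeping with made-up numbers; `counts` and `globalIdx` are hypothetical.

```matlab
% Hypothetical descriptor counts for three training images
counts = [500 320 410];

% 0-based start offset of each image inside the concatenated set
startIdx = [0, cumsum(counts(1:end-1))];

% Hypothetical 0-based indices into the concatenated set
globalIdx = [0 499 500 819 820 1229];

imgIdx = zeros(size(globalIdx));
trainIdx = zeros(size(globalIdx));
for k = 1:numel(globalIdx)
    % The owning image is the last one whose start offset is <= the index
    img = find(startIdx <= globalIdx(k), 1, 'last');   % 1-based image number
    imgIdx(k) = img - 1;                               % 0-based, as OpenCV reports it
    trainIdx(k) = globalIdx(k) - startIdx(img);        % 0-based row within that image
end
```

This is why a trainIdx value is only meaningful together with its imgIdx: a value of 319 is valid for the second image here but would be a different keypoint (or out of range) for another one.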

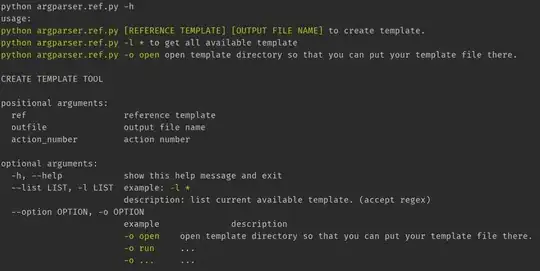

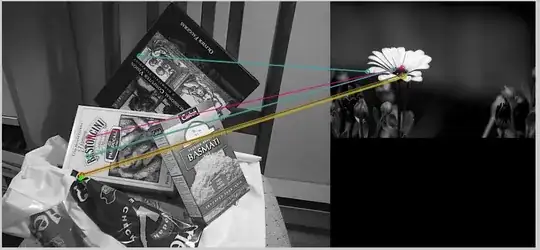

These are the images I used (Image1, Image2 and Image3).

1st update after @Amro's response:

With the ratio of min distance to distance set to 3.6, I get the following result.

With the ratio of min distance to distance set to 1.6, I get the following result.
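Assuming the ratio here means keeping only matches whose distance is at most `ratio` times the smallest distance in the current frame (the filter used in the common OpenCV feature-matching tutorials), a minimal sketch on made-up ORB match distances looks like this; `ratio` and the sample data are hypothetical:

```matlab
% Hypothetical matches with Hamming distances (0-based indices)
m = struct('queryIdx', {0, 1, 2, 3, 4}, 'trainIdx', {4, 9, 2, 6, 1}, ...
           'imgIdx', {0, 0, 0, 0, 0}, 'distance', {10, 14, 40, 25, 70});

ratio = 3.6;                      % the factor being varied (3.6 or 1.6 above)
d = [m.distance];
good = m(d <= ratio * min(d));    % keep matches close to the best one
```

A smaller ratio keeps fewer, more reliable matches. If the best distance is 0, the threshold collapses to 0, so a small lower bound on the threshold may be needed.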