Fair warning: this could be confusing, and the code I have written is more of a mind map than finished code.

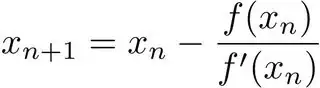

I am trying to implement the Newton-Raphson method to solve equations. What I can't figure out is how to write this equation in Python, so that it calculates the next approximation (xn+1) from the last approximation (xn). I have to use a loop to get closer and closer to the real answer, and the loop should terminate when the change between approximations is less than the variable h.
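
For reference, assuming the image shows the standard Newton-Raphson update, the step reads as follows, with the derivative approximated by the central difference that `derivative()` computes. The symbols are an assumed reading of the image, not copied from it:

$$
x_{n+1} = x_n - \frac{f(x_n)}{f'(x_n)}, \qquad f'(x_n) \approx \frac{f(x_n + h) - f(x_n - h)}{2h}
$$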

- How do I write the code for the equation?

- How do I terminate the loop when the approximations are not changing anymore?

    # Calculates the derivative for equation f, in point x, with the accuracy h
    # (this is used in the equation for solve())
    def derivative(f, x, h):
        deriv = (1.0/(2*h))*(f(x+h)-f(x-h))
        return deriv

    # The numerical equation solver
    # Supposed to loop until the difference between approximations is less than h
    def solve(f, x0, h):
        xn = x0
        prev = 0
        while ( approx - prev > h):
            xn = xn - (f(xn))/derivative(f, xn, h)
        return xn
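
A minimal sketch of how both questions could be handled, assuming the goal is the standard Newton-Raphson update combined with the central-difference `derivative()` above. The names `x_next`, `slope`, and `max_iter` are not in the original; `max_iter` is an added safety cap so the loop cannot run forever if the iteration fails to converge. Successive approximations are compared with `abs()`, because the difference between them can be negative.

```python
def derivative(f, x, h):
    """Central-difference estimate of f'(x) with step h."""
    return (f(x + h) - f(x - h)) / (2.0 * h)


def solve(f, x0, h, max_iter=100):
    """Newton-Raphson iteration starting from x0.

    Stops when two successive approximations differ by less than h.
    max_iter is an added safety cap (an assumption, not in the original).
    """
    xn = x0
    for _ in range(max_iter):
        slope = derivative(f, xn, h)
        if slope == 0:
            raise ZeroDivisionError("derivative is zero; Newton step undefined")
        x_next = xn - f(xn) / slope  # x_{n+1} = x_n - f(x_n) / f'(x_n)
        if abs(x_next - xn) < h:     # approximations have stopped changing
            return x_next
        xn = x_next                  # keep the new value for the next pass
    raise RuntimeError("no convergence within max_iter iterations")


# Example: solve x**2 - 2 = 0 starting from x0 = 1.0
root = solve(lambda x: x**2 - 2, 1.0, 1e-6)
print(root)
```

With this starting point the printed value should be close to the square root of 2. The key change from the draft is that each pass keeps both the old and the new approximation, so the loop has something to compare.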