Frankly, this is a non-trivial question.

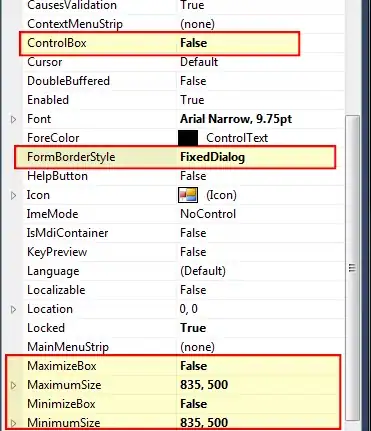

Just to list some obvious options:

- Use one of the many character recognition (OCR) packages to get the string of characters from each image, and then search for one string as a substring of the other.

- For images with almost no difference in zoom level, use an edge detection filter, such as Canny edge detection, to enhance the images, then use ICP (Iterative Closest Point), letting each edge pixel provide a vector to the closest edge pixel with a similar value in the other image. This typically aligns the images if they are similar enough, and the final score tells you how similar they are.

- For very large differences in zoom level, use multiple rotation and zoom hypotheses, and for each one, scale the images and cross-correlate them. Select the hypothesis that gives the best correlation, and use the location of the correlation peak as the x and y offset. The value of the correlation tells you how good a fit you have.

Many other, smarter algorithms have been developed for image fitting. However, you have much larger problems.

The two example images you provided do not show the entire license plate, so you will not be able to say anything better than "the probability of a match is larger than zero". As the number of visible characters increases, so does the probability of a match.
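To make this concrete, here is a rough Bayesian calculation under deliberately simplified assumptions: every character is independent and uniform over 36 symbols (A-Z and 0-9), and `prior` is a hypothetical probability that the two images show the same plate before any characters are compared. Each additional agreeing visible character makes a coincidental agreement 36 times less likely, so the posterior probability of a match grows with the number of visible characters. With zero visible characters it stays at the prior, i.e. merely "larger than zero".

```python
def match_posterior(k_visible, prior=1e-6, alphabet=36):
    """Posterior probability that two images show the same plate, given that
    k_visible characters are visible in both and agree.

    Assumes characters are independent and uniform over `alphabet` symbols;
    `prior` is a hypothetical probability of a match before looking.
    """
    coincidence = alphabet ** -k_visible  # chance an unrelated plate agrees by luck
    return prior / (prior + (1 - prior) * coincidence)


if __name__ == "__main__":
    for k in range(8):
        print(k, f"{match_posterior(k):.6f}")
```

Real plate formats constrain which characters can appear where, so the independence assumption overstates the evidence; treat the numbers as illustrative only.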

You could argue that small damage to a license plate also increases the probability of a match; in that case, cross-correlation or a similar method is needed to evaluate that probability.