I wanted to fit a simple linear model with lm() without an intercept, so I put -1 in my model formula, as in the example below. The problem is that the R-squared returned by summary(myModel) seems to be overestimated. lm(), summary() and -1 are among the most classic functions and features in R, so I am a bit surprised, and I wonder whether this is a bug or whether there is a reason for this behaviour.

Here is an example:

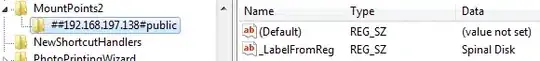

```r
# Simulated data: true model is y = 1 + x + noise
x <- rnorm(1000, 3, 1)
mydf <- data.frame(x = x, y = 1 + x + rnorm(1000, 0, 1))

plot(y ~ x, mydf, xlim = c(-2, 10), ylim = c(-2, 10))

mylm1 <- lm(y ~ x, mydf)      # with intercept
mylm2 <- lm(y ~ x - 1, mydf)  # without intercept (-1 removes it)

abline(mylm1, col = "blue"); abline(mylm2, col = "red")
abline(h = 0, lty = 2); abline(v = 0, lty = 2)  # dashed lines through the origin

# "Manual" R-squared: 1 - var(residuals) / var(y)
r2.1 <- 1 - var(residuals(mylm1)) / var(mydf$y)
r2.2 <- 1 - var(residuals(mylm2)) / var(mydf$y)

r2 <- c(paste0("Intercept - r2: ", format(summary(mylm1)$r.squared, digits = 4)),
        paste0("Intercept - manual r2: ", format(r2.1, digits = 4)),
        paste0("No intercept - r2: ", format(summary(mylm2)$r.squared, digits = 4)),
        paste0("No intercept - manual r2: ", format(r2.2, digits = 4)))

# Colours 4 (blue) and 2 (red) match the two fitted lines
legend("bottomright", legend = r2, col = c(4, 4, 2, 2), lty = 1, cex = 0.6)
```
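For comparison, here is a minimal sketch that recomputes both R-squared values from sums of squares. It relies on the formula given on the ?summary.lm help page: R^2 = 1 - Sum(r[i]^2) / Sum((y[i] - y*)^2), where y* is the mean of y if the model has an intercept and zero otherwise. The seed and the helper names (rss1, tss_centred, tss_uncentred, etc.) are illustrative additions, not part of the original example.

```r
set.seed(1)  # illustrative seed, only so the sketch is reproducible
x <- rnorm(1000, 3, 1)
mydf <- data.frame(x = x, y = 1 + x + rnorm(1000, 0, 1))

mylm1 <- lm(y ~ x, mydf)      # with intercept
mylm2 <- lm(y ~ x - 1, mydf)  # without intercept

# Residual sums of squares
rss1 <- sum(residuals(mylm1)^2)
rss2 <- sum(residuals(mylm2)^2)

# Total sum of squares: centred on mean(y) vs. centred on zero
tss_centred   <- sum((mydf$y - mean(mydf$y))^2)
tss_uncentred <- sum(mydf$y^2)

r2_with_int         <- 1 - rss1 / tss_centred
r2_no_int_uncentred <- 1 - rss2 / tss_uncentred  # denominator around zero
r2_no_int_centred   <- 1 - rss2 / tss_centred    # denominator around mean(y)

print(c(summary = summary(mylm1)$r.squared, manual = r2_with_int))
print(c(summary   = summary(mylm2)$r.squared,
        uncentred = r2_no_int_uncentred,
        centred   = r2_no_int_centred))
```

Because mean(y) is far from zero here, sum(y^2) is much larger than the centred total sum of squares, so the zero-centred R-squared comes out higher than the mean-centred one for the same residuals.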