First, a paper you should consider reading if you want to understand floating point foibles better: "What Every Computer Scientist Should Know About Floating-Point Arithmetic," http://www.validlab.com/goldberg/paper.pdf

And now to some meat.

The following code is bare bones, and attempts to produce an IEEE-754 single precision float from an unsigned int in the range 0 < value < 2^24. That's the format you're most likely to encounter on modern hardware, and it's the format you seem to reference in your original question.

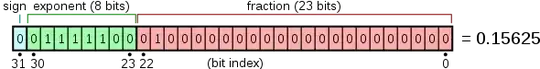

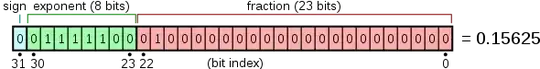

IEEE-754 single-precision floats are divided into three fields: a single sign bit, 8 bits of exponent, and 23 bits of significand (sometimes called a mantissa). IEEE-754 uses a hidden leading 1 in the significand, meaning that the significand is effectively 24 bits. The bits are packed left to right, with the sign bit in bit 31, the exponent in bits 30 .. 23, and the significand in bits 22 .. 0. The following diagram from Wikipedia illustrates:

The exponent has a bias of 127, meaning that the actual exponent associated with the floating point number is 127 less than the value stored in the exponent field. An exponent of 0 therefore would be encoded as 127.

(Note: The full Wikipedia article may be interesting to you. Ref: http://en.wikipedia.org/wiki/Single_precision_floating-point_format )

Therefore, the IEEE-754 number 0x40000000 is interpreted as follows:

- Bit 31 = 0: Positive value

- Bits 30 .. 23 = 0x80: Exponent = 128 - 127 = 1 (aka. 2^1)

- Bits 22 .. 0 are all 0: Significand = 1.00000000_00000000_0000000. (Note I restored the hidden 1).

So the value is 1.0 x 2^1 = 2.0.
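The field breakdown above can be sketched in code. This is only an illustration: the names `FloatFields`, `split_fields`, and `normal_value` are made up for this example, and the sketch handles normal numbers only (no zero, denormals, infinities, or NaNs).

```cpp
#include <cmath>
#include <cstdint>

// Hypothetical helper type, for illustration only.
struct FloatFields {
    std::uint32_t sign;         // bit 31
    std::uint32_t exponent;     // bits 30 .. 23, still biased by 127
    std::uint32_t significand;  // bits 22 .. 0, hidden 1 not included
};

// Split a 32-bit IEEE-754 single-precision pattern into its three fields.
FloatFields split_fields(std::uint32_t bits)
{
    FloatFields f;
    f.sign        = bits >> 31;
    f.exponent    = (bits >> 23) & 0xFF;
    f.significand = bits & 0x7FFFFF;
    return f;
}

// Value of a normal number (exponent field 1 .. 254). Special encodings
// are deliberately not handled in this sketch.
double normal_value(const FloatFields& f)
{
    // Restore the hidden 1; 8388608 is 2^23.
    double sig = 1.0 + f.significand / 8388608.0;
    // Remove the bias of 127 and scale by the power of two.
    double v = std::ldexp(sig, static_cast<int>(f.exponent) - 127);
    return f.sign ? -v : v;
}
```

For 0x40000000, `split_fields` yields sign 0, exponent field 0x80, and significand field 0, and `normal_value` gives 2.0, matching the walk-through above.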

To convert an unsigned int in the limited range given above, then, to something in IEEE-754 format, you might use a function like the one below. It takes the following steps:

- Aligns the leading 1 of the integer to the position of the hidden 1 in the floating point representation.

- While aligning the integer, records the total number of shifts made.

- Masks away the hidden 1.

- Using the number of shifts made, computes the exponent and appends it to the number.

- Using `reinterpret_cast`, converts the resulting bit-pattern to a float. This part is an ugly hack, because it uses a type-punned pointer. You could also do this by abusing a union. Some platforms provide an intrinsic operation (such as `_itof`) to make this reinterpretation less ugly.

There are much faster ways to do this; this one is meant to be pedagogically useful, if not super efficient:

    float uint_to_float(unsigned int significand)
    {
        // Only support 0 < significand < 1 << 24.
        if (significand == 0 || significand >= 1 << 24)
            return -1.0;  // or abort(); or whatever you'd like here.

        int shifts = 0;

        // Align the leading 1 of the significand to the hidden-1
        // position.  Count the number of shifts required.
        while ((significand & (1 << 23)) == 0)
        {
            significand <<= 1;
            shifts++;
        }

        // The number 1.0 has an exponent of 0, and would need to be
        // shifted left 23 times.  The number 2.0, however, has an
        // exponent of 1 and needs to be shifted left only 22 times.
        // Therefore, the exponent should be (23 - shifts).  IEEE-754
        // format requires a bias of 127, though, so the exponent field
        // is given by the following expression:
        unsigned int exponent = 127 + 23 - shifts;

        // Now merge significand and exponent.  Be sure to strip away
        // the hidden 1 in the significand.
        unsigned int merged = (exponent << 23) | (significand & 0x7FFFFF);

        // Reinterpret as a float and return.  This is an evil hack.
        return *reinterpret_cast< float* >( &merged );
    }
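If the type-punned pointer bothers you, one common alternative for the final reinterpretation step is to copy the bytes with `std::memcpy`. The sketch below assumes `float` is 32 bits wide and uses IEEE-754 single-precision encoding; `bits_to_float` is a name I made up for this example.

```cpp
#include <cstdint>
#include <cstring>

// Reinterpret a 32-bit pattern as a float by copying its bytes.
// Assumes float is a 32-bit IEEE-754 single-precision type.
float bits_to_float(std::uint32_t bits)
{
    static_assert(sizeof(float) == sizeof(std::uint32_t),
                  "this sketch assumes a 32-bit float");
    float result;
    std::memcpy(&result, &bits, sizeof result);
    return result;
}
```

With this helper, `bits_to_float(0x40000000)` gives 2.0f. If you can use C++20, `std::bit_cast<float>(bits)` from `<bit>` expresses the same thing.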

You can make this process more efficient using functions that detect the leading 1 in a number. (These sometimes go by names like clz for "count leading zeros", or norm for "normalize".)
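As a rough sketch of that idea, here is a variant that replaces the alignment loop with a single count-leading-zeros operation. It assumes GCC or Clang (for the `__builtin_clz` builtin) and a 32-bit `unsigned int`; the name `uint_to_float_clz` is made up for this example. C++20's `std::countl_zero` would be a portable substitute.

```cpp
#include <cstdint>
#include <cstring>

// Same limited range as the loop version: 0 < value < 2^24.
// Assumes GCC/Clang (__builtin_clz) and a 32-bit unsigned int.
float uint_to_float_clz(std::uint32_t value)
{
    if (value == 0 || value >= (1u << 24))
        return -1.0f;  // same placeholder error value as the loop version

    // __builtin_clz counts the zero bits above the leading 1 (its result
    // is undefined for 0, which is excluded above). The leading 1 has to
    // land in bit 23, which has 8 bits above it in a 32-bit word.
    int shifts = __builtin_clz(value) - 8;
    value <<= shifts;

    // Biased exponent, exactly as in the loop version.
    std::uint32_t exponent = 127 + 23 - shifts;

    // Strip the hidden 1 and merge the fields.
    std::uint32_t merged = (exponent << 23) | (value & 0x7FFFFF);

    // Copy the bit pattern into a float.
    float result;
    std::memcpy(&result, &merged, sizeof result);
    return result;
}
```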

You can also extend this to signed numbers by recording the sign, taking the absolute value of the integer, performing the steps above, and then putting the sign into bit 31 of the number.
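A minimal sketch of the signed extension might look like the following. The name `int_to_float` and the choice to return NaN for out-of-range input are my own assumptions (-1.0 no longer works as an error marker once negative results are valid). Zero is returned as 0.0f, since the all-zero bit pattern encodes 0.0.

```cpp
#include <cstdint>
#include <cstring>
#include <limits>

// Supports -2^24 < value < 2^24. Out-of-range input returns NaN, which
// is an arbitrary choice made for this sketch.
float int_to_float(std::int32_t value)
{
    // Record the sign, then work on the absolute value. Negating in
    // unsigned arithmetic avoids overflow for the most negative int.
    std::uint32_t sign = value < 0 ? 1u : 0u;
    std::uint32_t magnitude = static_cast<std::uint32_t>(value);
    if (sign)
        magnitude = 0u - magnitude;

    if (magnitude == 0)
        return 0.0f;
    if (magnitude >= (1u << 24))
        return std::numeric_limits<float>::quiet_NaN();

    // Align the leading 1 to the hidden-1 position, counting shifts.
    int shifts = 0;
    while ((magnitude & (1u << 23)) == 0) {
        magnitude <<= 1;
        shifts++;
    }

    std::uint32_t exponent = 127 + 23 - shifts;

    // Put the sign into bit 31, then the exponent and the significand
    // with its hidden 1 stripped.
    std::uint32_t merged =
        (sign << 31) | (exponent << 23) | (magnitude & 0x7FFFFF);

    float result;
    std::memcpy(&result, &merged, sizeof result);
    return result;
}
```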

For integers >= 2^24, the integer can need more than 24 significant bits, so it does not necessarily fit into the significand of the 32-bit float format. This is why you need to "round": you lose LSBs in order to make the value fit. Thus, multiple integers will end up mapping to the same floating point pattern. The exact mapping depends on the rounding mode (round toward -Inf, round toward +Inf, round toward zero, round to nearest with ties to even). But the fact of the matter is you can't shove more than 24 bits into 24 bits without some loss.

You can see this in terms of the code above. It works by aligning the leading 1 to the hidden 1 position. If a value were >= 2^24, the code would need to shift right, not left, and that necessarily shifts LSBs away. Rounding modes just tell you how to handle the bits shifted away.
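To make the right-shift case concrete, here is a sketch that covers the full 32-bit unsigned range and rounds to nearest, ties to even (the default mode on most hardware, as far as I know). The name `uint_to_float_rne` is made up for this example, and other rounding modes would need a different rule for the dropped bits.

```cpp
#include <cstdint>
#include <cstring>

// Full-range unsigned conversion using round to nearest, ties to even.
float uint_to_float_rne(std::uint32_t value)
{
    if (value == 0)
        return 0.0f;  // the all-zero pattern encodes 0.0

    // Find the position of the leading 1 (0 .. 31).
    int msb = 31;
    while ((value & (1u << msb)) == 0)
        msb--;

    std::uint32_t exponent = 127 + msb;
    std::uint32_t significand;

    if (msb <= 23) {
        // Fits in 24 bits: shift left, nothing is lost.
        significand = value << (23 - msb);
    } else {
        // Too wide: shift right and look at the bits that fall off.
        int drop = msb - 23;                         // 1 .. 8 bits
        std::uint32_t dropped = value & ((1u << drop) - 1);
        std::uint32_t half = 1u << (drop - 1);
        significand = value >> drop;

        // Round up if more than half an LSB was dropped, or exactly
        // half and the kept value is odd (ties to even).
        if (dropped > half || (dropped == half && (significand & 1)))
            significand++;

        // Rounding can carry out of 24 bits; renormalize if so.
        if (significand == (1u << 24)) {
            significand >>= 1;
            exponent++;
        }
    }

    std::uint32_t merged = (exponent << 23) | (significand & 0x7FFFFF);
    float result;
    std::memcpy(&result, &merged, sizeof result);
    return result;
}
```

With this rule, 16777217 (2^24 + 1) is a tie and rounds down to 16777216.0, while 16777219 is a tie that rounds up to 16777220.0, so two distinct integers can land on the same float.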