I have a CUDA program whose kernel basically does the following.

- I provide a list of n points in Cartesian coordinates, (x_i, y_i), in a plane of dimensions dim_x * dim_y, and launch the kernel with one thread per point of the plane.

- For every point (x_p, y_p) on this plane, I use a formula to calculate the time each of the n points would take to reach it, given that the n points move with a certain velocity.

- I sort those times in increasing order, t_0, t_1, ..., t_{n-1}, keeping one decimal place of precision, i.e. if t'_i = 2.3453 I only use t_i = 2.3.

- Assuming the times follow a normal distribution, I run a random simulation on the 3 quickest times to estimate the percentage of trials in which each of those 3 points arrives first. Suppose this gives prob_0 = 0.76, prob_1 = 0.20 and prob_2 = 0.04. Since the point with t_0 arrives first most often, I also return its original index (before the times were sorted), say idx_0 = 5 (an integer).

- Hence for every point on this plane I get a pair (prob,idx).
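The per-pixel steps above only ever use the three smallest times and their original indices, so a full sort of all n times is not strictly needed. Below is a minimal sketch (not the code from this question) of a single-pass partial selection that keeps a sorted triple. `Top3` and `top3` are hypothetical names; it assumes n >= 3 and that the n arrival times for one pixel are already in an array.

```cuda
#include <cfloat>

// Hypothetical result type: the three smallest times in increasing order,
// plus the original index (before sorting) of each of those points.
struct Top3 {
    float t[3];
    int   idx[3];
};

// Partial selection: O(n) per pixel instead of sorting all n times.
// Assumes n >= 3.
__host__ __device__ inline Top3 top3(const float* times, int n) {
    Top3 r = {{FLT_MAX, FLT_MAX, FLT_MAX}, {-1, -1, -1}};
    for (int k = 0; k < n; ++k) {
        float t = times[k];
        if (t < r.t[2]) {
            // Insert t into the sorted triple, shifting larger entries down;
            // the previous third-smallest entry is dropped.
            int pos = 2;
            while (pos > 0 && t < r.t[pos - 1]) {
                r.t[pos] = r.t[pos - 1];
                r.idx[pos] = r.idx[pos - 1];
                --pos;
            }
            r.t[pos] = t;
            r.idx[pos] = k;
        }
    }
    return r;
}
```

Inside the kernel this could be called as `Top3 best = top3(times, n);`, after which `best.idx[0]` is the original index of the quickest point. Ties keep the lower original index first because the comparison is strict.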

Suppose n/2 of those points are of one kind and the rest of another kind. A sample generated image is shown below.

With the time precision set to one decimal place, I noticed that the number of unique 3-tuples of times (t_0, t_1, t_2) was only 2.5% of the total number of data points, i.e. of the number of points on the plane. This means that most of the time the kernel was needlessly simulating when it could just reuse the values from previous simulations. Hence I could use a dictionary with the 3-tuple of times as key and the index and prob as value. Since, as far as I know and have tested, the STL can't be used inside a kernel, I constructed an array of 201,000,000 floats instead. This size was chosen by experimentation: none of the top 3 times exceeded 20 seconds, so t_0 could take any value from {0.0, 0.1, 0.2, ..., 20.0}, i.e. 201 choices. I could construct a key for such a dictionary like the following:

- Key = t_0 * 10^6 + t_1 * 10^3 + t_2, with each t_i expressed as an integer number of tenths of a second (0 to 200), so the largest key is 200,200,200, which fits in the array.
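How the key is evaluated matters. A `float` represents integers exactly only up to 2^24 = 16,777,216, so if this key is computed in `float` arithmetic, distinct tuples near the top of the range collapse onto the same slot: (200, 200, 199) and (200, 200, 200) both round to 200,200,192.0f. A minimal sketch, assuming the times are truncated to integer tenths, computes the key in integer arithmetic. `tenths`, `sparse_key`, `dense_key` and `kLevels` are hypothetical names; `dense_key` is a swapped-in alternative (base-201 digits) that needs 201^3 = 8,120,601 slots, about 32.5 MB of 4-byte entries, instead of the roughly 804 MB taken by 201,000,000 floats.

```cuda
#include <cstdint>

// Assumption: each time is stored as an integer number of tenths of a second,
// truncated (2.3453 -> 23), so 0 <= T <= 200 for times in [0.0, 20.0].
__host__ __device__ inline uint32_t tenths(float t) {
    return static_cast<uint32_t>(t * 10.0f);  // t >= 0, so the cast truncates
}

// The question's key layout, evaluated entirely in integer arithmetic.
// Largest key: 200 * 10^6 + 200 * 10^3 + 200 = 200,200,200 < 201,000,000.
__host__ __device__ inline uint32_t sparse_key(uint32_t T0, uint32_t T1, uint32_t T2) {
    return T0 * 1000000u + T1 * 1000u + T2;
}

// Alternative (not from the question): treat (T0, T1, T2) as base-201 digits.
// Keys then lie in [0, 201^3) = [0, 8,120,601).
constexpr uint32_t kLevels = 201u;

__host__ __device__ inline uint32_t dense_key(uint32_t T0, uint32_t T1, uint32_t T2) {
    return (T0 * kLevels + T1) * kLevels + T2;
}
```

For example, `dense_key(tenths(t0), tenths(t1), tenths(t2))` indexes a table of 8,120,601 entries with no unused gaps.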

For the value, I store (prob + idx). Since idx is an integer and 0.0 <= prob <= 1.0, I can later retrieve both values with

- `prob = dict[key] - floor(dict[key])`

- `idx = floor(dict[key])`
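Packing prob and idx into one float this way has two weak spots that could produce wrong values for some points. When prob is exactly 1.0 the sum is the next integer (5 + 1.0 = 6.0), which decodes as idx = 6 and prob = 0.0. And because a float has only a 24-bit significand, every bit needed for idx is one bit less of precision for prob. A minimal sketch of an alternative, assuming idx < 65535, packs both into one 32-bit unsigned integer. `pack_entry`, `entry_idx`, `entry_prob`, `kEmpty` and the bit layout are hypothetical choices, not part of the question's code.

```cuda
#include <cstdint>
#include <cmath>

// Hypothetical packed format (an assumption, not the question's encoding):
//   bits 31..16 : idx + 1  (so a valid entry is never 0; requires idx < 65535)
//   bits 15..0  : prob quantised to steps of 1/65535
// A zero-initialised array therefore still means "every slot is empty".
constexpr uint32_t kEmpty = 0u;

__host__ __device__ inline uint32_t pack_entry(uint32_t idx, float prob) {
    prob = fminf(fmaxf(prob, 0.0f), 1.0f);                       // clamp to [0, 1]
    uint32_t q = static_cast<uint32_t>(prob * 65535.0f + 0.5f);  // round to nearest step
    return ((idx + 1u) << 16) | q;
}

__host__ __device__ inline uint32_t entry_idx(uint32_t entry) {
    return (entry >> 16) - 1u;
}

__host__ __device__ inline float entry_prob(uint32_t entry) {
    return static_cast<float>(entry & 0xFFFFu) / 65535.0f;
}
```

With this layout the cache becomes an `unsigned int` array and the kernel's test `dict[key] > 0.0` becomes `dict[key] != kEmpty`. prob is kept to steps of about 1.5e-5 (1/65535), and prob = 1.0 no longer spills into idx.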

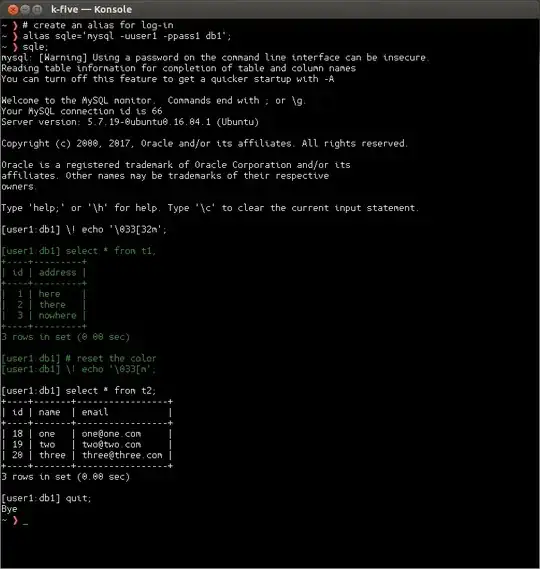

So now my kernel looks like this:

```cuda
__global__ void my_kernel(float* points, float* dict, float* p, float* i, size_t w, ...){
    unsigned int col = blockIdx.y*blockDim.y + threadIdx.y;
    unsigned int row = blockIdx.x*blockDim.x + threadIdx.x;
    float prob, idx;
    size_t key;

    // Calculate time taken for each of the points to reach a particular point on the plane

    // Order the times in increasing order t_0, t_1, ..., t_{n-1}

    // Calculate key = t_0 * 10^6 + t_1 * 10^3 + t_2

    if (dict[key] > 0.0f) {
        // Cached: unpack the stored (prob + idx)
        prob = dict[key] - floorf(dict[key]);
        idx  = floorf(dict[key]);
    }
    else {
        // Simulate and find prob and idx

        dict[key] = prob + idx;
    }

    p[row*width + col] = prob;
    i[row*width + col] = idx;
}
```

The result matches the original program for most points, but for some points it is wrong.

I am quite sure that this is due to a race condition. Note that dict was initialized with all zeroes. The basic idea would be to make each location of dict "write once, read many": written only by the first thread that needs it, and only read after that.
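For "write once, read many", the usual primitive is `atomicCAS(address, compare, val)`: it stores `val` only if `*address == compare` and returns the old value either way, so the caller can tell whether its write won. It has no `float` overload, but the bits of a `float` slot can be compared as an `int` via `__float_as_int` / `__int_as_float`, so it works on the question's zero-initialised float `dict` (0.0f means empty). This sketch also reads `dict[key]` only once: in the kernel above, another thread can overwrite the slot between the `> 0.0` check and the two `floor` reads, which would mix prob and idx from different simulations. `simulate_packed`, `lookup_or_publish`, `race_on_one_key` and the `seed` parameter are hypothetical; `simulate_packed` is a placeholder that deliberately returns a different value per thread.

```cuda
#include <cstddef>

// Hypothetical stand-in for "Simulate and find prob and idx": returns a packed
// prob + idx value that deliberately differs per thread, like independent
// random simulations would.
__device__ float simulate_packed(unsigned int seed) {
    return 3.0f + 0.001f * static_cast<float>(seed % 500u + 1u);
}

// Returns the one value that is (or becomes) stored in dict[key].
// dict must be zero-initialised; 0.0f marks an empty slot.
__device__ float lookup_or_publish(float* dict, size_t key, unsigned int seed) {
    float cached = dict[key];  // read the slot once and only use this copy
    if (cached > 0.0f) return cached;

    float mine = simulate_packed(seed);
    // Store `mine` only if the slot still holds the bit pattern of 0.0f.
    int old = atomicCAS(reinterpret_cast<int*>(dict + key),
                        __float_as_int(0.0f), __float_as_int(mine));
    // old == bits of 0.0f: our value was stored. Otherwise another thread
    // published first, and its value wins.
    return (old == __float_as_int(0.0f)) ? mine : __int_as_float(old);
}

// Demo kernel: n threads all race on the same key (0).
__global__ void race_on_one_key(float* dict, float* out, unsigned int n) {
    unsigned int tid = blockIdx.x * blockDim.x + threadIdx.x;
    if (tid >= n) return;
    out[tid] = lookup_or_publish(dict, 0, tid);
}
```

Threads that miss at the same moment may still each run a simulation, but only the first value is stored and every thread returns that same value, so all pixels sharing a key get identical results. There is no spin-waiting, so it cannot hang waiting on a thread that has not been scheduled.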

I am aware that there may be much better ways of solving this problem than allocating so much memory; please let me know if so. But I would really like to understand why this particular solution fails, and in particular how to use atomicAdd in this setting, since my attempts to use it have failed.
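`atomicAdd(address, val)` adds `val` to `*address` and returns the old value. Used directly on `dict`, it would sum the values of every thread that missed, which is not what is wanted. Its return value is what helps: on a separate zero-initialised counter per key, exactly one thread per key gets 0 back and can be made responsible for simulating that key. The other threads with the same key would then have to wait for that result, and spin-waiting on another thread inside one kernel can hang (for example when the owner's block is not resident yet). A minimal sketch that avoids waiting splits the work into two launches; kernels on the same stream run in order, so the second sees all writes from the first. `claimed`, `keys`, `compute_key`, `simulate` and `kNumKeys` are hypothetical, and `compute_key` / `simulate` are placeholders, not the question's formulas.

```cuda
// Hypothetical stand-ins (NOT the real formulas): many pixels share a key,
// and "simulation" is a deterministic placeholder.
constexpr unsigned int kNumKeys = 7u;

__device__ unsigned int compute_key(unsigned int pixel) { return pixel % kNumKeys; }
__device__ float simulate(unsigned int key) { return static_cast<float>(key) + 0.5f; }

// Pass 1: every pixel computes its key; exactly one thread per key simulates.
__global__ void elect_and_simulate(unsigned int* claimed, float* dict,
                                   unsigned int* keys, unsigned int n_pixels,
                                   unsigned int* n_simulations) {
    unsigned int pixel = blockIdx.x * blockDim.x + threadIdx.x;
    if (pixel >= n_pixels) return;
    unsigned int key = compute_key(pixel);
    keys[pixel] = key;  // remembered for pass 2
    // atomicAdd returns the counter value before this thread's increment,
    // so exactly one thread per key sees 0 and becomes the writer.
    if (atomicAdd(&claimed[key], 1u) == 0u) {
        dict[key] = simulate(key);
        atomicAdd(n_simulations, 1u);  // bookkeeping only: counts simulations
    }
}

// Pass 2: a separate launch, so every dict write from pass 1 is complete.
__global__ void read_results(const float* dict, const unsigned int* keys,
                             float* out, unsigned int n_pixels) {
    unsigned int pixel = blockIdx.x * blockDim.x + threadIdx.x;
    if (pixel >= n_pixels) return;
    out[pixel] = dict[keys[pixel]];
}
```

Each key is simulated exactly once, at the cost of a second launch, one counter per dictionary slot, and one stored key per pixel.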