I was watching this video in which Jeff Dean talks about latency and scaling: https://www.youtube.com/watch?v=nK6daeTZGA8#t=515

At the 00:07:34 mark, he gives an example of latency that goes like this:

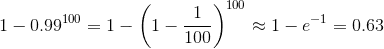

Let's say you have a bunch of servers. Their average response time to a request is 10 ms, but 1% of the time they take 1 s or more to respond. So if a request touches one of these servers, 1% of your requests take 1 s or more. If each request touches 100 of these servers, 63% of your requests take 1 s or more.

How did he arrive at that 63% figure? What is the logic/math behind it?
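One way to reproduce the number is sketched below. It rests on assumptions that are mine, not stated in the talk: each request fans out to all 100 servers and must wait for every reply, and each server is slow independently with probability 0.01. Under those assumptions a request is fast only if all 100 servers are fast, so the chance it is slow is 1 - 0.99^100. The function names are hypothetical, and the Monte Carlo helper is only a sanity check on the closed form.

```python
import random


def prob_at_least_one_slow(p_slow: float, n_servers: int) -> float:
    """Probability that at least one of n independent servers is slow.

    A fanned-out request is slow if ANY server is slow, so take the
    complement of "every server is fast": 1 - (1 - p)^n.
    Assumes servers are slow independently of each other.
    """
    return 1.0 - (1.0 - p_slow) ** n_servers


def simulate(p_slow: float, n_servers: int, trials: int, seed: int = 0) -> float:
    """Monte Carlo estimate of the same probability (hypothetical helper)."""
    rng = random.Random(seed)
    slow_requests = 0
    for _ in range(trials):
        # The request waits for its slowest server, so one slow server
        # is enough to make the whole request slow.
        if any(rng.random() < p_slow for _ in range(n_servers)):
            slow_requests += 1
    return slow_requests / trials


if __name__ == "__main__":
    for n in (1, 100):
        print(f"{n:>3} servers: exact {prob_at_least_one_slow(0.01, n):.3f}")
    print(f"simulated (100 servers): {simulate(0.01, 100, 20_000):.3f}")
```

With these inputs the closed form gives 0.010 for one server and about 0.634 for 100 servers, which matches the 63% in the talk. It is also close to 1 - 1/e ≈ 0.632, since (1 - 1/n)^n approaches 1/e as n grows.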