UPDATE

In Xcode 10.0, an lldb bug made this answer print the wrong value when the Decimal was a value in a Dictionary<String, Decimal> (and probably in other cases). See this question and answer and Swift bug report SR-8989. The bug was fixed by Xcode 11 (possibly earlier).

ORIGINAL

You can add lldb support for formatting NSDecimal (and, in Swift, Foundation.Decimal) by installing Python code that converts the raw bits of the NSDecimal to a human-readable string. This is called a type summary script and is documented under “PYTHON SCRIPTING” on this page of the lldb documentation.

One advantage of using a type summary script is that it doesn't involve running code in the target process. It only reads the value's raw bytes, which can be important for certain targets (a core file, for example, can't run code at all).

Another advantage is that the Xcode debugger's variable view seems to work more reliably with a type summary script than with a summary format like the one in hypercrypt's answer. I had trouble with the summary format, but the type summary script has worked reliably for me.

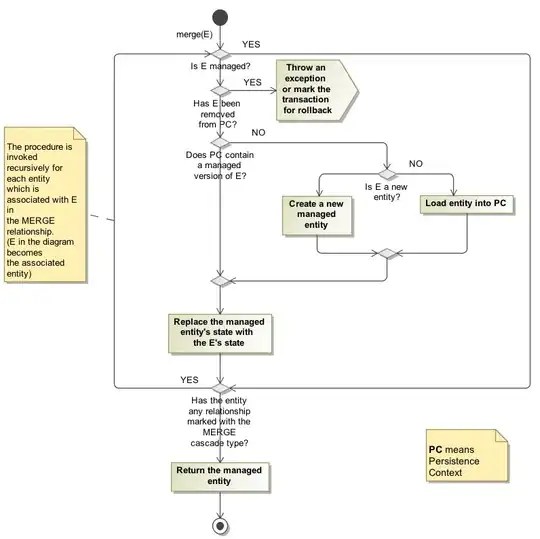

Without a type summary script (or other customization), Xcode shows an NSDecimal (or Swift Decimal) like this:

With a type summary script, Xcode shows it like this:

Setting up the type summary script involves two steps:

1. Save the script (shown below) in a file somewhere. I saved it in ~/.../lldb/Decimal.py.

2. Add a command to ~/.lldbinit to load the script. The command should look like this:

   ```
   command script import ~/.../lldb/Decimal.py
   ```

   Change the path to wherever you stored the script.
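If you'd rather script the second step, here is a minimal Python sketch that appends the import line to ~/.lldbinit only if that exact line isn't already there, so running it twice doesn't load the script twice. The function name ensure_lldbinit_import is hypothetical (it's not part of lldb); pass it whatever path you saved Decimal.py to.

```python
from pathlib import Path


def ensure_lldbinit_import(script_path, lldbinit_path=None):
    """Append `command script import <script_path>` to an lldbinit file
    (default: ~/.lldbinit) unless that exact line is already present.
    Returns True if the line was added, False if it was already there."""
    lldbinit = Path(lldbinit_path) if lldbinit_path else Path.home() / ".lldbinit"
    line = "command script import " + str(script_path)

    # Treat a missing file as empty; it will be created on append.
    text = lldbinit.read_text() if lldbinit.exists() else ""
    if line in (existing.strip() for existing in text.splitlines()):
        return False

    with lldbinit.open("a") as f:
        # Start on a fresh line if the file doesn't already end with a newline.
        if text and not text.endswith("\n"):
            f.write("\n")
        f.write(line + "\n")
    return True
```

The function writes the path exactly as given, so a leading ~ stays unexpanded in ~/.lldbinit, matching the hand-written command above.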

Here's the script. I have also saved it in this gist.

```python
# Decimal / NSDecimal support for lldb
#
# Put this file somewhere, e.g. ~/.../lldb/Decimal.py
# Then add this line to ~/.lldbinit:
#
# command script import ~/.../lldb/Decimal.py

import lldb
from decimal import Decimal, localcontext


def stringForDecimal(sbValue, internal_dict):
    # An NSDecimal is 20 bytes:
    #   byte 0      signed exponent
    #   byte 1      low 4 bits: number of mantissa words in use; 0x10: isNegative
    #   bytes 2-3   other flag and reserved bits (ignored here)
    #   bytes 4-19  mantissa: eight UInt16 words, least significant first
    sbData = sbValue.GetData()
    if not sbData.IsValid():
        raise Exception('unable to get data for ' + repr(sbValue.GetName()))
    if sbData.GetByteSize() != 20:
        raise Exception('expected data to be 20 bytes but found ' + repr(sbData.GetByteSize()))

    sbError = lldb.SBError()

    exponent = sbData.GetSignedInt8(sbError, 0)
    if sbError.Fail():
        raise Exception('unable to read exponent byte: ' + sbError.GetCString())

    flags = sbData.GetUnsignedInt8(sbError, 1)
    if sbError.Fail():
        raise Exception('unable to read flags byte: ' + sbError.GetCString())

    length = flags & 0xf
    isNegative = (flags & 0x10) != 0

    # A zero-length NSDecimal with the sign bit set is NaN.
    if length == 0 and isNegative:
        return 'NaN'
    if length == 0:
        return '0'

    # Use a local context so the precision change doesn't leak into other
    # Python code running in lldb's interpreter.
    with localcontext() as ctx:
        ctx.prec = 200
        value = Decimal(0)
        scale = Decimal(1)
        for i in range(length):
            digit = sbData.GetUnsignedInt16(sbError, 4 + 2 * i)
            if sbError.Fail():
                raise Exception('unable to read memory: ' + sbError.GetCString())
            value += scale * Decimal(digit)
            scale *= 65536
        value = value.scaleb(exponent)
        if isNegative:
            value = -value

    return str(value)


def __lldb_init_module(debugger, internal_dict):
    print('registering Decimal type summaries')
    debugger.HandleCommand('type summary add Foundation.Decimal -F "' + __name__ + '.stringForDecimal"')
    debugger.HandleCommand('type summary add NSDecimal -F "' + __name__ + '.stringForDecimal"')
```
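If you want to check the decoding logic without attaching a debugger, here is a minimal standalone sketch that applies the same byte layout to a plain bytes object using Python's struct module. It assumes a little-endian target (true of Apple's x86_64 and arm64 hardware). The names decode_nsdecimal and encode_nsdecimal are hypothetical helpers for testing, not part of lldb or Foundation.

```python
import struct
from decimal import Decimal, localcontext


def decode_nsdecimal(raw):
    """Decode the 20 raw bytes of an NSDecimal (little-endian assumed),
    following the same rules as stringForDecimal but without lldb."""
    if len(raw) != 20:
        raise ValueError("expected 20 bytes, got %d" % len(raw))
    # byte 0: signed exponent; byte 1: length (low 4 bits) and isNegative (0x10);
    # bytes 2-3: other flags/reserved (ignored); bytes 4-19: eight UInt16 words.
    exponent, flags = struct.unpack_from("<bB", raw, 0)
    words = struct.unpack_from("<8H", raw, 4)
    length = flags & 0xF
    is_negative = bool(flags & 0x10)
    if length == 0:
        return "NaN" if is_negative else "0"
    if length > 8:
        raise ValueError("length %d exceeds the 8 mantissa words" % length)
    with localcontext() as ctx:
        ctx.prec = 200
        # Least significant word first: mantissa = sum(words[i] * 65536**i).
        mantissa = sum(words[i] << (16 * i) for i in range(length))
        value = Decimal(mantissa).scaleb(exponent)
        if is_negative:
            value = -value
    return str(value)


def encode_nsdecimal(exponent, words, is_negative=False):
    """Hypothetical test helper: pack an exponent and mantissa words
    (least significant first) into the 20-byte layout."""
    padded = list(words) + [0] * (8 - len(words))
    flags = len(words) | (0x10 if is_negative else 0)
    return struct.pack("<bBH8H", exponent, flags, 0, *padded)
```

For example, 123.45 is stored as mantissa 12345 with exponent -2, so decode_nsdecimal(encode_nsdecimal(-2, [12345])) returns "123.45". Because str() on a Python Decimal switches to scientific notation when the exponent is positive, mantissa 12 with exponent 2 comes back as "1.2E+3"; the lldb script behaves the same way, since it also returns str(value).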