We use a D3DImage in WPF to display partially transparent 3D content, rendered with Direct3D, on top of a background created in WPF. The setup looks something like this:

    <UserControl Background="Blue">
        <Image>
            <Image.Source>
                <D3DImage .../>
            </Image.Source>
        </Image>
    </UserControl>

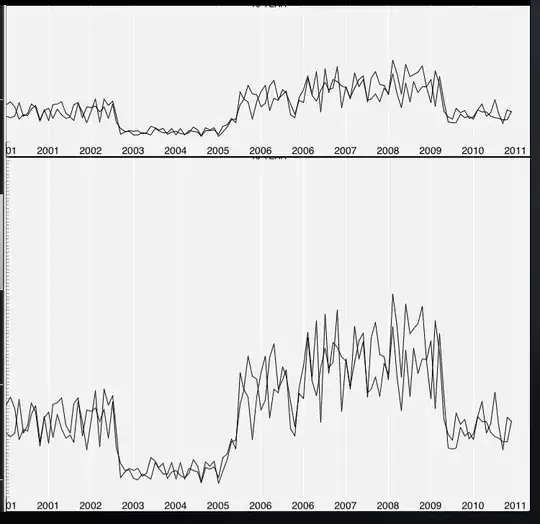

Now, we have the problem that anti-aliased edges are not displayed properly. We get some sort of white "glow" effect at some edges, similar to the effect around the text shown in the first picture:

(taken from http://dvd-hq.info/alpha_matting.php)

The reason is that the color data we get from DirectX is evidently not premultiplied by the alpha channel, whereas WPF and D3DImage expect premultiplied input (see "Introduction to D3DImage" on CodeProject, section "A Brief Look at D3D Interop Requirements").

I verified that this is actually the problem in two ways: by multiplying every pixel by its alpha value in C#, and by doing the same multiplication in a custom pixel shader. Both produced correct results. However, I would like to get this result from DirectX directly.
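For reference, the per-pixel multiplication amounts to something like the following minimal sketch. It is written in C++ rather than the C# we actually used, and the `Pixel` layout and the helper names `mulAlpha`, `premultiply` and `overPremultiplied` are made up for illustration; they are not part of WPF or Direct3D. The last helper only models how a compositor that expects premultiplied input blends a channel over an opaque background, which is my understanding of why straight-alpha data ends up too bright at the edges.

```cpp
#include <cstddef>
#include <cstdint>

// Hypothetical 8-bit-per-channel pixel; the channel order does not matter here.
struct Pixel { std::uint8_t b, g, r, a; };

// Scale one 8-bit channel by an 8-bit alpha value, rounding to nearest.
inline std::uint8_t mulAlpha(std::uint8_t c, std::uint8_t a) {
    return static_cast<std::uint8_t>((c * a + 127) / 255);
}

// Convert straight-alpha pixels to premultiplied alpha in place.
inline void premultiply(Pixel* px, std::size_t count) {
    for (std::size_t i = 0; i < count; ++i) {
        px[i].b = mulAlpha(px[i].b, px[i].a);
        px[i].g = mulAlpha(px[i].g, px[i].a);
        px[i].r = mulAlpha(px[i].r, px[i].a);
        // The alpha channel itself is left unchanged.
    }
}

// "Over" blend of one channel, assuming the source is premultiplied and the
// destination is opaque: out = src + (1 - alpha) * dst, clamped to 255.
inline std::uint8_t overPremultiplied(std::uint8_t src, std::uint8_t a,
                                      std::uint8_t dst) {
    int v = src + mulAlpha(dst, static_cast<std::uint8_t>(255 - a));
    return static_cast<std::uint8_t>(v > 255 ? 255 : v);
}
```

With this model, a half-transparent edge pixel whose color is fed in without premultiplication is composited at nearly its full intensity, which matches the overly bright fringe we see; after `premultiply` the same pixel contributes only about half.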

How can I get premultiplied data from DirectX directly? Can I specify it somehow in the call to CreateRenderTarget? I am no DirectX expert, so please excuse me if the solution is obvious.