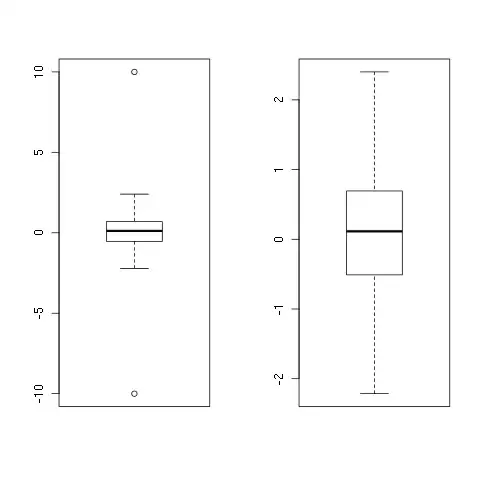

I'm observing a strange phenomenon in my OpenGL program, which is written in C# with OpenTK (core profile). When displaying Mandelbrot data from a heightmap with ~1M vertices, the performance differs depending on the scale value of my view matrices (the projection is orthographic, so yes, I need the scale). The data is rendered using VBOs. The rendering process includes lighting and shadow maps.

My only guess is that something in the shader "errors" at low scale values and some kind of error handling kicks in. Any hints for me?

Examples: