I am trying to develop an algorithm that separates instrumental notes in music files, using C# with C++ DLLs. I've spent quite a long time on it. Here is what I've done so far:

- Perform a specialized FFT on the PCM data (it gives high resolution in both the time and frequency domains)

- Apply a filterbank to the FFT bins to simulate the human hearing system (psychoacoustic model)

- Pattern recognition with peak detection, to produce input data for some machine learning (currently at the planning stage)

So far, I detect peaks with a simple method: picking local maxima. Roughly, x is detected as a peak if f(x-1) < f(x) > f(x+1), where f(x) is the frequency response and x is the frequency index.
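A minimal C++ sketch of that check, for reference. The function name `findLocalMaxima` and the choice of a `std::vector<double>` holding one magnitude per frequency index are assumptions made for illustration, not my actual code.

```cpp
#include <cstddef>
#include <vector>

// Returns every index x where f[x-1] < f[x] > f[x+1].
// Assumption: f holds one magnitude per frequency index.
// The first and last indices are skipped because they have only one neighbour.
std::vector<std::size_t> findLocalMaxima(const std::vector<double>& f) {
    std::vector<std::size_t> peaks;
    for (std::size_t x = 1; x + 1 < f.size(); ++x) {
        if (f[x - 1] < f[x] && f[x] > f[x + 1]) {
            peaks.push_back(x);
        }
    }
    return peaks;
}
```

Because both comparisons are strict, a flat-topped peak (two equal neighbouring values) is not reported by this version.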

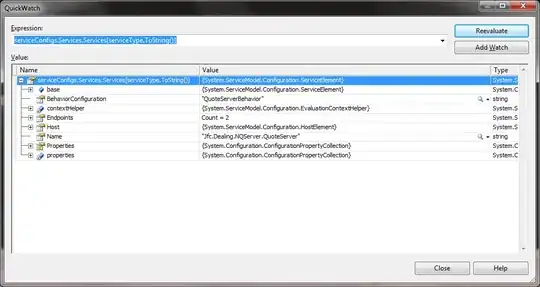

But I have a problem here. If two or more signals are close together in the frequency domain, this method detects only one peak and all the others are hidden. I searched the web for a few days and found concepts called 'peak purity' and 'peak separation'. There are several methods for peak separation, and they actually separate peaks well. Here are a few pictures I found.

(source: chromatography-online.org)
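To make the hidden-peak problem concrete, here is a small synthetic reproduction. It assumes Gaussian-shaped peaks of equal height, which is only a stand-in for my real spectrum; the names `twoGaussians` and `countLocalMaxima` and all the numbers are made up for illustration.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Synthetic spectrum: two equal-height Gaussian peaks of width sigma,
// centred on indices c1 and c2 (illustrative values, not real data).
std::vector<double> twoGaussians(std::size_t bins, double c1, double c2, double sigma) {
    std::vector<double> f(bins);
    for (std::size_t x = 0; x < bins; ++x) {
        const double d1 = (static_cast<double>(x) - c1) / sigma;
        const double d2 = (static_cast<double>(x) - c2) / sigma;
        f[x] = std::exp(-0.5 * d1 * d1) + std::exp(-0.5 * d2 * d2);
    }
    return f;
}

// Counts indices satisfying f[x-1] < f[x] > f[x+1].
std::size_t countLocalMaxima(const std::vector<double>& f) {
    std::size_t count = 0;
    for (std::size_t x = 1; x + 1 < f.size(); ++x) {
        if (f[x - 1] < f[x] && f[x] > f[x + 1]) {
            ++count;
        }
    }
    return count;
}
```

With `sigma = 4`, `countLocalMaxima(twoGaussians(100, 40, 60, 4))` finds both peaks, while `countLocalMaxima(twoGaussians(100, 48, 52, 4))` finds only one: the two components sit closer than 2 sigma apart, so their sum has a single maximum midway between them.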

I think a method using 'deconvolution' would be the best for this situation. But I have no idea how to deconvolve my spectrum and separate the peaks with it. As far as I know, deconvolution won't directly give me the multiple peak components seen in the pictures above. And what filter functions should I use? Since I lack math skills, I need pseudocode-level help. Any other advice is welcome :)