I am just learning C and I have a little knowledge of Objective-C due to dabbling in iOS development. In Objective-C, I was using NSLog(@"%i", x); to print the variable x to the console. However, I have been reading a few C tutorials and they are saying to use %d instead of %i.

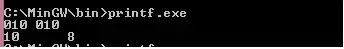

printf("%d", x); and printf("%i", x); both print x to the console correctly.

These both seem to get me to the same place, so which is preferred? Is one more semantically correct than the other?