If performance is the concern, this loop is probably not the bottleneck. Have you considered using the Task Parallel Library or PLINQ? See below:

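```csharp
using System.Threading.Tasks;

// Runs the body for each element on thread-pool threads;
// only mandatory items do any work.
Parallel.ForEach(Collection, obj =>
{
    if (obj.Mandatory)
    {
        DoWork();
    }
});
```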

http://msdn.microsoft.com/en-us/library/dd460688(v=vs.110).aspx
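The same loop can also be written with PLINQ. Below is a minimal sketch that assumes a hypothetical `WorkItem` type with a `Mandatory` flag and a hypothetical `DoWork` that only counts calls. Substitute your own types and work:

```csharp
using System.Collections.Generic;
using System.Linq;
using System.Threading;

// Hypothetical element type standing in for whatever Collection holds.
public class WorkItem
{
    public bool Mandatory { get; set; }
}

public static class PlinqSketch
{
    // Hypothetical stand-in for DoWork(): it only counts calls, using
    // Interlocked because ForAll invokes it from several threads at once.
    private static int processed;

    private static void DoWork(WorkItem item) => Interlocked.Increment(ref processed);

    // Filters and processes the items in parallel, returning how many ran.
    public static int ProcessMandatory(IEnumerable<WorkItem> collection)
    {
        Interlocked.Exchange(ref processed, 0);

        // AsParallel() partitions the source across worker threads, Where
        // filters in parallel, and ForAll runs the action on those same
        // threads without merging results back into one sequence.
        collection.AsParallel()
                  .Where(obj => obj.Mandatory)
                  .ForAll(DoWork);

        // ForAll blocks until every item has been processed.
        return Volatile.Read(ref processed);
    }
}
```

`ForAll` suits cases where the work is a side effect and you don't need to collect results on the calling thread. To collect results, the usual approach is `Select(...)` followed by `ToList()`.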

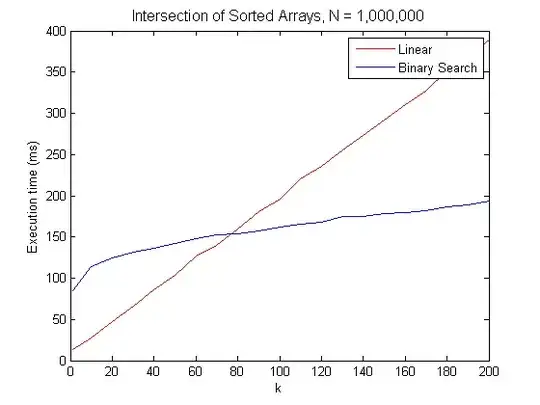

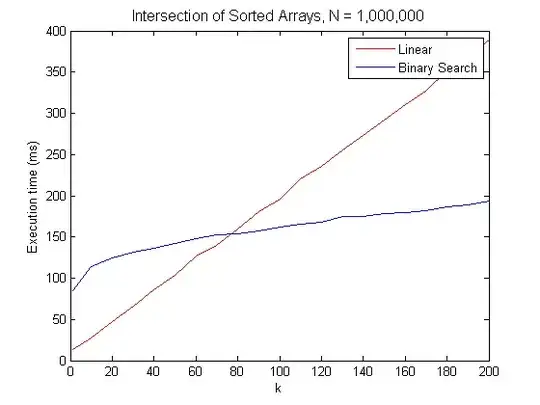

Also, this may be slightly unrelated, but since performance seems to pique your curiosity: if you are dealing with very large sets of data, a binary search may be useful. In my case I have two separate lists of data, each with millions of records, and I need to find the entries in one that are missing from the other. Compared with a linear scan of the target list, a binary search cuts each lookup from O(m) to O(log m) comparisons (m being the size of the sorted list), which saved me a huge amount of time per execution. The downsides are that it only pays off for large collections, and the list being searched must be sorted beforehand.

You will also notice that this makes use of the `ConcurrentDictionary` class. It adds significant overhead, but it is thread-safe, and I needed that because of the number of threads I am managing asynchronously.
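Here is a rough worked example of why this matters. The sizes are illustrative, not measurements. Checking $n$ source records against a target list of $m$ records with a linear scan per lookup costs up to $n \cdot m$ comparisons. Sorting the target once and then binary-searching it costs roughly $m \log_2 m + n \log_2 m$. For $n = m = 10^6$, where $\log_2 10^6 \approx 20$:

$$
n \cdot m = 10^{6} \cdot 10^{6} = 10^{12}
\qquad \text{vs.} \qquad
(m + n)\log_2 m \approx 2 \times 10^{6} \cdot 20 = 4 \times 10^{7}
$$

That is about 25,000 times fewer comparisons. The gap keeps growing with list size because a factor of $m$ is replaced by $\log_2 m$.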

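```csharp
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Linq;

private ConcurrentDictionary<string, string> items;

private List<string> HashedListSource { get; set; }

private List<string> HashedListTarget { get; set; }

private void SetDifferences()
{
    // BinarySearch only works on a sorted list, so sort the target first.
    this.HashedListTarget.Sort();

    // OrderBy does not sort in place; it returns a new sequence, so keep the
    // result. Materializing it with ToList() also turns ElementAt(i) (a linear
    // walk over the dictionary) into an O(1) index lookup. This assumes
    // HashedListSource[i] corresponds to the i-th item ordered by value.
    var orderedItems = this.items.OrderBy(x => x.Value).ToList();

    for (int i = 0; i < this.HashedListSource.Count; i++)
    {
        // A negative result means the value is not in the target list.
        if (this.HashedListTarget.BinarySearch(this.HashedListSource[i]) < 0)
        {
            this.Mismatch.Add(orderedItems[i].Key);
        }
    }
}
```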
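The following is a minimal, self-contained sketch of the same sort-then-binary-search idea that can be run on its own. It assumes plain string lists, and `SortedDifference` and `Missing` are hypothetical names. It returns the missing values themselves rather than dictionary keys. It passes `StringComparer.Ordinal` to both `Sort` and `BinarySearch` so the two agree on ordering:

```csharp
using System;
using System.Collections.Generic;

public static class SortedDifference
{
    // Returns the items of source that do not appear in target, in source order.
    public static List<string> Missing(IEnumerable<string> source, IEnumerable<string> target)
    {
        // Copy and sort the target once: O(m log m). Sort and BinarySearch must
        // use the same comparer, or the search results are meaningless.
        var sortedTarget = new List<string>(target);
        sortedTarget.Sort(StringComparer.Ordinal);

        var missing = new List<string>();
        foreach (var item in source)
        {
            // BinarySearch returns a negative number when the item is absent: O(log m).
            if (sortedTarget.BinarySearch(item, StringComparer.Ordinal) < 0)
            {
                missing.Add(item);
            }
        }
        return missing;
    }
}
```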

Both images above were originally posted in a great article found here: http://letsalgorithm.blogspot.com/2012/02/intersecting-two-sorted-integer-arrays.html
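The linked article is about intersecting two sorted arrays, so one related option is worth knowing. This is a swapped-in technique, not what the code above does. If both lists are already sorted, a two-pointer merge sweep finds the missing items in a single O(n + m) pass instead of n separate binary searches. A minimal sketch, assuming both inputs are sorted with ordinal string comparison (`MergeDifference` is a hypothetical name):

```csharp
using System.Collections.Generic;

public static class MergeDifference
{
    // Assumes both lists are already sorted with ordinal comparison.
    // Returns the items of sortedSource that are absent from sortedTarget.
    public static List<string> Missing(IReadOnlyList<string> sortedSource, IReadOnlyList<string> sortedTarget)
    {
        var missing = new List<string>();
        int j = 0;
        for (int i = 0; i < sortedSource.Count; i++)
        {
            // Advance the target pointer past anything smaller than the current source item.
            while (j < sortedTarget.Count &&
                   string.CompareOrdinal(sortedTarget[j], sortedSource[i]) < 0)
            {
                j++;
            }

            // If the target is exhausted or its current item differs, the source item is missing.
            if (j == sortedTarget.Count ||
                string.CompareOrdinal(sortedTarget[j], sortedSource[i]) != 0)
            {
                missing.Add(sortedSource[i]);
            }
        }
        return missing;
    }
}
```

The pointer `j` never moves backwards, so each list is walked once. The catch is that both lists must be sorted with the same ordering, whereas the binary-search version only needs the target sorted.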

Hope this helps!
