I'm working with an existing Kinect dataset in OpenCV, without access to a Kinect. I would like to map the depth data to its RGB counterpart, so that I get both the actual color and the depth for each pixel.

Since I'm using OpenCV and C++ and don't own a Kinect, I can't use the `MapDepthFrameToColorFrame` method from the official Kinect API.

Using the given camera intrinsics and distortion coefficients, I map the depth to world coordinates and then back to RGB, based on the algorithm provided here:

    // Back-project a depth pixel (x, y) with metric depth into 3D coordinates.
    Vec3f depthToW( int x, int y, float depth ) {
        Vec3f result;
        result[0] = (float) (x - depthCX) * depth / depthFX;
        result[1] = (float) (y - depthCY) * depth / depthFY;
        result[2] = (float) depth;
        return result;
    }

    // Move a 3D point into the RGB camera frame and project it to an RGB pixel (x, y).
    Vec2i wToRGB( const Vec3f & point ) {
        Mat p3d( point );
        p3d = extRotation * p3d + extTranslation;

        float x = p3d.at<float>(0, 0);
        float y = p3d.at<float>(1, 0);
        float z = p3d.at<float>(2, 0);

        Vec2i result;
        result[0] = (int) round( (x * rgbFX / z) + rgbCX );
        result[1] = (int) round( (y * rgbFY / z) + rgbCY );
        return result;
    }

    void map( Mat& rgb, Mat& depth ) {
        /* intrinsics are the focal lengths and principal points (centers) of the cameras */
        undistort( rgb, rgb, rgbIntrinsic, rgbDistortion );
        undistort( depth, depth, depthIntrinsic, depthDistortion );

        Mat color = Mat( depth.size(), CV_8UC3, Scalar(0) );
        ushort * raw_image_ptr;

        for( int y = 0; y < depth.rows; y++ ) {
            raw_image_ptr = depth.ptr<ushort>( y );

            for( int x = 0; x < depth.cols; x++ ) {
                // skip invalid raw depth readings
                if( raw_image_ptr[x] >= 2047 || raw_image_ptr[x] <= 0 )
                    continue;

                // convert the raw reading to meters via the lookup table
                float depth_value = depthMeters[ raw_image_ptr[x] ];
                Vec3f depth_coord = depthToW( y, x, depth_value );
                Vec2i rgb_coord = wToRGB( depth_coord );
                color.at<Vec3b>(y, x) = rgb.at<Vec3b>(rgb_coord[0], rgb_coord[1]);
            }
        }
    }

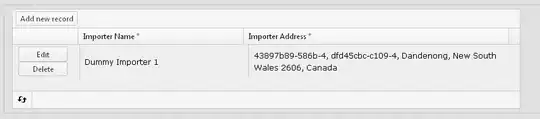

But the result seems to be misaligned. I can't manually set the translations, since the dataset is obtained from 3 different Kinects, and each of them are misaligned in different direction. You could see one of it below (Left: undistorted RGB, Middle: undistorted Depth, Right: mapped RGB to Depth)

My question is: what should I do at this point? Did I miss a step while projecting either depth to world or world back to RGB? Can anyone with experience with stereo cameras point out my missteps?
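To make the intended mapping easier to check in isolation, here is a minimal sketch of the projection chain as I understand it, written without OpenCV so it can be compiled on its own. All names in it (`Intrinsics`, `Extrinsics`, `backProject`, `transformPoint`, `projectToImage`, `depthToRgbPixel`) are hypothetical and exist only for this illustration; it assumes a plain pinhole model on already-undistorted images, with the extrinsics expressed in the same unit as the depth. It keeps the (x = column, y = row) ordering explicit and rejects points that land outside the RGB image, two things that may be worth comparing against the calls `depthToW( y, x, depth_value )` and `rgb.at<Vec3b>(rgb_coord[0], rgb_coord[1])` in my code above.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical pinhole intrinsics: focal lengths and principal point, in pixels.
struct Intrinsics { float fx, fy, cx, cy; };

// Hypothetical rigid transform from the depth camera frame to the RGB camera frame.
struct Extrinsics {
    float R[3][3];  // rotation matrix
    float t[3];     // translation, in the same unit as the depth values
};

struct Point3 { float x, y, z; };
struct Pixel  { int x, y; };  // x = column, y = row

// Back-project a depth pixel (x = column, y = row) with metric depth z into 3D.
Point3 backProject(const Intrinsics& k, int x, int y, float z) {
    assert(k.fx != 0.0f && k.fy != 0.0f);
    return Point3{ (x - k.cx) * z / k.fx, (y - k.cy) * z / k.fy, z };
}

// Apply p' = R * p + t.
Point3 transformPoint(const Extrinsics& e, const Point3& p) {
    return Point3{
        e.R[0][0] * p.x + e.R[0][1] * p.y + e.R[0][2] * p.z + e.t[0],
        e.R[1][0] * p.x + e.R[1][1] * p.y + e.R[1][2] * p.z + e.t[1],
        e.R[2][0] * p.x + e.R[2][1] * p.y + e.R[2][2] * p.z + e.t[2]
    };
}

// Project a 3D point into an image of size width x height.
// Returns false if the point is not in front of the camera or falls outside the image.
bool projectToImage(const Intrinsics& k, const Point3& p,
                    int width, int height, Pixel& out) {
    if (p.z <= 0.0f) return false;
    const int u = static_cast<int>(std::lround(p.x * k.fx / p.z + k.cx));
    const int v = static_cast<int>(std::lround(p.y * k.fy / p.z + k.cy));
    if (u < 0 || u >= width || v < 0 || v >= height) return false;
    out = Pixel{ u, v };
    return true;
}

// Full chain: depth pixel -> 3D point -> RGB camera frame -> RGB pixel.
bool depthToRgbPixel(const Intrinsics& depthK, const Intrinsics& rgbK,
                     const Extrinsics& depthToRgb,
                     int x, int y, float z,
                     int rgbWidth, int rgbHeight, Pixel& out) {
    const Point3 inDepthFrame = backProject(depthK, x, y, z);
    const Point3 inRgbFrame = transformPoint(depthToRgb, inDepthFrame);
    return projectToImage(rgbK, inRgbFrame, rgbWidth, rgbHeight, out);
}
```

With this layout, the color lookup in OpenCV would be indexed as `rgb.at<Vec3b>(out.y, out.x)`, since `Mat::at` takes (row, column). A quick sanity check is that identity extrinsics combined with identical intrinsics for both cameras should map every valid depth pixel back to itself.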