I wrote a small Console application to test the sizeof operator:

    using System;

    public class Program
    {
        // 'unsafe' is required to apply sizeof to user-defined structs.
        public static unsafe void Main(string[] args)
        {
            // Native
            Console.WriteLine("The size of bool is {0}.", sizeof(bool));
            Console.WriteLine("The size of short is {0}.", sizeof(short));
            Console.WriteLine("The size of int is {0}.", sizeof(int));
            Console.WriteLine("The size of long is {0}.", sizeof(long));

            // Custom
            Console.WriteLine("The size of Bool1 is {0}.", sizeof(Bool1));
            Console.WriteLine("The size of Bool2 is {0}.", sizeof(Bool2));
            Console.WriteLine("The size of Bool1Int1Bool1 is {0}.", sizeof(Bool1Int1Bool1));
            Console.WriteLine("The size of Bool2Int1 is {0}.", sizeof(Bool2Int1));
            Console.WriteLine("The size of Bool1Long1 is {0}.", sizeof(Bool1Long1));
            Console.WriteLine("The size of Bool1DateTime1 is {0}.", sizeof(Bool1DateTime1));

            Console.Read();
        }
    }

    public struct Bool1
    {
        private bool b1;
    }

    public struct Bool2
    {
        private bool b1;
        private bool b2;
    }

    public struct Bool1Int1Bool1
    {
        private bool b1;
        private int i1;
        private bool b2;
    }

    public struct Bool2Int1
    {
        private bool b1;
        private bool b2;
        private int i1;
    }

    public struct Bool1Long1
    {
        private bool b1;
        private long l1;
    }

    public struct Bool1DateTime1
    {
        private bool b1;
        private DateTime dt1;
    }

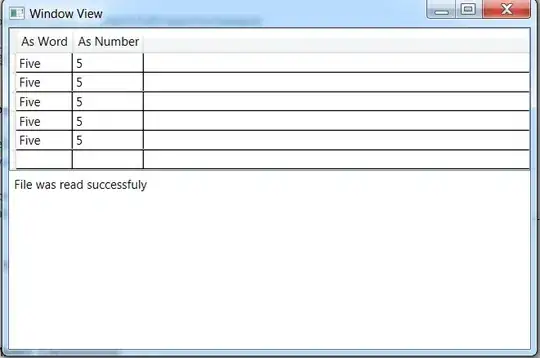

that gives the following output:

It seems that the order in which the fields are declared plays a role in the size of the structure.

I was expecting Bool1Int1Bool1 to have a size of 6 (1 + 4 + 1), but it gives 12 instead (4 + 4 + 4, I suppose?). So it seems that the compiler aligns the members by padding each of them to 4 bytes.

Does this change depending on whether I'm on a 32-bit or a 64-bit system?

Second question: in the test with the long type, the bool seems to be padded to 8 bytes this time.
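To make my guess concrete, here is a minimal sketch of the padding rule I am assuming: each field starts at an offset that is a multiple of its own size, and the total size is rounded up to a multiple of the largest field. This is only my assumption, not confirmed CLR behavior, and `LayoutSketch` and `PaddedSize` are hypothetical names I made up for illustration.

```csharp
using System;

public static class LayoutSketch
{
    // Hypothetical helper: size of a struct under the ASSUMED rule that
    // every field is aligned to its own size and the total is rounded up
    // to the largest field alignment. Not taken from any specification.
    public static int PaddedSize(params int[] fieldSizes)
    {
        int offset = 0;
        int maxAlign = 1;
        foreach (int size in fieldSizes)
        {
            maxAlign = Math.Max(maxAlign, size);
            offset = RoundUp(offset, size); // pad up to the field's alignment
            offset += size;                 // then place the field
        }
        return RoundUp(offset, maxAlign);   // trailing padding
    }

    private static int RoundUp(int value, int align)
    {
        return (value + align - 1) / align * align;
    }

    public static void Main()
    {
        Console.WriteLine(PaddedSize(1, 4, 1)); // bool, int, bool -> 12
        Console.WriteLine(PaddedSize(1, 1, 4)); // bool, bool, int -> 8
        Console.WriteLine(PaddedSize(1, 8));    // bool, long      -> 16
    }
}
```

Under this assumed rule, the declaration order matters because a bool placed between or after larger fields forces extra padding, which would be consistent with the 12 I see for Bool1Int1Bool1.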

Can anyone explain this?