Is it possible for my network's error to decrease and then increase again? I just want to check whether I'm coding it correctly.

2 Answers

Yes, it is possible for the error to temporarily increase. This is because you aren't training on the same input and expected output every time (and you shouldn't, because then the network would become specialized for that particular input-output pair). The neural network does not implicitly "know" that it is moving in the right direction; you are basically traversing the error surface to find a location where the error is below a certain threshold. So the error can definitely increase in a particular epoch, but overall it should decrease as backpropagation adjusts the weights in response to the error.
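One practical way to check your code, then, is to judge the error by its trend rather than by epoch-to-epoch changes. The sketch below assumes you record one error value per epoch in a Python list; the function names and the window size of 3 are illustrative choices, not a standard API, and the error values are made up.

```python
def moving_average(values, window):
    """Average of each run of `window` consecutive values."""
    return [sum(values[i:i + window]) / window
            for i in range(len(values) - window + 1)]


def is_trending_down(epoch_errors, window=3):
    """True if the smoothed error at the end is lower than at the start.

    Individual epochs are allowed to go up; only the overall trend is checked.
    """
    if len(epoch_errors) < window:
        raise ValueError("need at least `window` error values")
    smoothed = moving_average(epoch_errors, window)
    return smoothed[-1] < smoothed[0]


def count_increases(epoch_errors):
    """Number of epochs whose error is higher than the previous epoch's."""
    return sum(1 for prev, cur in zip(epoch_errors, epoch_errors[1:]) if cur > prev)


# Hypothetical per-epoch errors: several temporary increases, clear overall decrease.
errors = [0.90, 0.72, 0.78, 0.61, 0.65, 0.50, 0.47, 0.52, 0.41, 0.39]
print(count_increases(errors))   # 3
print(is_trending_down(errors))  # True
```

If `is_trending_down` stays `False` over many epochs, that is a better sign of a bug (or a bad learning rate) than any single epoch going up.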

For example, suppose you are trying to create a neural network that recognizes digits. You feed the network the input for a "1" along with the expected output. The output doesn't match, so you adjust the weights, which means the network will now have a lower error for a "1". But the next input could be a "4", and the error for that "4" could be larger, so the network adjusts itself again. The point is to find a "happy medium" for the weights, such that the network recognizes each input and produces the appropriate output within a certain error threshold.
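The "1" then "4" effect can be reproduced with a deliberately tiny, hypothetical setup: a single weight `w`, prediction `w * x`, and two training samples whose targets pull `w` in different directions (one prefers `w = 1`, the other `w = 3`). Updating after every sample (online gradient descent, assumed here for illustration) lowers the error on the sample just seen, but the total error over both samples sometimes goes up.

```python
def total_error(w, samples):
    """Halved sum of squared errors over all samples for prediction w * x."""
    return sum(0.5 * (w * x - y) ** 2 for x, y in samples)


def train_per_sample(samples, w=0.0, lr=0.5, epochs=3):
    """Update w after every single sample (online gradient descent).

    Returns the total error over *all* samples, measured before training
    and then after each individual update.
    """
    history = [total_error(w, samples)]
    for _ in range(epochs):
        for x, y in samples:
            grad = (w * x - y) * x   # derivative of 0.5 * (w*x - y)**2 w.r.t. w
            w -= lr * grad
            history.append(total_error(w, samples))
    return history


# Two "conflicting" training samples: A wants w = 1, B wants w = 3.
samples = [(1.0, 1.0), (1.0, 3.0)]
history = train_per_sample(samples)
print([round(e, 4) for e in history])
# [5.0, 3.25, 1.0625, 1.3906, 1.0352, 1.165, 1.0881]
```

The total error rises after the third and fifth updates (each time sample A is seen again), yet the overall trend is downward. Because the learning rate stays constant, `w` also keeps bouncing around the best value `w = 2` instead of settling on it.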

However, I am not sure what you mean by "decreases, and then increases until the last epoch". Are you only training for a certain number of epochs, or are you training until your network reaches a certain error threshold?
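Both options can live in the same loop. A minimal sketch, assuming a hypothetical `run_epoch` callable that stands in for your own code (one backpropagation pass over the training set, returning that epoch's error); `max_epochs` and `target_error` are illustrative parameter names.

```python
from typing import Callable, List, Tuple


def train(run_epoch: Callable[[], float],
          max_epochs: int,
          target_error: float) -> Tuple[List[float], str]:
    """Call run_epoch() repeatedly; stop at target_error or after max_epochs."""
    errors: List[float] = []
    for _ in range(max_epochs):
        error = run_epoch()
        errors.append(error)
        if error <= target_error:       # error-threshold criterion
            return errors, "reached error threshold"
    return errors, "hit epoch limit"    # fixed-number-of-epochs criterion


# Usage with a fake, pre-recorded error sequence (purely illustrative).
fake_errors = iter([0.9, 0.7, 0.75, 0.4, 0.08, 0.05])
errors, reason = train(lambda: next(fake_errors), max_epochs=10, target_error=0.1)
print(errors, reason)  # [0.9, 0.7, 0.75, 0.4, 0.08] reached error threshold
```

The temporary rise to 0.75 does not stop training; only reaching the threshold or the epoch limit does.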


I have finally figured out the error. And yes, you're right that it temporarily increases and then decreases as the program goes through the last epochs. Never mind the latter part of my question. Thanks for answering. – Rachelle Apr 24 '13 at 18:14

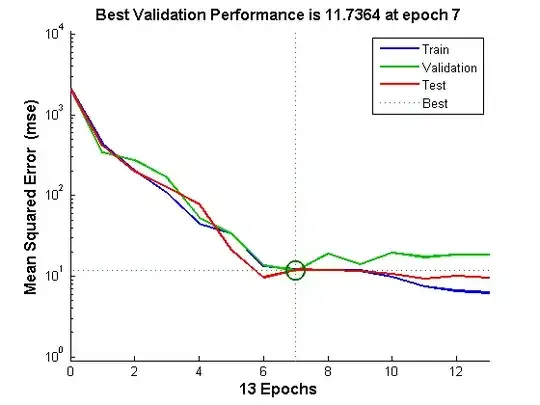

As a complement to Vivin Paliath's answer, here is how a typical training looks like:

Note that if the network is trained with too large a learning rate, training performance can collapse:
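A one-parameter quadratic makes the effect easy to see. For f(w) = 0.5 * a * w**2, each gradient descent step multiplies `w` by `(1 - lr * a)`, so the error shrinks when `0 < lr < 2 / a` and grows when `lr > 2 / a`. Real error surfaces are not this simple, so treat this as an illustration of overshooting rather than a model of any particular network; the values of `a`, `lr`, and the starting point are arbitrary.

```python
def gradient_descent(lr, a=1.0, w=1.0, steps=10):
    """Minimise f(w) = 0.5 * a * w**2 by gradient descent.

    Returns the value of f after each step.
    """
    losses = []
    for _ in range(steps):
        w -= lr * a * w            # gradient of f is a * w
        losses.append(0.5 * a * w ** 2)
    return losses


small = gradient_descent(lr=0.5)   # factor 1 - 0.5 = 0.5: error shrinks every step
large = gradient_descent(lr=2.5)   # factor 1 - 2.5 = -1.5: each step overshoots further
print(small[-1] < small[0])        # True
print(large[-1] > large[0])        # True
```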
